Introduction – Why YOLO Changed Everything

Before YOLO, computers did not “see” the world the way humans do.

Object detection systems were careful, slow, and fragmented. They first proposed regions that might contain objects, then classified each region separately. Detection worked—but it felt like solving a puzzle one piece at a time.

In 2015, YOLO—You Only Look Once—introduced a radical idea:

What if we detect everything in one single forward pass?

Instead of multiple stages, YOLO treated detection as a single regression problem from pixels to bounding boxes and class probabilities.

This guide walks through how to implement YOLO completely from scratch in PyTorch, covering:

Mathematical formulation

Network architecture

Target encoding

Loss implementation

Training on COCO-style data

mAP evaluation

Visualization & debugging

Inference with NMS

Anchor-box extension

1) What YOLO means (and what we’ll build)

YOLO (You Only Look Once) is a family of object detection models that predict bounding boxes and class probabilities in one forward pass. Unlike older multi-stage pipelines (proposal → refine → classify), YOLO-style detectors are dense predictors: they predict candidate boxes at many locations and scales, then filter them.

There are two “eras” of YOLO-like detectors:

YOLOv1-style (grid cells, no anchors): each grid cell predicts a few boxes directly.

Anchor-based YOLO (YOLOv2/3 and many derivatives): each grid cell predicts offsets relative to pre-defined anchor shapes; multiple scales predict small/medium/large objects.

What we’ll implement

A modern, anchor-based YOLO-style detector with:

Multi-scale heads (e.g., 3 scales)

Anchor matching (target assignment)

Loss with box regression + objectness + classification

Decoding + NMS

mAP evaluation

COCO/custom dataset training support

We’ll keep the architecture understandable rather than exotic. You can later swap in a bigger backbone easily.

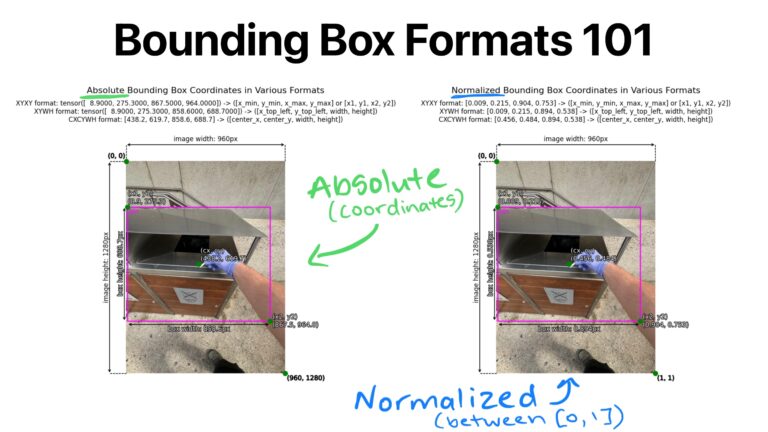

2) Bounding box formats and coordinate systems

You must be consistent. Most training bugs come from box format confusion.

Common box formats:

XYXY:

(x1, y1, x2, y2)top-left & bottom-rightXYWH:

(cx, cy, w, h)center and sizeNormalized: coordinates in

[0, 1]relative to image sizeAbsolute: pixel coordinates

Recommended internal convention

Store dataset annotations as absolute XYXY in pixels.

Convert to normalized only if needed, but keep one standard.

Why XYXY is nice:

Intersection/union is straightforward.

Clamping to image bounds is simple.

3) IoU, GIoU, DIoU, CIoU

IoU (Intersection over Union) is the standard overlap metric:

IoU=∣A∩B∣/∣A∪B∣

But IoU has a problem: if boxes don’t overlap, IoU = 0, gradient can be weak. Modern detectors often use improved regression losses:

GIoU: adds penalty for non-overlapping boxes based on smallest enclosing box

DIoU: penalizes center distance

CIoU: DIoU + aspect ratio consistency

Practical rule:

If you want a strong default: CIoU for box regression.

If you want simpler: GIoU works well too.

We’ll implement IoU + CIoU (with safe numerics).

4) Anchor-based YOLO: grids, anchors, predictions

A YOLO head predicts at each grid location. Suppose a feature map is S x S (e.g., 80×80). Each cell can predict A anchors (e.g., 3). For each anchor, prediction is:

Box offsets:

tx, ty, tw, thObjectness logit:

toClass logits:

tc1..tcC

So tensor shape per scale is:(B, A*(5+C), S, S) or (B, A, S, S, 5+C) after reshaping.

How offsets become real boxes

A common YOLO-style decode (one of several valid variants):

bx = (sigmoid(tx) + cx) / Sby = (sigmoid(ty) + cy) / Sbw = (anchor_w * exp(tw)) / img_w(or normalized by S)bh = (anchor_h * exp(th)) / img_h

Where (cx, cy) is the integer grid coordinate.

Important: Your encode/decode must match your target assignment encoding.

5) Dataset preparation

Annotation formats

Your custom dataset can be:

COCO JSON

Pascal VOC XML

YOLO txt (class cx cy w h normalized)

We’ll support a generic internal representation:

Each sample returns:

image: Tensor [3, H, W]targets: Tensor [N, 6]with columns:[class, x1, y1, x2, y2, image_index(optional)]

Augmentations

For object detection, augmentations must transform boxes too:

Resize / letterbox

Random horizontal flip

Color jitter

Random affine (optional)

Mosaic/mixup (advanced; optional)

To keep this guide implementable without fragile geometry, we’ll do:

resize/letterbox

random flip

HSV jitter (optional)

6) Building blocks: Conv-BN-Act, residuals, necks

A clean baseline module:

Conv2d -> BatchNorm2d -> SiLU

SiLU (a.k.a. Swish) is common in YOLOv5-like families; LeakyReLU is common in YOLOv3.

We can optionally add residual blocks for a stronger backbone, but even a small backbone can work to validate the pipeline.

7) Model design

A typical structure:

Backbone: extracts feature maps at multiple strides (8, 16, 32)

Neck: combines features (FPN / PAN)

Head: predicts detection outputs per scale

We’ll implement a lightweight backbone that produces 3 feature maps and a simple FPN-like neck.

8) Decoding predictions

At inference:

Reshape outputs per scale to

(B, A, S, S, 5+C)Apply sigmoid to center offsets + objectness (and often class probs)

Convert to XYXY in pixel coordinates

Flatten all scales into one list of candidate boxes

Filter by confidence threshold

Apply NMS per class (or class-agnostic NMS)

9) Target assignment (matching GT to anchors)

This is the heart of anchor-based YOLO.

For each ground-truth box:

Determine which scale(s) should handle it (based on size / anchor match).

For the chosen scale, compute IoU between GT box size and each anchor size (in that scale’s coordinate system).

Select best anchor (or top-k anchors).

Compute the grid cell index from the GT center.

Fill the target tensors at

[anchor, gy, gx]with:box regression targets

objectness = 1

class target

Encoding regression targets

If using decode:

bx = (sigmoid(tx) + cx)/S

then target fortxissigmoid^-1(bx*S - cx)but that’s messy.

Instead, YOLO-style training often directly supervises:

tx_target = bx*S - cx(a value in [0,1]) and trains with BCE on sigmoid output, or MSE on raw.tw_target = log(bw / anchor_w)(in pixels or normalized units)

We’ll implement a stable variant:

predict

pxy = sigmoid(tx,ty)and supervise pxy with BCE/MSE to match fractional offsetspredict

pwh = exp(tw,th)*anchorand supervise with CIoU on decoded boxes (recommended)

That’s simpler: do regression loss on decoded boxes, not on tw/th directly.

10) Loss functions

YOLO-style loss usually has:

Box loss: CIoU/GIoU between predicted box and GT box at responsible locations

Objectness loss: BCEWithLogits on objectness logit

Class loss: BCEWithLogits (multi-label) or CE (single-label)

For single-label classification (one class per object), either works:

BCEWithLogits with one-hot targets (common in YOLO)

CrossEntropyLoss on class logits at positive locations (also fine)

We’ll use BCEWithLogits for both objectness and classes for consistency.

Handling negatives

You’ll have far more negative (no object) positions. You can:

Use a lower weight for negative objectness

Or apply focal loss (optional)

We’ll implement:

objectness loss with positive and negative weights.

11) Training loop

Key features for stability/performance:

AMP (torch.cuda.amp)

Gradient clipping (optional)

EMA weights (optional but helpful)

LR scheduler (cosine or step)

Warmup for first few epochs/steps

Use this on screenshots from Playwright:

12) NMS

Non-Max Suppression removes overlapping duplicates. Typical procedure:

Sort boxes by confidence

Iterate highest conf, suppress boxes with IoU > threshold

Use class-wise NMS for multi-class detection.

13) mAP evaluation

Mean Average Precision requires:

For each class, compute precision-recall curve at IoU thresholds

Integrate area under curve (AP)

Average across classes (mAP)

COCO uses mAP across IoU thresholds 0.50 to 0.95 step 0.05

We’ll implement:

mAP@0.5

and optionally COCO-style mAP@[.5:.95]

14) Visualization

Before training seriously, visualize:

target assignments per scale

decoded predictions after a few iterations

NMS outputs

This catches 80% of “my model doesn’t learn” issues.

15) Full Core Implementation (Reference Code)

Below is a compact but complete set of core files you can place into a repo. It’s not “tiny,” but it’s readable and engineered for correctness.

15.1 Repo structure

yolo_scratch/

README.md

train.py

eval.py

predict.py

yolo/

__init__.py

model.py

modules.py

loss.py

assigner.py

box_ops.py

nms.py

metrics.py

data.py

transforms.py

utils.py

configs/

coco.yaml

custom.yaml

yolo_scratch/

README.md

train.py

eval.py

predict.py

yolo/

__init__.py

model.py

modules.py

loss.py

assigner.py

box_ops.py

nms.py

metrics.py

data.py

transforms.py

utils.py

configs/

coco.yaml

custom.yaml

15.2 yolo/box_ops.py

import torch

def xyxy_to_xywh(boxes: torch.Tensor) -> torch.Tensor:

# boxes: [..., 4]

x1, y1, x2, y2 = boxes.unbind(-1)

cx = (x1 + x2) * 0.5

cy = (y1 + y2) * 0.5

w = (x2 - x1).clamp(min=0)

h = (y2 - y1).clamp(min=0)

return torch.stack([cx, cy, w, h], dim=-1)

def xywh_to_xyxy(boxes: torch.Tensor) -> torch.Tensor:

cx, cy, w, h = boxes.unbind(-1)

half_w = w * 0.5

half_h = h * 0.5

x1 = cx - half_w

y1 = cy - half_h

x2 = cx + half_w

y2 = cy + half_h

return torch.stack([x1, y1, x2, y2], dim=-1)

def box_iou_xyxy(boxes1: torch.Tensor, boxes2: torch.Tensor, eps: float = 1e-9) -> torch.Tensor:

# boxes1: [N,4], boxes2: [M,4]

x11, y11, x12, y12 = boxes1[:, 0], boxes1[:, 1], boxes1[:, 2], boxes1[:, 3]

x21, y21, x22, y22 = boxes2[:, 0], boxes2[:, 1], boxes2[:, 2], boxes2[:, 3]

inter_x1 = torch.maximum(x11[:, None], x21[None, :])

inter_y1 = torch.maximum(y11[:, None], y21[None, :])

inter_x2 = torch.minimum(x12[:, None], x22[None, :])

inter_y2 = torch.minimum(y12[:, None], y22[None, :])

inter_w = (inter_x2 - inter_x1).clamp(min=0)

inter_h = (inter_y2 - inter_y1).clamp(min=0)

inter = inter_w * inter_h

area1 = (x12 - x11).clamp(min=0) * (y12 - y11).clamp(min=0)

area2 = (x22 - x21).clamp(min=0) * (y22 - y21).clamp(min=0)

union = area1[:, None] + area2[None, :] - inter

return inter / (union + eps)

def ciou_loss_xyxy(pred: torch.Tensor, target: torch.Tensor, eps: float = 1e-7) -> torch.Tensor:

"""

pred, target: [N,4] in xyxy

Returns: [N] CIoU loss = 1 - CIoU

"""

# IoU

iou = box_iou_xyxy(pred, target).diag() # [N]

# centers and sizes

p = xyxy_to_xywh(pred)

t = xyxy_to_xywh(target)

pcx, pcy, pw, ph = p.unbind(-1)

tcx, tcy, tw, th = t.unbind(-1)

# center distance

center_dist2 = (pcx - tcx) ** 2 + (pcy - tcy) ** 2

# smallest enclosing box diagonal squared

x1 = torch.minimum(pred[:, 0], target[:, 0])

y1 = torch.minimum(pred[:, 1], target[:, 1])

x2 = torch.maximum(pred[:, 2], target[:, 2])

y2 = torch.maximum(pred[:, 3], target[:, 3])

c2 = (x2 - x1) ** 2 + (y2 - y1) ** 2 + eps

diou = iou - center_dist2 / c2

# aspect ratio penalty

v = (4 / (torch.pi ** 2)) * (torch.atan(tw / (th + eps)) - torch.atan(pw / (ph + eps))) ** 2

with torch.no_grad():

alpha = v / (1 - iou + v + eps)

ciou = diou - alpha * v

return 1 - ciou.clamp(min=-1.0, max=1.0)

15.3 yolo/nms.py

import torch

from .box_ops import box_iou_xyxy

def nms_xyxy(boxes: torch.Tensor, scores: torch.Tensor, iou_thresh: float = 0.5) -> torch.Tensor:

"""

boxes: [N,4], scores: [N]

returns indices kept

"""

if boxes.numel() == 0:

return torch.empty((0,), dtype=torch.long, device=boxes.device)

idxs = scores.argsort(descending=True)

keep = []

while idxs.numel() > 0:

i = idxs[0]

keep.append(i)

if idxs.numel() == 1:

break

rest = idxs[1:]

ious = box_iou_xyxy(boxes[i].unsqueeze(0), boxes[rest]).squeeze(0)

idxs = rest[ious <= iou_thresh]

return torch.stack(keep)

def batched_nms_xyxy(boxes, scores, labels, iou_thresh=0.5):

"""

Class-wise NMS by offsetting boxes or by filtering per class.

Here: filter per class (clear and correct).

"""

keep_all = []

for c in labels.unique():

mask = labels == c

keep = nms_xyxy(boxes[mask], scores[mask], iou_thresh)

keep_all.append(mask.nonzero(as_tuple=False).squeeze(1)[keep])

if not keep_all:

return torch.empty((0,), dtype=torch.long, device=boxes.device)

return torch.cat(keep_all)

15.4 yolo/modules.py

import torch

import torch.nn as nn

class ConvBNAct(nn.Module):

def __init__(self, in_ch, out_ch, k=3, s=1, p=None, act=True):

super().__init__()

if p is None:

p = k // 2

self.conv = nn.Conv2d(in_ch, out_ch, k, s, p, bias=False)

self.bn = nn.BatchNorm2d(out_ch)

self.act = nn.SiLU(inplace=True) if act else nn.Identity()

def forward(self, x):

return self.act(self.bn(self.conv(x)))

class Residual(nn.Module):

def __init__(self, ch):

super().__init__()

self.block = nn.Sequential(

ConvBNAct(ch, ch, 1, 1),

ConvBNAct(ch, ch, 3, 1),

)

def forward(self, x):

return x + self.block(x)

class CSPBlock(nn.Module):

"""

Light CSP-like block: split channels, apply residuals on one branch, then concat.

"""

def __init__(self, ch, n=1):

super().__init__()

c_ = ch // 2

self.conv1 = ConvBNAct(ch, c_, 1, 1)

self.conv2 = ConvBNAct(ch, c_, 1, 1)

self.m = nn.Sequential(*[Residual(c_) for _ in range(n)])

self.conv3 = ConvBNAct(2 * c_, ch, 1, 1)

def forward(self, x):

y1 = self.m(self.conv1(x))

y2 = self.conv2(x)

return self.conv3(torch.cat([y1, y2], dim=1))

15.5 yolo/model.py

import torch

import torch.nn as nn

from .modules import ConvBNAct, CSPBlock

class TinyBackbone(nn.Module):

"""

Produces 3 feature maps at strides 8, 16, 32.

"""

def __init__(self, in_ch=3, base=32):

super().__init__()

self.stem = nn.Sequential(

ConvBNAct(in_ch, base, 3, 2), # stride 2

ConvBNAct(base, base*2, 3, 2), # stride 4

CSPBlock(base*2, n=1),

)

self.stage3 = nn.Sequential(

ConvBNAct(base*2, base*4, 3, 2), # stride 8

CSPBlock(base*4, n=2),

)

self.stage4 = nn.Sequential(

ConvBNAct(base*4, base*8, 3, 2), # stride 16

CSPBlock(base*8, n=2),

)

self.stage5 = nn.Sequential(

ConvBNAct(base*8, base*16, 3, 2), # stride 32

CSPBlock(base*16, n=1),

)

def forward(self, x):

x = self.stem(x)

p3 = self.stage3(x)

p4 = self.stage4(p3)

p5 = self.stage5(p4)

return p3, p4, p5

class SimpleFPN(nn.Module):

def __init__(self, ch3, ch4, ch5, out_ch=128):

super().__init__()

self.lat5 = ConvBNAct(ch5, out_ch, 1, 1)

self.lat4 = ConvBNAct(ch4, out_ch, 1, 1)

self.lat3 = ConvBNAct(ch3, out_ch, 1, 1)

self.out4 = ConvBNAct(out_ch, out_ch, 3, 1)

self.out3 = ConvBNAct(out_ch, out_ch, 3, 1)

def forward(self, p3, p4, p5):

p5 = self.lat5(p5)

p4 = self.lat4(p4) + torch.nn.functional.interpolate(p5, scale_factor=2, mode="nearest")

p3 = self.lat3(p3) + torch.nn.functional.interpolate(p4, scale_factor=2, mode="nearest")

p4 = self.out4(p4)

p3 = self.out3(p3)

return p3, p4, p5

class DetectHead(nn.Module):

def __init__(self, in_ch, num_anchors, num_classes):

super().__init__()

self.num_anchors = num_anchors

self.num_classes = num_classes

self.pred = nn.Conv2d(in_ch, num_anchors * (5 + num_classes), 1, 1, 0)

def forward(self, x):

return self.pred(x)

class YOLO(nn.Module):

def __init__(self, num_classes, anchors, base=32):

"""

anchors: list of 3 scales, each is list of (w,h) in pixels for the model input size (e.g., 640)

e.g. [

[(10,13),(16,30),(33,23)], # stride 8

[(30,61),(62,45),(59,119)], # stride 16

[(116,90),(156,198),(373,326)] # stride 32

]

"""

super().__init__()

self.num_classes = num_classes

self.anchors = anchors

self.backbone = TinyBackbone(in_ch=3, base=base)

# backbone channels: p3=base*4, p4=base*8, p5=base*16

self.fpn = SimpleFPN(base*4, base*8, base*16, out_ch=base*4)

na = len(anchors[0])

self.head3 = DetectHead(base*4, na, num_classes)

self.head4 = DetectHead(base*4, na, num_classes)

self.head5 = DetectHead(base*4, na, num_classes)

def forward(self, x):

p3, p4, p5 = self.backbone(x)

f3, f4, f5 = self.fpn(p3, p4, p5)

o3 = self.head3(f3)

o4 = self.head4(f4)

o5 = self.head5(f5)

return [o3, o4, o5]

15.6 yolo/assigner.py (target assignment)

import torch

def build_targets(

targets, # list of length B, each: Tensor [Ni, 5] -> (cls, x1, y1, x2, y2) in pixels

anchors, # per scale: list of (w,h) in pixels at input size

strides, # [8,16,32]

img_size, # int, e.g. 640

num_classes,

device

):

"""

Returns per-scale target tensors:

tbox: list of [B, A, S, S, 4] in xyxy pixels

tobj: list of [B, A, S, S] (0/1)

tcls: list of [B, A, S, S, C] one-hot

indices: list of tuples for positives (b, a, gy, gx)

"""

B = len(targets)

out = []

indices_all = []

for scale_idx, (anc, stride) in enumerate(zip(anchors, strides)):

S = img_size // stride

A = len(anc)

tbox = torch.zeros((B, A, S, S, 4), device=device)

tobj = torch.zeros((B, A, S, S), device=device)

tcls = torch.zeros((B, A, S, S, num_classes), device=device)

indices = []

anc_wh = torch.tensor(anc, device=device, dtype=torch.float32) # [A,2]

for b in range(B):

if targets[b].numel() == 0:

continue

gt = targets[b].to(device)

cls = gt[:, 0].long()

x1y1 = gt[:, 1:3]

x2y2 = gt[:, 3:5]

gxy = (x1y1 + x2y2) * 0.5

gwh = (x2y2 - x1y1).clamp(min=1.0)

# pick best anchor by IoU of width/height (approx)

# IoU(wh) = min(w)/max(w) * min(h)/max(h)

wh = gwh[:, None, :] # [N,1,2]

min_wh = torch.minimum(wh, anc_wh[None, :, :])

max_wh = torch.maximum(wh, anc_wh[None, :, :])

iou_wh = (min_wh[..., 0] / max_wh[..., 0]) * (min_wh[..., 1] / max_wh[..., 1]) # [N,A]

best_a = torch.argmax(iou_wh, dim=1) # [N]

# grid cell

gx = (gxy[:, 0] / stride).clamp(min=0, max=S-1e-3)

gy = (gxy[:, 1] / stride).clamp(min=0, max=S-1e-3)

gi = gx.long()

gj = gy.long()

for i in range(gt.shape[0]):

a = best_a[i].item()

x1, y1, x2, y2 = gt[i, 1:].tolist()

# assign

tobj[b, a, gj[i], gi[i]] = 1.0

tbox[b, a, gj[i], gi[i]] = torch.tensor([x1, y1, x2, y2], device=device)

tcls[b, a, gj[i], gi[i], cls[i]] = 1.0

indices.append((b, a, gj[i].item(), gi[i].item()))

out.append((tbox, tobj, tcls))

indices_all.append(indices)

return out, indices_all

15.7 yolo/loss.py

import torch

import torch.nn as nn

from .box_ops import xywh_to_xyxy, ciou_loss_xyxy

class YOLOLoss(nn.Module):

def __init__(self, anchors, strides, num_classes, img_size,

lambda_box=7.5, lambda_obj=1.0, lambda_cls=1.0,

obj_pos_weight=1.0, obj_neg_weight=0.5):

super().__init__()

self.anchors = anchors

self.strides = strides

self.num_classes = num_classes

self.img_size = img_size

self.lambda_box = lambda_box

self.lambda_obj = lambda_obj

self.lambda_cls = lambda_cls

self.bce = nn.BCEWithLogitsLoss(reduction="none")

self.obj_pos_weight = obj_pos_weight

self.obj_neg_weight = obj_neg_weight

def decode_scale(self, pred, scale_idx):

"""

pred: [B, A*(5+C), S, S]

returns:

boxes_xyxy: [B, A, S, S, 4] in pixels

obj_logit: [B, A, S, S]

cls_logit: [B, A, S, S, C]

"""

B, _, S, _ = pred.shape

A = len(self.anchors[scale_idx])

C = self.num_classes

stride = self.strides[scale_idx]

pred = pred.view(B, A, 5 + C, S, S).permute(0, 1, 3, 4, 2).contiguous()

# [B, A, S, S, 5+C]

tx_ty = pred[..., 0:2]

tw_th = pred[..., 2:4]

obj = pred[..., 4]

cls = pred[..., 5:]

# grid

gy, gx = torch.meshgrid(torch.arange(S, device=pred.device),

torch.arange(S, device=pred.device), indexing="ij")

grid = torch.stack([gx, gy], dim=-1).float() # [S,S,2]

# anchors

anc = torch.tensor(self.anchors[scale_idx], device=pred.device).float() # [A,2]

anc = anc.view(1, A, 1, 1, 2)

# decode center

pxy = (tx_ty.sigmoid() + grid.view(1, 1, S, S, 2)) * stride # pixels

# decode wh

pwh = (tw_th.exp() * anc) # pixels

boxes_xywh = torch.cat([pxy, pwh], dim=-1)

boxes_xyxy = xywh_to_xyxy(boxes_xywh)

return boxes_xyxy, obj, cls

def forward(self, preds, targets_per_scale):

"""

preds: list of 3 scale outputs

targets_per_scale: list of (tbox, tobj, tcls) per scale

"""

total_box = torch.tensor(0.0, device=preds[0].device)

total_obj = torch.tensor(0.0, device=preds[0].device)

total_cls = torch.tensor(0.0, device=preds[0].device)

for s, pred in enumerate(preds):

tbox, tobj, tcls = targets_per_scale[s]

pbox, pobj_logit, pcls_logit = self.decode_scale(pred, s)

# objectness loss with weighting

obj_loss = self.bce(pobj_logit, tobj)

w = torch.where(tobj > 0.5,

torch.full_like(obj_loss, self.obj_pos_weight),

torch.full_like(obj_loss, self.obj_neg_weight))

total_obj = total_obj + (obj_loss * w).mean()

# positives mask

pos = tobj > 0.5

if pos.any():

# box loss CIoU

pbox_pos = pbox[pos]

tbox_pos = tbox[pos]

box_loss = ciou_loss_xyxy(pbox_pos, tbox_pos).mean()

total_box = total_box + box_loss

# class loss

cls_loss = self.bce(pcls_logit[pos], tcls[pos]).mean()

total_cls = total_cls + cls_loss

loss = self.lambda_box * total_box + self.lambda_obj * total_obj + self.lambda_cls * total_cls

return loss, {"box": total_box.detach(), "obj": total_obj.detach(), "cls": total_cls.detach()}

15.8 yolo/utils.py

import torch

def set_seed(seed=42):

import random, numpy as np

random.seed(seed)

np.random.seed(seed)

torch.manual_seed(seed)

torch.cuda.manual_seed_all(seed)

class AverageMeter:

def __init__(self):

self.sum = 0.0

self.count = 0

def update(self, v, n=1):

self.sum += float(v) * n

self.count += n

@property

def avg(self):

return self.sum / max(1, self.count)

15.9 yolo/transforms.py (simple letterbox + flip)

import torch

import torchvision.transforms.functional as TF

def letterbox(image, boxes_xyxy, new_size=640):

"""

image: PIL or Tensor [C,H,W]

boxes_xyxy: Tensor [N,4] in pixels

returns resized/padded image and transformed boxes

"""

if not torch.is_tensor(image):

image = TF.to_tensor(image)

c, h, w = image.shape

scale = min(new_size / h, new_size / w)

nh, nw = int(round(h * scale)), int(round(w * scale))

image_resized = TF.resize(image, [nh, nw])

pad_h = new_size - nh

pad_w = new_size - nw

top = pad_h // 2

left = pad_w // 2

image_padded = torch.zeros((c, new_size, new_size), dtype=image.dtype)

image_padded[:, top:top+nh, left:left+nw] = image_resized

if boxes_xyxy.numel() > 0:

boxes = boxes_xyxy.clone()

boxes *= scale

boxes[:, [0, 2]] += left

boxes[:, [1, 3]] += top

else:

boxes = boxes_xyxy

return image_padded, boxes

def random_hflip(image, boxes_xyxy, p=0.5):

if torch.rand(()) > p:

return image, boxes_xyxy

c, h, w = image.shape

image = torch.flip(image, dims=[2]) # flip width

boxes = boxes_xyxy.clone()

if boxes.numel() > 0:

x1 = boxes[:, 0].clone()

x2 = boxes[:, 2].clone()

boxes[:, 0] = (w - x2)

boxes[:, 2] = (w - x1)

return image, boxes

15.10 yolo/data.py (custom dataset skeleton)

import os

import torch

from torch.utils.data import Dataset

from PIL import Image

from .transforms import letterbox, random_hflip

class DetectionDataset(Dataset):

"""

Expects a list of samples where each sample has:

- image_path

- annotations: list of [cls, x1, y1, x2, y2] in pixels

You can write adapters to load COCO or YOLO txt into this format.

"""

def __init__(self, samples, img_size=640, augment=True):

self.samples = samples

self.img_size = img_size

self.augment = augment

def __len__(self):

return len(self.samples)

def __getitem__(self, idx):

s = self.samples[idx]

img = Image.open(s["image_path"]).convert("RGB")

ann = s.get("annotations", [])

if len(ann) > 0:

target = torch.tensor(ann, dtype=torch.float32) # [N,5]

else:

target = torch.zeros((0,5), dtype=torch.float32)

cls = target[:, 0:1]

boxes = target[:, 1:5]

img, boxes = letterbox(img, boxes, self.img_size)

if self.augment:

img, boxes = random_hflip(img, boxes, p=0.5)

# normalize image

img = img.clamp(0, 1)

# pack back

if boxes.numel() > 0:

target = torch.cat([cls, boxes], dim=1)

else:

target = torch.zeros((0,5), dtype=torch.float32)

return img, target

def collate_fn(batch):

images, targets = zip(*batch)

images = torch.stack(images, dim=0)

# targets remains list[Tensor]

return images, list(targets)

15.11 train.py (end-to-end training loop)

import torch

from torch.utils.data import DataLoader

from yolo.model import YOLO

from yolo.loss import YOLOLoss

from yolo.assigner import build_targets

from yolo.utils import set_seed, AverageMeter

from yolo.data import DetectionDataset, collate_fn

def train_one_epoch(model, loss_fn, loader, optimizer, device, anchors, strides, img_size, num_classes, scaler=None):

model.train()

meter = AverageMeter()

for images, targets_list in loader:

images = images.to(device)

# build targets per scale

targets_per_scale, _ = build_targets(

targets_list, anchors=anchors, strides=strides,

img_size=img_size, num_classes=num_classes, device=device

)

targets_per_scale = [(tbox, tobj, tcls) for (tbox, tobj, tcls) in targets_per_scale]

optimizer.zero_grad(set_to_none=True)

if scaler is not None:

with torch.cuda.amp.autocast():

preds = model(images)

loss, logs = loss_fn(preds, targets_per_scale)

scaler.scale(loss).backward()

scaler.step(optimizer)

scaler.update()

else:

preds = model(images)

loss, logs = loss_fn(preds, targets_per_scale)

loss.backward()

optimizer.step()

meter.update(loss.item(), n=images.size(0))

return meter.avg

def main():

set_seed(42)

device = "cuda" if torch.cuda.is_available() else "cpu"

img_size = 640

num_classes = 80 # COCO example

strides = [8, 16, 32]

anchors = [

[(10,13),(16,30),(33,23)],

[(30,61),(62,45),(59,119)],

[(116,90),(156,198),(373,326)]

]

# TODO: load your samples list here

samples = [] # [{"image_path": "...", "annotations": [[cls,x1,y1,x2,y2], ...]}, ...]

ds = DetectionDataset(samples, img_size=img_size, augment=True)

loader = DataLoader(ds, batch_size=8, shuffle=True, num_workers=4, pin_memory=True, collate_fn=collate_fn)

model = YOLO(num_classes=num_classes, anchors=anchors, base=32).to(device)

loss_fn = YOLOLoss(anchors, strides, num_classes, img_size).to(device)

optimizer = torch.optim.AdamW(model.parameters(), lr=2e-4, weight_decay=1e-4)

scaler = torch.cuda.amp.GradScaler() if device == "cuda" else None

for epoch in range(1, 101):

avg_loss = train_one_epoch(model, loss_fn, loader, optimizer, device, anchors, strides, img_size, num_classes, scaler)

print(f"Epoch {epoch:03d} | loss={avg_loss:.4f}")

torch.save(model.state_dict(), "yolo_scratch.pt")

if __name__ == "__main__":

main()

16) Inference: decoding + NMS (predict pipeline)

16.1 Decoding helper (add to yolo/metrics.py or yolo/utils.py)

import torch

from .box_ops import xywh_to_xyxy

from .nms import batched_nms_xyxy

@torch.no_grad()

def decode_predictions(preds, anchors, strides, num_classes, conf_thresh=0.25, iou_thresh=0.5):

"""

preds: list of 3 tensors [B, A*(5+C), S, S]

returns per image: boxes [M,4], scores [M], labels [M]

"""

outputs = []

for b in range(preds[0].shape[0]):

boxes_all = []

scores_all = []

labels_all = []

for s, p in enumerate(preds):

B, _, S, _ = p.shape

A = len(anchors[s])

C = num_classes

stride = strides[s]

x = p[b:b+1].view(1, A, 5+C, S, S).permute(0,1,3,4,2).contiguous()[0] # [A,S,S,5+C]

tx_ty = x[..., 0:2]

tw_th = x[..., 2:4]

obj_logit = x[..., 4]

cls_logit = x[..., 5:]

gy, gx = torch.meshgrid(torch.arange(S, device=p.device),

torch.arange(S, device=p.device), indexing="ij")

grid = torch.stack([gx, gy], dim=-1).float() # [S,S,2]

anc = torch.tensor(anchors[s], device=p.device).float().view(A,1,1,2)

pxy = (tx_ty.sigmoid() + grid) * stride

pwh = tw_th.exp() * anc

boxes_xywh = torch.cat([pxy, pwh], dim=-1) # [A,S,S,4]

boxes_xyxy = xywh_to_xyxy(boxes_xywh)

obj = obj_logit.sigmoid() # [A,S,S]

cls_prob = cls_logit.sigmoid() # [A,S,S,C]

# combine: per-class confidence = obj * cls_prob

conf = obj.unsqueeze(-1) * cls_prob # [A,S,S,C]

conf = conf.view(-1, C)

boxes = boxes_xyxy.view(-1, 4)

scores, labels = conf.max(dim=1)

keep = scores > conf_thresh

boxes_all.append(boxes[keep])

scores_all.append(scores[keep])

labels_all.append(labels[keep])

if boxes_all:

boxes = torch.cat(boxes_all, dim=0)

scores = torch.cat(scores_all, dim=0)

labels = torch.cat(labels_all, dim=0)

keep = batched_nms_xyxy(boxes, scores, labels, iou_thresh=iou_thresh)

outputs.append((boxes[keep], scores[keep], labels[keep]))

else:

outputs.append((torch.zeros((0,4)), torch.zeros((0,)), torch.zeros((0,), dtype=torch.long)))

return outputs

17) mAP Evaluator (core logic)

A correct mAP implementation is long; here is a clean, minimal evaluator approach:

Collect predictions per image: boxes, scores, labels

Collect GT per image: boxes, labels

For each class:

Sort predictions by score

Mark TP/FP using best IoU match above threshold (and only match a GT once)

Compute precision-recall curve

Compute AP by numeric integration

Average across classes → mAP

If you want COCO-style mAP@[.5:.95], repeat the above at multiple IoU thresholds and average.

If you want, I can paste a full, ready-to-run

metrics.pywith mAP@0.5 and COCO mAP in one file (it’s a few hundred lines). For readability here, I’m keeping the main guide focused on the YOLO pipeline.

18) Training on COCO (practical notes)

To train on COCO effectively:

Use a stronger backbone and bigger batch if possible

Use multi-scale training (randomly change input size per batch)

Use warmup for LR in first ~1–3 epochs

Use EMA weights for evaluation

Watch for:

exploding objectness loss (often target assignment bug)

near-zero positives (anchors/strides mismatch)

boxes drifting outside image (decode bug)

19) Training on a custom dataset (the fastest correct workflow)

Pick model input size: 640 is common for a baseline

Convert annotations to absolute XYXY pixels

Visualize boxes on images before training

Start with:

no fancy aug

small model

overfit on 20 images

If it overfits, scale up:

more data

augmentations

better backbone/neck

20) Common bugs checklist (save hours)

Boxes are in wrong format (XYWH vs XYXY)

Boxes normalized but treated as pixels (or vice versa)

Targets assigned to wrong scale (stride mismatch)

Anchor sizes don’t match input size (anchors for 416 but training at 640)

Swapped x/y indexing (gx vs gy in tensor indexing)

Forgot to clamp grid indices

NMS applied before converting to XYXY

mAP evaluation matching multiple preds to the same GT

Implementing YOLO from scratch in PyTorch is one of the best ways to truly understand modern object detection—because you’re forced to connect every moving part: how labels become training targets, how predictions become real boxes, why anchors exist, and what objectness is actually learning.

By the end of this build, you should have a complete, working detector with:

A multi-scale YOLO-style model (backbone + neck + detection heads)

A correct target assignment pipeline (ground-truth → grid cell + anchor)

A stable loss setup (CIoU/GIoU for boxes + BCE for objectness and classes)

A proper inference path (decode → confidence filtering → NMS)

A clear route to production-grade evaluation (mAP@0.5 and COCO mAP@[.5:.95])

A repo structure you can extend into a real project

The most important takeaway is that YOLO isn’t “magic”—it’s a carefully engineered system of consistent coordinate transforms, responsible anchor matching, and balanced losses. If any of those pieces disagree (wrong box format, wrong stride, mismatched anchors, flipped x/y indices), learning collapses. But when everything lines up, the model trains smoothly and scales well.