Introduction

Higher education is entering one of the most transformative periods in its history. Just as the internet redefined access to knowledge and online learning reshaped classrooms, Generative Artificial Intelligence (Generative AI) is now redefining how knowledge is created, delivered, and consumed.

Unlike traditional AI systems that analyze or classify data, Generative AI can produce new content, including text, code, images, simulations, and even research drafts. Tools powered by large language models are already assisting students with learning, supporting professors in course design, and accelerating academic research workflows.

Universities worldwide are moving beyond experimentation. Generative AI is rapidly becoming essential academic infrastructure, influencing pedagogy, administration, research, and institutional strategy.

This article explores how Generative AI is transforming higher education, its opportunities and risks, and what institutions must do to adapt responsibly.

What Is Generative AI?

Generative AI refers to artificial intelligence systems capable of creating original outputs based on patterns learned from large datasets. These systems rely on advanced machine learning architectures such as:

- Large Language Models (LLMs)
- Diffusion models
- Transformer-based neural networks
- Multimodal AI systems

Examples of generative outputs include:

- Essays and academic explanations
- Programming code
- Research summaries
- Visual diagrams
- Educational simulations
- Interactive tutoring conversations

In higher education, this ability shifts AI from being a passive analytical tool into an active collaborator in learning and research.

Personalized Learning at Scale

One of the most powerful applications of Generative AI is personalized education. Traditional classrooms struggle to adapt to individual learning speeds and styles.
AI-powered systems can now:

- Explain complex concepts in multiple ways
- Adjust difficulty dynamically
- Provide instant feedback
- Generate customized practice exercises
- Support multilingual learning

A student struggling with calculus, for example, can receive step-by-step explanations tailored to their understanding level, something previously impossible at scale.

Benefits for Students

- 24/7 academic assistance
- Reduced learning gaps
- Improved engagement
- Increased confidence in difficult subjects

Generative AI effectively acts as a personal academic tutor available anytime.

The Evolution of Technology in Higher Education

To understand the impact of Generative AI, it helps to view it within the broader evolution of educational technology:

| Era | Technology Impact |
| --- | --- |
| Pre-2000 | Digital libraries and basic computing |
| 2000–2015 | Learning Management Systems (LMS) and online courses |
| 2015–2022 | Data analytics and adaptive learning |
| 2023–Present | Generative AI and intelligent academic assistance |

While previous technologies improved access and efficiency, Generative AI changes something deeper: how knowledge itself is produced and understood.

Empowering Educators, Not Replacing Them

A common misconception is that AI will replace professors. In reality, Generative AI is emerging as a productivity amplifier. Educators can use AI to:

- Draft lecture materials
- Create quizzes and assignments
- Generate case studies
- Design simulations
- Summarize research papers
- Translate learning content

This reduces administrative workload and allows instructors to focus on what matters most:

- Mentorship
- Critical discussion
- Research supervision
- Human-centered teaching

The role of educators is shifting from information delivery toward learning facilitation and intellectual guidance.

Revolutionizing Academic Research

Research is another domain experiencing rapid transformation.
Generative AI accelerates research workflows by helping scholars:

- Conduct literature reviews faster
- Summarize thousands of papers
- Generate hypotheses
- Assist with coding and data analysis
- Draft early manuscript versions

For interdisciplinary research, AI can bridge knowledge gaps across domains, helping researchers explore unfamiliar fields more efficiently.

However, AI-generated research must always be validated by human expertise to maintain academic integrity.

AI-Assisted Writing and Academic Productivity

Writing is central to higher education, and Generative AI has dramatically changed the writing process. Students and researchers now use AI tools for:

- Brainstorming ideas
- Structuring arguments
- Improving clarity and grammar
- Formatting citations
- Editing drafts

When used responsibly, AI becomes a thinking partner, not a shortcut. Universities increasingly encourage transparent AI usage policies rather than outright bans.

Administrative Transformation

Beyond classrooms and research, Generative AI is reshaping university operations. Applications include:

- Automated student support chatbots
- Enrollment assistance
- Academic advising systems
- Curriculum planning analysis
- Predictive student success modeling

Institutions can improve efficiency while providing faster and more personalized student services.

Ethical Challenges and Academic Integrity

Despite its benefits, Generative AI introduces serious challenges.

Key Concerns

- Academic plagiarism
- Overreliance on AI-generated work
- Bias in training data
- Hallucinated information
- Data privacy risks

Universities must rethink assessment methods. Instead of memorization-based exams, institutions are moving toward:

- Project-based learning
- Oral examinations
- Critical reasoning evaluation
- AI-assisted but transparent workflows

The goal is not to eliminate AI usage but to teach responsible AI literacy.
The Rise of AI Literacy as a Core Skill

Just as digital literacy became essential in the early 2000s, AI literacy is becoming a foundational academic skill. Students must learn:

- How AI systems work
- When AI outputs are unreliable
- Ethical usage practices
- Prompt engineering
- Verification and fact-checking

Future graduates will not compete against AI; they will compete against people who know how to use AI effectively.

Challenges Universities Must Overcome

Adopting Generative AI at scale requires addressing institutional barriers:

- Faculty training gaps
- Policy uncertainty
- Infrastructure costs
- Data governance concerns
- Resistance to change

Universities that delay adaptation risk falling behind in global academic competitiveness.

The Future of Higher Education with Generative AI

Looking ahead, several trends are emerging:

- AI-native universities and curricula
- Fully personalized degree pathways
- Intelligent research assistants
- Multimodal learning environments
- AI-driven virtual laboratories

Education may shift from standardized programs toward adaptive lifelong learning ecosystems.

Best Practices for Responsible Adoption

Institutions should consider:

✅ Clear AI usage guidelines
✅ Faculty and student training programs
✅ Transparent disclosure policies
✅ Human oversight in assessment
✅ Ethical AI governance frameworks

Responsible adoption ensures innovation without compromising academic values.

Conclusion

Generative AI is not simply another educational technology trend; it represents a structural transformation in how higher education operates.

By enabling personalized learning, accelerating research, empowering educators, and improving institutional efficiency, Generative AI has the potential to democratize knowledge at an unprecedented scale.

The universities that succeed will not be those that resist AI, but those that integrate it thoughtfully, ethically, and strategically.
Higher education is evolving from static knowledge delivery toward dynamic human-AI collaboration, preparing students for a future where creativity, critical thinking, and technological fluency define success.

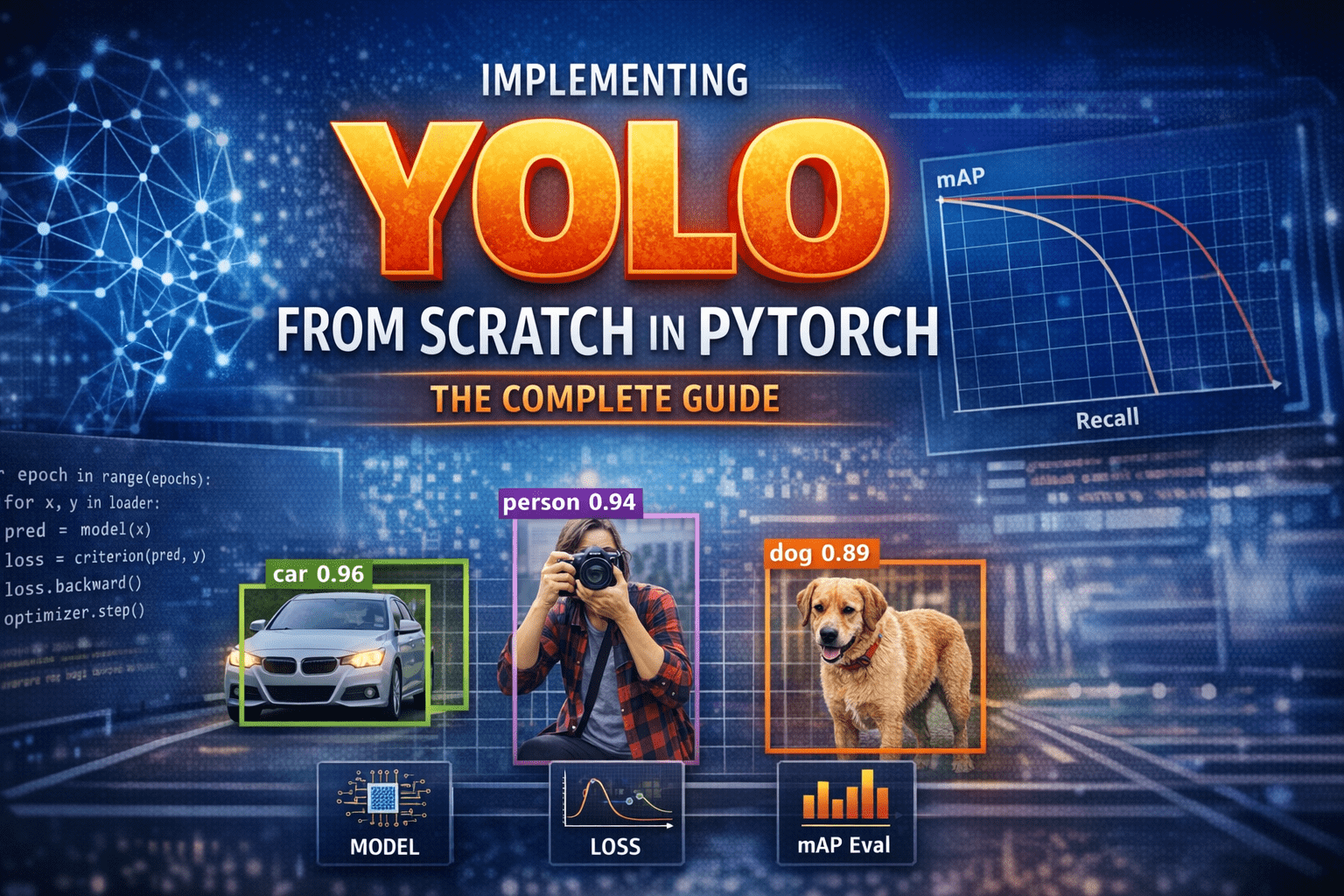

Introduction – Why YOLO Changed Everything

Before YOLO, computers did not “see” the world the way humans do. Object detection systems were careful, slow, and fragmented. They first proposed regions that might contain objects, then classified each region separately. Detection worked, but it felt like solving a puzzle one piece at a time.

In 2015, YOLO (You Only Look Once) introduced a radical idea:

What if we detect everything in one single forward pass?

Instead of multiple stages, YOLO treated detection as a single regression problem from pixels to bounding boxes and class probabilities.

This guide walks through how to implement YOLO completely from scratch in PyTorch, covering:

- Mathematical formulation
- Network architecture
- Target encoding
- Loss implementation
- Training on COCO-style data
- mAP evaluation
- Visualization & debugging
- Inference with NMS
- Anchor-box extension

1) What YOLO means (and what we’ll build)

YOLO (You Only Look Once) is a family of object detection models that predict bounding boxes and class probabilities in one forward pass. Unlike older multi-stage pipelines (proposal → refine → classify), YOLO-style detectors are dense predictors: they predict candidate boxes at many locations and scales, then filter them.

There are two “eras” of YOLO-like detectors:

- YOLOv1-style (grid cells, no anchors): each grid cell predicts a few boxes directly.
- Anchor-based YOLO (YOLOv2/3 and many derivatives): each grid cell predicts offsets relative to pre-defined anchor shapes; multiple scales predict small/medium/large objects.

What we’ll implement

A modern, anchor-based YOLO-style detector with:

- Multi-scale heads (e.g., 3 scales)
- Anchor matching (target assignment)
- Loss with box regression + objectness + classification
- Decoding + NMS
- mAP evaluation
- COCO/custom dataset training support

We’ll keep the architecture understandable rather than exotic. You can later swap in a bigger backbone easily.

2) Bounding box formats and coordinate systems

You must be consistent.
Most training bugs come from box format confusion.

Common box formats:

- XYXY: (x1, y1, x2, y2) top-left & bottom-right
- XYWH: (cx, cy, w, h) center and size
- Normalized: coordinates in [0, 1] relative to image size
- Absolute: pixel coordinates

Recommended internal convention

Store dataset annotations as absolute XYXY in pixels. Convert to normalized only if needed, but keep one standard.

Why XYXY is nice:

- Intersection/union is straightforward.
- Clamping to image bounds is simple.

3) IoU, GIoU, DIoU, CIoU

IoU (Intersection over Union) is the standard overlap metric:

IoU = |A ∩ B| / |A ∪ B|

But IoU has a problem: if boxes don’t overlap, IoU = 0 no matter how far apart they are, so an IoU-based loss gives no useful gradient. Modern detectors often use improved regression losses:

- GIoU: adds penalty for non-overlapping boxes based on smallest enclosing box
- DIoU: penalizes center distance
- CIoU: DIoU + aspect ratio consistency

Practical rule:

- If you want a strong default: CIoU for box regression.
- If you want simpler: GIoU works well too.

We’ll implement IoU + CIoU (with safe numerics).

4) Anchor-based YOLO: grids, anchors, predictions

A YOLO head predicts at each grid location. Suppose a feature map is S x S (e.g., 80×80). Each cell can predict A anchors (e.g., 3). For each anchor, prediction is:

- Box offsets: tx, ty, tw, th
- Objectness logit: to
- Class logits: tc1..tcC

So tensor shape per scale is (B, A*(5+C), S, S), or (B, A, S, S, 5+C) after reshaping.

How offsets become real boxes

A common YOLO-style decode (one of several valid variants):

- bx = (sigmoid(tx) + cx) / S
- by = (sigmoid(ty) + cy) / S
- bw = (anchor_w * exp(tw)) / img_w (or normalized by S)
- bh = (anchor_h * exp(th)) / img_h

Here (cx, cy) is the integer grid coordinate of the cell (not the box center used in the XYWH format above).

Important: Your encode/decode must match your target assignment encoding.
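As a minimal sketch of the decode formulas and the IoU definition above, here is a dependency-free version in plain Python rather than PyTorch tensors. The function names (`decode_anchor`, `xywh_norm_to_xyxy_px`, `iou_xyxy`), the use of `col`/`row` for the grid coordinate, and the example numbers are illustrative assumptions, not part of the guide's final implementation. Anchors are assumed to be given in pixels.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, used to squash raw center offsets into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_anchor(tx, ty, tw, th, col, row, S, anchor_w, anchor_h, img_w, img_h):
    """Decode one anchor's raw outputs into a normalized (bx, by, bw, bh) box.

    (col, row) is the integer grid coordinate, written (cx, cy) in the text.
    anchor_w / anchor_h are assumed to be in pixels (an assumption of this sketch).
    """
    bx = (sigmoid(tx) + col) / S
    by = (sigmoid(ty) + row) / S
    bw = anchor_w * math.exp(tw) / img_w
    bh = anchor_h * math.exp(th) / img_h
    return bx, by, bw, bh


def xywh_norm_to_xyxy_px(bx, by, bw, bh, img_w, img_h):
    """Convert a normalized center-format box to absolute XYXY pixels."""
    x1 = (bx - bw / 2) * img_w
    y1 = (by - bh / 2) * img_h
    x2 = (bx + bw / 2) * img_w
    y2 = (by + bh / 2) * img_h
    return x1, y1, x2, y2


def iou_xyxy(a, b, eps=1e-9):
    """Plain IoU for two XYXY boxes; eps guards against division by zero."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter + eps)


# Hypothetical example: cell (40, 20) on an 80x80 grid, 640x640 image,
# a 64x32 pixel anchor, and all raw offsets equal to zero.
box = decode_anchor(0.0, 0.0, 0.0, 0.0, col=40, row=20, S=80,
                    anchor_w=64.0, anchor_h=32.0, img_w=640, img_h=640)
pixels = xywh_norm_to_xyxy_px(*box, img_w=640, img_h=640)
print(box)
print(pixels)
print(iou_xyxy((0, 0, 10, 10), (5, 5, 15, 15)))
```

With zero offsets the sigmoid places the center in the middle of the cell and `exp(0) = 1` leaves the anchor size unchanged, so the decoded box is roughly the 64×32 anchor centered at pixel (324, 164). A tensorized version would apply the same arithmetic across the whole (B, A, S, S, 5+C) output.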
5) Dataset preparation

Annotation formats

Your custom dataset can be:

- COCO JSON
- Pascal VOC XML
- YOLO txt (class cx cy w h normalized)

We’ll support a generic internal representation. Each sample returns:

- image: Tensor [3, H, W]
- targets: Tensor [N, 6] with columns: [class, x1, y1, x2, y2, image_index(optional)]

Augmentations

For object detection, augmentations must transform boxes too:

- Resize / letterbox
- Random horizontal flip
- Color jitter
- Random affine (optional)
- Mosaic/mixup (advanced; optional)

To keep this guide implementable without fragile geometry, we’ll do:

- resize/letterbox
- random flip
- HSV jitter (optional)

6) Building blocks: Conv-BN-Act, residuals, necks

A clean baseline module: Conv2d -> BatchNorm2d -> SiLU

SiLU (a.k.a. Swish) is common in YOLOv5-like families; LeakyReLU is common in YOLOv3. We can optionally add residual blocks for a stronger backbone, but even a small backbone can work to validate the pipeline.

7) Model design

A typical structure:

- Backbone: extracts feature maps at multiple strides (8, 16, 32)
- Neck: combines features (FPN / PAN)
- Head: predicts detection outputs per scale

We’ll implement a lightweight backbone that produces 3 feature maps and a simple FPN-like neck.

8) Decoding predictions

At inference:

1. Reshape outputs per scale to (B, A, S, S, 5+C)
2. Apply sigmoid to center offsets + objectness (and often class probs)
3. Convert to XYXY in pixel coordinates
4. Flatten all scales into one list of candidate boxes
5. Filter by confidence threshold
6. Apply NMS per class (or class-agnostic NMS)

9) Target assignment (matching GT to anchors)

This is the heart of anchor-based YOLO. For each ground-truth box:

- Determine which scale(s) should handle it (based on size / anchor match).
- For the chosen scale, compute IoU between GT box size and each anchor size (in that scale’s coordinate system).
- Select best anchor (or top-k anchors).
- Compute the grid cell index from the GT center.
- Fill the target tensors at [anchor, gy, gx] with:
  - box regression targets
  - objectness = 1
  - class target

Encoding regression targets

If using the decode bx = (sigmoid(tx) + cx) / S, then the target for tx is sigmoid^-1(bx*S - cx), but that’s messy. Instead, YOLO-style training often directly supervises:

- tx_target = bx*S - cx (a value in [0,1]), trained with BCE on the sigmoid output, or MSE on raw.
- tw_target = log(bw / anchor_w) (in pixels or normalized units)

We’ll implement a stable variant:

- predict pxy = sigmoid(tx,ty) and supervise pxy with BCE/MSE to match fractional offsets
- predict pwh = exp(tw,th)*anchor and supervise with CIoU on decoded boxes (recommended)

That’s simpler: do regression loss on decoded boxes, not on tw/th directly.

10) Loss functions

YOLO-style loss usually has:

- Box loss: CIoU/GIoU between predicted
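Returning to the target-assignment steps in section 9, the following is a rough single-scale sketch of how one ground-truth box could be matched to an anchor and a grid cell. It is plain Python for readability. The helper names (`wh_iou`, `assign_target`), the square-image assumption, pixel-unit anchors, and the example anchor set are all illustrative assumptions rather than the guide's actual code.

```python
def wh_iou(w1, h1, w2, h2):
    """IoU of two boxes compared by size only (as if sharing the same center)."""
    inter = min(w1, w2) * min(h1, h2)
    return inter / (w1 * h1 + w2 * h2 - inter)


def assign_target(gt_xyxy, img_size, S, anchors):
    """Match one GT box (absolute XYXY pixels) to the best anchor and grid cell.

    img_size: side length of a square image in pixels (assumption).
    anchors:  list of (w, h) anchor sizes in pixels for this scale (assumption).
    Returns the anchor index, the integer cell (gx, gy), and the fractional
    offsets (tx, ty) in [0, 1) that a sigmoid output would be trained to match.
    """
    x1, y1, x2, y2 = gt_xyxy
    gw, gh = x2 - x1, y2 - y1
    center_x, center_y = (x1 + x2) / 2, (y1 + y2) / 2

    # Pick the anchor whose shape overlaps the GT shape the most.
    best = max(range(len(anchors)), key=lambda a: wh_iou(gw, gh, *anchors[a]))

    # Position of the GT center in grid units, then split into cell + offset.
    gx_f = center_x * S / img_size
    gy_f = center_y * S / img_size
    gx = min(int(gx_f), S - 1)  # clamp so a center on the right/bottom edge stays in range
    gy = min(int(gy_f), S - 1)

    return {"anchor": best, "gx": gx, "gy": gy, "tx": gx_f - gx, "ty": gy_f - gy}


# Hypothetical example: a 64x32 box centered at (324, 164) in a 640x640 image,
# assigned on an 80x80 grid with three made-up anchors.
target = assign_target((292, 148, 356, 180), img_size=640, S=80,
                       anchors=[(16, 16), (64, 32), (128, 128)])
print(target)
```

In a full implementation the returned indices would be used to write objectness = 1, the class label, and the box target into the target tensor at [anchor, gy, gx]; matching top-k anchors or several scales would extend the same idea.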

Introduction

Before YOLO, computers didn’t see the world the way humans do. They inspected it slowly, cautiously, one object proposal at a time. Object detection worked, but it was fragmented, computationally expensive, and far from real time.

Then, in 2015, a single paper changed everything.

“You Only Look Once: Unified, Real-Time Object Detection” by Joseph Redmon et al. introduced YOLOv1, a model that redefined how machines perceive images. It wasn’t just an incremental improvement, it was a conceptual revolution.

This is the story of how YOLOv1 was born, how it worked, and why its impact still echoes across modern computer vision systems today.

Object Detection Before YOLO: A Fragmented World

Before YOLOv1, object detection research was dominated by complex pipelines stitched together from multiple independent components. Each component worked reasonably well on its own, but the overall system was fragile, slow, and difficult to optimize.

The Classical Detection Pipeline

A typical object detection system before 2015 looked like this:

- Hand-crafted or heuristic-based region proposal
  - Selective Search
  - Edge Boxes
  - Sliding windows (earlier methods)
- Feature extraction
  - CNN features (AlexNet, VGG, etc.)
  - Run separately on each proposed region
- Classification
  - SVMs or softmax classifiers
  - One classifier per region
- Bounding box regression
  - Fine-tuning box coordinates post-classification

Each stage was trained independently, often with different objectives.

Why This Was a Problem

- Redundant computation: The same image features were recomputed hundreds of times.
- No global context: The model never truly “saw” the full image at once.
- Pipeline fragility: Errors in region proposals could never be recovered downstream.
- Poor real-time performance: Even Fast R-CNN struggled to exceed a few FPS.

Object detection worked, but it felt like a workaround, not a clean solution.
The YOLO Philosophy: Detection as a Single Learning Problem

YOLOv1 challenged the dominant assumption that object detection must be a multi-stage problem. Instead, it asked a radical question:

Why not predict everything at once, directly from pixels?

A Conceptual Shift

YOLO reframed object detection as:

A single regression problem from image pixels to bounding boxes and class probabilities.

This meant:

- No region proposals
- No sliding windows
- No separate classifiers
- No post-hoc stitching

Just one neural network, trained end-to-end.

Why This Matters

This shift:

- Simplified the learning objective
- Reduced engineering complexity
- Allowed gradients to flow across the entire detection task
- Enabled true real-time inference

YOLO didn’t just optimize detection, it redefined what detection was.

How YOLOv1 Works: A New Visual Grammar

YOLOv1 introduced a structured way for neural networks to “describe” an image.

Grid-Based Responsibility Assignment

The image is divided into an S × S grid (commonly 7 × 7). Each grid cell:

- Is responsible for objects whose center lies within it
- Predicts bounding boxes and class probabilities

This created a spatial prior that helped the network reason about where objects tend to appear.

Bounding Box Prediction Details

Each grid cell predicts B bounding boxes, where each box consists of:

- x, y → center coordinates (relative to the grid cell)
- w, h → width and height (relative to the image)
- confidence score

The confidence score encodes:

Pr(object) × IoU(predicted box, ground truth)

This was clever, it forced the network to jointly reason about objectness and localization quality.

Class Prediction Strategy

Instead of predicting classes per bounding box, YOLOv1 predicted:

One set of class probabilities per grid cell

This reduced complexity but introduced limitations in crowded scenes, a trade-off YOLOv1 knowingly accepted.
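To make the grid layout described above concrete, here is a small, hedged sketch in plain Python. The values B = 2 and C = 20 are the settings the original paper used for PASCAL VOC and are not stated in this article. The helper names (`output_depth`, `responsible_cell`, `confidence_target`) are invented for illustration.

```python
S = 7   # grid size, as mentioned in the text
B = 2   # boxes per cell (assumption: the paper's PASCAL VOC setting)
C = 20  # number of classes (assumption: PASCAL VOC)


def output_depth(num_boxes: int, num_classes: int) -> int:
    """Values predicted per cell: 5 per box (x, y, w, h, confidence) plus one class vector."""
    return num_boxes * 5 + num_classes


def responsible_cell(cx_norm: float, cy_norm: float, grid: int):
    """Return (row, col, x_in_cell, y_in_cell) for an object center in [0, 1] image coords."""
    col = min(int(cx_norm * grid), grid - 1)  # clamp so a center at exactly 1.0 stays in range
    row = min(int(cy_norm * grid), grid - 1)
    # x, y targets are expressed relative to the responsible cell.
    return row, col, cx_norm * grid - col, cy_norm * grid - row


def confidence_target(has_object: bool, iou: float) -> float:
    """Confidence target Pr(object) x IoU: the IoU if an object is present, otherwise 0."""
    return iou if has_object else 0.0


print((S, S, output_depth(B, C)))       # per-image output tensor shape
print(responsible_cell(0.5, 0.5, S))    # an object centered in the image
print(confidence_target(True, 0.8), confidence_target(False, 0.8))
```

Because the class vector is shared by all B boxes in a cell, the depth grows by only 5 per extra box, which is also why two objects of different classes centered in the same cell cannot both be represented.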
YOLOv1 Architecture: Designed for Global Reasoning

YOLOv1’s network architecture was intentionally designed to capture global image context.

Architecture Breakdown

- 24 convolutional layers
- 2 fully connected layers
- Inspired by GoogLeNet (but simpler)
- Pretrained on ImageNet classification

The final fully connected layers allowed YOLO to:

- Combine spatially distant features
- Understand object relationships
- Avoid false positives caused by local texture patterns

Why Global Context Matters

Traditional detectors often mistook:

- Shadows for objects
- Textures for meaningful regions

YOLO’s global reasoning reduced these errors by understanding the scene as a whole.

The YOLOv1 Loss Function: Balancing Competing Objectives

Training YOLOv1 required solving a delicate optimization problem.

Multi-Part Loss Components

YOLOv1’s loss function combined:

- Localization loss
  - Errors in x, y, w, h
  - Heavily weighted to prioritize accurate boxes
- Confidence loss
  - Penalized incorrect objectness predictions
- Classification loss
  - Penalized wrong class predictions

Smart Design Choices

- Higher weight for bounding box regression
- Lower weight for background confidence
- Square root applied to width and height to stabilize gradients

These design choices directly influenced how future detection losses were built.

Speed vs Accuracy: A Conscious Design Trade-Off

YOLOv1 was explicit about its priorities.

YOLO’s Position

Slightly worse localization is acceptable if it enables real-time vision.

Performance Impact

- YOLOv1 ran an order of magnitude faster than competing detectors
- Enabled deployment on:
  - Live camera feeds
  - Robotics systems
  - Embedded devices (with Fast YOLO)

This trade-off reshaped how researchers evaluated detection systems, not just by accuracy, but by usability.

Where YOLOv1 Fell Short, and Why That’s Important

YOLOv1’s limitations weren’t accidental; they revealed deep insights.
Small Objects

- Grid resolution limited detection granularity
- Small objects often disappeared within grid cells

Crowded Scenes

- One object class prediction per cell
- Overlapping objects confused the model

Localization Precision

- Coarse bounding box predictions
- Lower IoU scores than region-based methods

Each weakness became a research question that drove YOLOv2, YOLOv3, and beyond.

Why YOLOv1 Changed Computer Vision Forever

YOLOv1 didn’t just introduce a model, it introduced a mindset.

End-to-End Learning as a Principle

Detection systems became:

- Unified
- Differentiable
- Easier to deploy and optimize

Real-Time as a First-Class Metric

After YOLO:

- Speed was no longer optional
- Real-time inference became an expectation

A Blueprint for Future Detectors

Modern architectures, CNN-based and transformer-based alike, inherit YOLO’s core ideas:

- Dense prediction
- Single-pass inference
- Deployment-aware design

Final Reflection: The Day Detection Became Vision

YOLOv1 marked the moment when object detection stopped being a patchwork of tricks and became a coherent vision system. It taught the field that:

- Seeing fast unlocks new realities
- Simplicity scales
- End-to-end learning changes how machines understand the world

YOLO didn’t just look once. It made computer vision see differently forever.

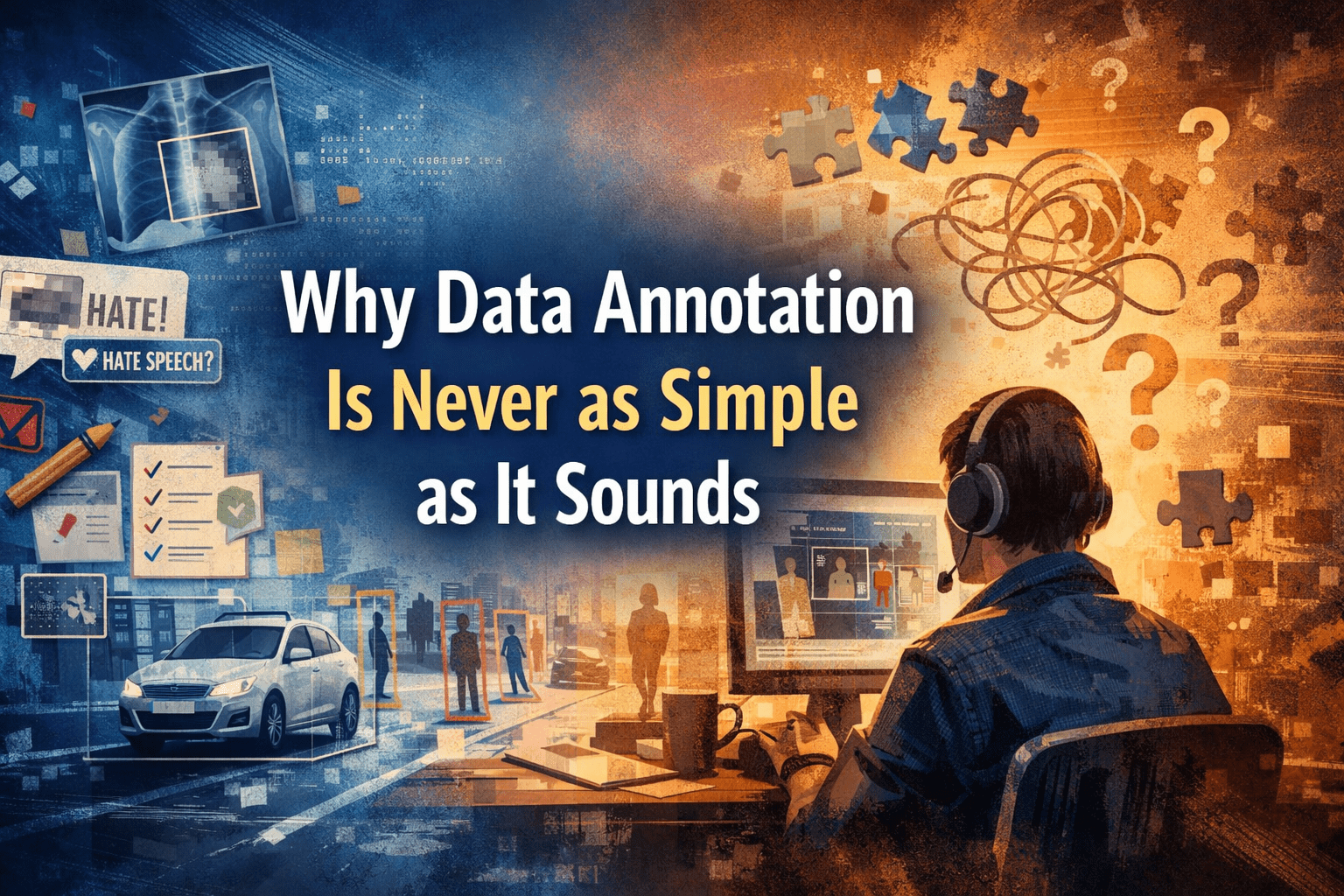

Introduction

Data annotation is often described as the “easy part” of artificial intelligence. Draw a box, label an image, tag a sentence, done. In reality, data annotation is one of the most underestimated, labor-intensive, and intellectually demanding stages of any AI system. Many modern AI failures can be traced not to weak models, but to weak or inconsistent annotation.

This article explores why data annotation is far more complex than it appears, what makes it so critical, and how real-world experience exposes its hidden challenges.

1. Annotation Is Not Mechanical Work

At first glance, annotation looks like repetitive manual labor. In practice, every annotation is a decision. Even simple tasks raise difficult questions:

- Where exactly does an object begin and end?
- Is this object partially occluded or fully visible?
- Does this text express sarcasm or literal meaning?
- Is this medical structure normal or pathological?

These decisions require context, judgment, and often domain knowledge. Two annotators can look at the same data and produce different “correct” answers, both defensible and both problematic for model training.

2. Ambiguity Is the Default, Not the Exception

Real-world data is messy by nature. Images are blurry, audio is noisy, language is vague, and human behavior rarely fits clean categories. Annotation guidelines attempt to reduce ambiguity, but they can never eliminate it.

Edge cases appear constantly:

- Is a pedestrian behind glass still a pedestrian?
- Does a cracked bone count as fractured or intact?
- Is a social media post hate speech or quoted hate speech?

Every edge case forces annotators to interpret intent, context, and consequences, something no checkbox can fully capture.

3. Quality Depends on Consistency, Not Just Accuracy

A single correct annotation is not enough. Models learn patterns across millions of examples, which means consistency matters more than individual brilliance.
Problems arise when:

- Guidelines are interpreted differently across teams
- Multiple vendors annotate the same dataset
- Annotation rules evolve mid-project
- Cultural or linguistic differences affect judgment

Inconsistent annotation introduces noise that models quietly absorb, leading to unpredictable behavior in production. The model does not know which annotator was “right”. It only knows patterns.

5. Scale Introduces New Problems

As annotation projects grow, complexity compounds:

- Thousands of annotators
- Millions of samples
- Tight deadlines
- Continuous dataset updates

Maintaining quality at scale requires audits, consensus scoring, gold standards, retraining, and constant feedback loops. Without this infrastructure, annotation quality degrades silently while costs continue to rise.

6. The Human Cost Is Often Ignored

Annotation is cognitively demanding and, in some cases, emotionally exhausting. Content moderation, medical data, accident footage, or sensitive text can take a real psychological toll.

Yet annotation work is frequently undervalued, underpaid, and invisible. This leads to high turnover, rushed decisions, and reduced quality, directly impacting AI performance.

7. A Real Experience from the Field

“At the beginning, I thought annotation was just drawing boxes,” says Ahmed, a data annotator who worked on a medical imaging project for over two years.
“After the first week, I realized every image was an argument. Radiologists disagreed with each other. Guidelines changed. What was ‘correct’ on Monday was ‘wrong’ by Friday.”

He explains that the hardest part was not speed, but confidence.

“You’re constantly asking yourself: am I helping the model learn the right thing, or am I baking in confusion? When mistakes show up months later in model evaluation, you don’t even know which annotation caused it.”

For Ahmed, annotation stopped being a task and became a responsibility.

“Once you understand that models trust your labels blindly, you stop calling it simple work.”

8. Why This Matters More Than Ever

As AI systems move into healthcare, transportation, education, and governance, annotation quality becomes a foundation issue. Bigger models cannot compensate for unclear or biased labels. More data does not fix inconsistent data.

The industry’s focus on model size and architecture often distracts from a basic truth: AI systems are only as good as the data they are taught to trust.

Conclusion

Data annotation is not a preliminary step. It is core infrastructure. It demands judgment, consistency, domain expertise, and human care. Calling it “simple” minimizes the complexity of real-world data and the people who shape it.

The next time an AI system fails in an unexpected way, the answer may not be in the model at all, but in the labels it learned from.

Introduction

When people hear “AI-powered driving,” many instinctively think of Large Language Models (LLMs). After all, LLMs can write essays, generate code, and argue philosophy at 2 a.m. But putting a car safely through a busy intersection is a very different problem.

Waymo, the autonomous driving company owned by Google’s parent Alphabet, operates far beyond the scope of LLMs. Its vehicles rely on a deeply integrated robotics and AI stack, combining sensors, real-time perception, probabilistic reasoning, and control systems that must work flawlessly in the physical world, where mistakes are measured in metal, not tokens.

In short: Waymo doesn’t talk its way through traffic. It computes its way through it.

The Big Picture: The Waymo Autonomous Driving Stack

Waymo’s system can be understood as a layered pipeline:

1. Sensing the world
2. Perceiving and understanding the environment
3. Predicting what will happen next
4. Planning safe and legal actions
5. Controlling the vehicle in real time

Each layer is specialized, deterministic where needed, probabilistic where required, and engineered for safety, not conversation.

1. Sensors: Seeing More Than Humans Can

Waymo vehicles are packed with redundant, high-resolution sensors. This is the foundation of everything.

Key Sensor Types

- LiDAR: Creates a precise 3D map of the environment using laser pulses. Essential for depth and shape understanding.
- Cameras: Capture color, texture, traffic lights, signs, and human gestures.
- Radar: Robust against rain, fog, and dust; excellent for detecting object velocity.
- Audio & IMU sensors: Support motion tracking and system awareness.

Unlike humans, Waymo vehicles see 360 degrees, day and night, without blinking or getting distracted by billboards.

2. Perception: Turning Raw Data Into Reality

Sensors alone are just noisy streams of data. Perception is where AI earns its keep.
What Perception Does

- Detects objects: cars, pedestrians, cyclists, animals, cones
- Classifies them: vehicle type, posture, motion intent
- Tracks them over time in 3D space
- Understands road geometry: lanes, curbs, intersections

This layer relies heavily on computer vision, sensor fusion, and deep neural networks, trained on millions of real-world and simulated scenarios.

Importantly, this is not text-based reasoning. It is spatial, geometric, and continuous, things LLMs are fundamentally bad at.

3. Prediction: Anticipating the Future (Politely)

Driving isn’t about reacting; it’s about predicting. Waymo’s prediction systems estimate:

- Where nearby agents are likely to move
- Multiple possible futures, each with probabilities
- Human behaviors like hesitation, aggression, or compliance

For example, a pedestrian near a crosswalk isn’t just a “person.” They’re a set of possible trajectories with likelihoods attached.

This probabilistic modeling is critical, and again, very different from next-word prediction in LLMs.

4. Planning: Making Safe, Legal, and Social Decisions

Once the system understands the present and predicts the future, it must decide what to do.

Planning Constraints

- Traffic laws
- Safety margins
- Passenger comfort
- Road rules and local norms

The planner evaluates thousands of possible maneuvers (lane changes, stops, turns) and selects the safest viable path. This process involves optimization algorithms, rule-based logic, and learned models, not free-form language generation.

There is no room for “creative interpretation” when a red light is involved.

5. Control: Executing With Precision

Finally, the control system translates plans into:

- Steering angles
- Acceleration and braking
- Real-time corrections

These controls operate at high frequency (milliseconds), reacting instantly to changes. This is classical robotics and control theory territory, domains where determinism beats eloquence every time.
Where LLMs Fit (and Where They Don’t)

LLMs are powerful, but Waymo’s core driving system does not depend on them.

LLMs May Help With:

- Human–machine interaction
- Customer support
- Natural language explanations
- Internal tooling and documentation

LLMs Are Not Used For:

- Real-time driving decisions
- Safety-critical control
- Sensor fusion or perception
- Vehicle motion planning

Why? Because LLMs are:

- Non-deterministic
- Hard to formally verify
- Prone to confident errors (a.k.a. hallucinations)

A car that hallucinates is not a feature.

The Bigger Picture: Democratizing Medical AI

Healthcare inequality is not just about access to doctors, it is about access to knowledge. Open medical AI models:

- Lower barriers for low-resource regions
- Enable local innovation
- Reduce dependence on external vendors

If used responsibly, MedGemma could help ensure that medical AI benefits are not limited to the few who can afford them.

Simulation: Where Waymo Really Scales

One of Waymo’s biggest advantages is simulation.

- Billions of miles driven virtually
- Rare edge cases replayed thousands of times
- Synthetic scenarios that would be unsafe to test in reality

Simulation allows Waymo to validate improvements before deployment and measure safety statistically, something no human-only driving system can do.

Safety and Redundancy: The Unsexy Superpower

Waymo’s system is designed with:

- Hardware redundancy
- Software fail-safes
- Conservative decision policies
- Continuous monitoring

If something is uncertain, the car slows down or stops. No bravado. No ego. Just math.

Conclusion: Beyond Language, Into Reality

Waymo works because it treats autonomous driving as a robotics and systems engineering problem, not a conversational one. While LLMs dominate headlines, Waymo quietly solves one of the hardest real-world AI challenges: safely navigating unpredictable human environments at scale.

In other words, LLMs may explain traffic laws beautifully, but Waymo actually follows them.
And on the road, that matters more than sounding smart.

Introduction

Artificial intelligence has been circling healthcare for years (diagnosing images, summarizing clinical notes, predicting risks), yet much of its real power has remained locked behind proprietary walls. Google’s MedGemma changes that equation. By releasing open medical AI models built specifically for healthcare contexts, Google is signaling a shift from “AI as a black box” to AI as shared infrastructure for medicine. This is not just another model release. MedGemma represents a structural change in how healthcare AI can be developed, validated, and deployed.

The Problem With Healthcare AI So Far

Healthcare AI has faced three persistent challenges:

- Opacity: Many high-performing medical models are closed. Clinicians cannot inspect them, regulators cannot fully audit them, and researchers cannot adapt them.
- General Models, Specialized Risks: Large general-purpose language models are not designed for clinical nuance. Small mistakes in medicine are not “edge cases”; they are liabilities.
- Inequitable Access: Advanced medical AI often ends up concentrated in large hospitals, well-funded startups, or high-income countries.

The result is a paradox: AI shows promise in healthcare, but trust, scalability, and equity remain unresolved.

What Is MedGemma?

MedGemma is a family of open-weight medical AI models released by Google, built on the Gemma architecture but adapted specifically for healthcare and biomedical use cases. Key characteristics include:

- Medical-domain tuning (clinical language, biomedical concepts)
- Open weights, enabling inspection, fine-tuning, and on-prem deployment
- Designed for responsible use, with explicit positioning as decision support, not clinical authority

In simple terms: MedGemma is not trying to replace doctors. It is trying to become a reliable, transparent assistant that developers and institutions can actually trust.

Why “Open” Matters More in Medicine Than Anywhere Else

In most consumer applications, closed models are an inconvenience.
In healthcare, they are a risk.

Transparency and Auditability

Open models allow:

- Independent evaluation of bias and failure modes
- Regulatory scrutiny
- Reproducible research

This aligns far better with medical ethics than “trust us, it works.”

Customization for Real Clinical Settings

Hospitals differ. So do patient populations. Open models can be fine-tuned for:

- Local languages
- Regional disease prevalence
- Institutional workflows

Closed APIs cannot realistically offer this depth of adaptation.

Data Privacy and Sovereignty

With MedGemma, organizations can:

- Run models on-premises
- Keep patient data inside institutional boundaries
- Comply with strict data protection regulations

For healthcare systems, this is not optional; it is mandatory.

Potential Use Cases That Actually Make Sense

MedGemma is not a silver bullet, but it enables realistic, high-impact applications:

1. Clinical Documentation Support

- Drafting summaries from structured notes
- Translating between clinical and patient-friendly language
- Reducing physician burnout (quietly, which is how doctors prefer it)

2. Medical Education and Training

- Interactive case simulations
- Question-answering grounded in medical terminology
- Localized medical training tools in under-resourced regions

3. Research Acceleration

- Literature review assistance
- Hypothesis exploration
- Data annotation support for medical datasets

4. Decision Support (Not Decision Making)

- Flagging potential issues
- Surfacing relevant guidelines
- Assisting, not replacing, clinical judgment

The distinction matters. MedGemma is positioned as a copilot, not an autopilot.

Safety, Responsibility, and the Limits of AI

Google has been explicit about one thing: MedGemma is not a diagnostic authority. This is important for two reasons:

- Legal and Ethical Reality: Medicine requires accountability. AI cannot be held accountable; people can.
- Trust Through Constraint: Models that openly acknowledge their limits are more trustworthy than those that pretend omniscience.
MedGemma’s real value lies in supporting human expertise, not competing with it.

How MedGemma Could Shift the Healthcare AI Landscape

From Products to Platforms

Instead of buying opaque AI tools, hospitals can build their own systems on top of open foundations.

From Vendor Lock-In to Ecosystems

Researchers, startups, and institutions can collaborate on improvements rather than duplicating effort behind closed doors.

From “AI Hype” to Clinical Reality

Open evaluation encourages realistic benchmarking, failure analysis, and incremental improvement, which is exactly how medicine advances.

The Bigger Picture: Democratizing Medical AI

Healthcare inequality is not just about access to doctors; it is about access to knowledge. Open medical AI models:

- Lower barriers for low-resource regions
- Enable local innovation
- Reduce dependence on external vendors

If used responsibly, MedGemma could help ensure that medical AI benefits are not limited to the few who can afford them.

Final Thoughts

Google’s MedGemma is not revolutionary because it is powerful. It is revolutionary because it is open, medical-first, and constrained by responsibility. In a field where trust matters more than raw capability, that may be exactly what healthcare AI needs. The real transformation will not come from AI replacing clinicians, but from clinicians finally having AI they can understand, adapt, and trust.

Introduction

For years, real-time object detection has followed the same rigid blueprint: define a closed set of classes, collect massive labeled datasets, train a detector, bolt on a segmenter, then attach a tracker for video. This pipeline worked, but it was fragile, expensive, and fundamentally limited. Any change in environment, object type, or task often meant starting over.

Meta’s Segment Anything Model 3 (SAM 3) breaks this cycle entirely. As described in the Coding Nexus analysis, SAM 3 is not just an improvement in accuracy or speed; it is a structural rethinking of how object detection, segmentation, and tracking should work in modern computer vision systems. SAM 3 replaces class-based detection with concept-based understanding, enabling real-time segmentation and tracking using simple natural-language prompts. This shift has deep implications across robotics, AR/VR, video analytics, dataset creation, and interactive AI systems.

1. The Core Problem With Traditional Object Detection

Before understanding why SAM 3 matters, it’s important to understand what was broken.

1.1 Rigid Class Definitions

Classic detectors (YOLO, Faster R-CNN, SSD) operate on a fixed label set. If an object category is missing, or even slightly redefined, the model fails. “Dog” might work, but “small wet dog lying on the floor” does not.

1.2 Fragmented Pipelines

A typical real-time vision system involves:

- A detector for bounding boxes
- A segmenter for pixel masks
- A tracker for temporal consistency

Each component has its own failure modes, configuration overhead, and performance tradeoffs.

1.3 Data Dependency

Every new task requires new annotations. Collecting and labeling data often costs more than training the model itself.

SAM 3 directly targets all three issues.

2. SAM 3’s Conceptual Breakthrough: From Classes to Concepts

The most important innovation in SAM 3 is the move from class-based detection to concept-based segmentation.
Instead of asking: “Is there a car in this image?” SAM 3 answers: “Show me everything that matches this concept.”

That concept can be expressed as:

- a short text phrase
- a descriptive noun group
- or a visual example

This approach is called Promptable Concept Segmentation (PCS).

Why This Matters

- Concepts are open-ended
- No retraining is required
- The same model works across images and videos
- Semantic understanding replaces rigid taxonomy

This fundamentally changes how humans interact with vision systems.

3. Unified Detection, Segmentation, and Tracking

SAM 3 eliminates the traditional multi-stage pipeline.

What SAM 3 Does in One Pass

- Detects all instances of a concept
- Produces pixel-accurate masks
- Assigns persistent identities across video frames

Unlike earlier SAM versions, which segmented one object per prompt, SAM 3 returns all matching instances simultaneously, each with its own identity for tracking. This makes real-time video understanding far more robust, especially in crowded or dynamic scenes.

4. How SAM 3 Works (High-Level Architecture)

While the Medium article avoids low-level math, it highlights several key architectural ideas:

4.1 Language–Vision Alignment

Text prompts are embedded into the same representational space as visual features, allowing semantic matching between words and pixels.

4.2 Presence-Aware Detection

SAM 3 doesn’t just segment; it first determines whether a concept exists in the scene, reducing false positives and improving precision.

4.3 Temporal Memory

For video, SAM 3 maintains internal memory so objects remain consistent even when:

- partially occluded
- temporarily out of frame
- changing shape or scale

This is why SAM 3 can replace standalone trackers.

5. Real-Time Performance Implications

A key insight from the article is that real-time no longer means simplified models.
SAM 3 demonstrates that:

- High-quality segmentation
- Open-vocabulary understanding
- Multi-object tracking

can coexist in a single real-time system, provided the architecture is unified rather than modular. This redefines expectations for what “real-time” vision systems can deliver.

6. Impact on Dataset Creation and Annotation

One of the most immediate consequences of SAM 3 is its effect on data pipelines.

Traditional Annotation

- Manual labeling
- Long turnaround times
- High cost per image or frame

With SAM 3

- Prompt-based segmentation generates masks instantly
- Humans shift from labeling to verification
- Dataset creation scales dramatically faster

This is especially relevant for industries like autonomous driving, medical imaging, and robotics, where labeled data is a bottleneck.

7. New Possibilities in Video and Interactive Media

SAM 3 enables entirely new interaction patterns:

- Text-driven video editing
- Semantic search inside video streams
- Live AR effects based on descriptions, not predefined objects

For example: “Highlight all moving objects except people.” Such instructions were impractical with classical detectors but become natural with SAM 3’s concept-based approach.

8. Comparison With Previous SAM Versions

Feature | SAM / SAM 2 | SAM 3
Object count per prompt | One | All matching instances
Video tracking | Limited / external | Native
Vocabulary | Implicit | Open-ended
Pipeline complexity | Moderate | Unified
Real-time use | Experimental | Practical

SAM 3 is not a refinement; it is a generational shift.

9. Current Limitations

Despite its power, SAM 3 is not a silver bullet:

- Compute requirements are still significant
- Complex reasoning (multi-step instructions) requires external agents
- Edge deployment remains challenging without distillation

However, these are engineering constraints, not conceptual ones.

10. Why SAM 3 Represents a Structural Shift in Computer Vision

SAM 3 changes the role of object detection in AI systems:

- From rigid perception → flexible understanding
- From labels → language
- From pipelines → unified models

As emphasized in the Coding Nexus article, this shift is comparable to the jump from keyword search to semantic search in NLP.

Final Thoughts

Meta’s SAM 3 doesn’t just improve object detection; it redefines how humans specify visual intent. By making language the interface and concepts the unit of understanding, SAM 3 pushes computer vision closer to how people naturally perceive the world. In the long run, SAM 3 is less about segmentation masks and more about a future where vision systems understand what we mean, not just what we label.
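As a rough illustration of the language–vision alignment and presence-aware detection ideas from sections 4.1 and 4.2, the toy sketch below scores per-pixel feature vectors against a concept embedding with cosine similarity, and returns no mask at all when nothing matches strongly. It is not SAM 3's implementation: the function names, thresholds, and hand-written 2-D "embeddings" are hypothetical, and a real system would obtain both from learned encoders.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def concept_mask(concept_vec, feature_map, pixel_threshold=0.8, presence_threshold=0.9):
    """Return a binary mask for the concept, or None if the concept looks absent.

    feature_map is a 2D grid (list of rows) of per-pixel feature vectors that are
    assumed to live in the same embedding space as concept_vec.
    """
    scores = [[cosine(concept_vec, feat) for feat in row] for row in feature_map]
    # Presence check: if no pixel matches strongly, report "not in scene".
    if max(max(row) for row in scores) < presence_threshold:
        return None
    return [[1 if s >= pixel_threshold else 0 for s in row] for row in scores]


# Toy 2x3 "image" with hand-written 2-D features (hypothetical values).
features = [
    [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.1], [0.1, 1.0]],
]
taxi = [1.0, 0.0]      # stand-in for an embedded text prompt
absent = [-1.0, -1.0]  # a concept that matches nothing in this toy scene

mask = concept_mask(taxi, features)       # every strongly matching pixel is kept
missing = concept_mask(absent, features)  # presence check fails, so no mask
```

Note that the mask covers every matching pixel rather than a single clicked object, which mirrors the "all matching instances" behavior described above; separating those pixels into distinct instances and tracking them over time is beyond this sketch.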

Introduction Artificial intelligence has entered a stage of maturity where it is no longer a futuristic experiment but an operational driver for modern life. In 2026, AI tools are powering businesses, automating creative work, enriching education, strengthening research accuracy, and transforming how individuals plan, communicate, and make decisions. What once required large technical teams or specialized expertise can now be completed by AI systems that think, generate, optimize, and execute tasks autonomously. The AI landscape of 2026 is shaped by intelligent copilots embedded into everyday applications, autonomous agents capable of running full business workflows, advanced media generation platforms, and enterprise-grade decision engines supported by structured data systems. These tools are not only faster and more capable—they are deeply integrated into professional workflows, securely aligned with governance requirements, and tailored to deliver actionable outcomes rather than raw output. This guide highlights the most impactful AI tools shaping 2026, explaining what they do best, who they are designed for, and why they matter today. Whether the goal is productivity, innovation, or operational scale, these platforms represent the leading edge of AI adoption. Best AI Productivity & Copilot Tools These redefine personal work, rewriting how people research, write, plan, manage, and analyze. OpenAI WorkSuite Best for: Document creation, research workflows, email automation The 2026 version integrates persistent memory, team-level agent execution, and secure document interpretation. It has become the default writing, planning, and corporate editing environment. Standout abilities Auto-structured research briefs Multi-document analysis Workflow templates Real-time voice collaboration Microsoft Copilot 365 Best for: Large organizations using Microsoft ecosystems Copilot now interprets full organizational knowledge—not just files in a local account. 
Capabilities Predictive planning inside Teams Structured financial and KPI summaries from Excel Real-time slide generation in PowerPoint Automated meeting reasoning Google Gemini Office Cloud Best for: Multi-lingual teams and Google Workspace heavy users Gemini generates full workflow outcomes: docs, emails, user flows, dashboards. Notable improvements Ethical scoring for content Multi-input document reasoning Search indexing-powered organization Best AI Tools for Content Creation & Media Production 2026 media creation is defined by near-photorealistic video generation, contextual storytelling, and brand-aware asset production. Runway Genesis Studio Best for: Video production without studio equipment 2026 models produce: Real human movements Dynamic lighting consistency Scene continuity across frames Used by advertising agencies and indie creators. OpenAI Video Model Best for: Script-to-film workflows Generates: Camera angles Narrative scene segmentation Actor continuity Advanced version supports actor preservation licensing, reducing rights conflicts. Midjourney Pro Studio Best for: Brand-grade imagery Strength points: Perfect typography Predictable style anchors Adaptive visual identity Corporate teams use it for product demos, packaging, and motion banners. Autonomous AI Agents & Workflow Automation Tools These tools actually “run work,” not just assist it. Devin AI Developer Agent Best for: End-to-end engineering sequences Devin executes tasks: UI building Server configuration Functional QA Deployment Tracking dashboard shows each sequence executed. Anthropic Enterprise Agents Best for: Compliance-centric industries The model obeys governance rules, reference logs, and audit policies. 
Typical client fields:

- Healthcare
- Banking
- Insurance
- Public sector

Zapier AI Orchestrator

Best for: Multi-app business automation

Since the 2026 update:

- Agents can run continuously
- Actions can fork into real-time branches

Example: Lead arrival → qualification → outreach → CRM update → dashboard entry.

Best AI Tools for Data & Knowledge Optimization

Organizations now rely on AI for scalable structured data operations.

Snowflake Cortex Intelligence

Best for: Enterprise-scale knowledge curation

Using Cortex, companies:

- Extract business entities
- Remove anomalies
- Enforce compliance visibility

Fully governed environments are now standard.

Databricks Lakehouse AI

Best for: Machine-learning-ready structured data streams

Tools deliver:

- Feature indexing
- Long-window time-series analytics
- Batch inference pipelines

Useful for manufacturing, energy, and logistics sectors.

Best AI Tools for Software Development & Engineering

AI generates functional software, tests it, and scales deployment.

GitHub Copilot Enterprise X

Best for: Managed code reasoning

Features:

- Test auto-generation
- Code architecture recommendation
- Runtime debugging insights

Teams see a 20–45% reduction in engineering-cycle time.

Pydantic AI

Best for: Safe model-integration development

Clean workflow for:

- API scaffolding
- Schema validation
- Deterministic inference alignment

Preferred for regulated AI integrations.

Best AI Platforms for Education & Learning Industries

Adaptive learning replaces static courseware.

Khanmigo Learning Agent

Best for: K-12 and early undergraduate programs

System personalizes:

- Study pacing
- Assessment style
- Skill reinforcement

Parent or teacher dashboards show cognitive progression over time.
Coursera Skill-Agent Pathways

Best for: Skill-linked credential programs

Learners can:

- Build portfolios automatically
- Benchmark progress
- Convert learning steps into résumé output

Emerging AI Tools of 2026 Worth Watching

SynthLogic Legal Agent

Performs:

- Contract comparison
- Clause extraction
- Policy traceability

Used for M&A analysis.

Atlas Human-Behavior Simulation Engine

Simulates decision patterns for:

- Marketing
- Security analysis
- UX flow optimization

How AI Tools in 2026 Are Changing Work

The key shift is not intelligence but agency. In 2026:

- Tools remember context
- Tasks persist autonomously
- Systems coordinate with other systems
- AI forms organizational memory
- Results are validated against policies

Work becomes outcome-driven rather than effort-driven.

Final Perspective

The best AI tools in 2026 share three traits:

- They act autonomously.
- They support customized workflows.
- They integrate securely into enterprise knowledge systems.

The most strategic decision for individuals and enterprises is matching roles with the right AI frameworks: content creators need generative suites, analysts need structured reasoning copilots, and engineers benefit from persistent development agents.

Introduction Enterprise-grade data crawling and scraping has transformed from a niche technical capability into a core infrastructure layer for modern AI systems, competitive intelligence workflows, large-scale analytics, and foundation-model training pipelines. In 2025, organizations no longer ask whether they need large-scale data extraction, but how to build a resilient, compliant, and scalable pipeline that spans millions of URLs, dynamic JavaScript-heavy sites, rate limits, CAPTCHAs, and ever-growing data governance regulations. This landscape has become highly competitive. Providers must now deliver far more than basic scraping, they must offer web-scale coverage, anti-blocking infrastructure, automation, structured data pipelines, compliance-by-design, and increasingly, AI-native extraction that supports multimodal and LLM-driven workloads. The following list highlights the Top 10 Enterprise Web-Scale Data Crawling & Scraping Providers in 2025, selected based on scalability, reliability, anti-detection capability, compliance posture, and enterprise readiness. The Top 10 Companies SO Development – The AI-First Web-Scale Data Infrastructure Platform SO Development leads the 2025 landscape with a web-scale data crawling ecosystem designed explicitly for AI training, multimodal data extraction, competitive intelligence, and automated data pipelines across 40+ industries. Leveraging a hybrid of distributed crawlers, high-resilience proxy networks, and LLM-driven extraction engines, SO Development delivers fully structured, clean datasets without requiring clients to build scraping infrastructure from scratch. 
Highlights Global-scale crawling (public, deep, dynamic JS, mobile) AI-powered parsing of text, tables, images, PDFs, and complex layouts Full compliance pipeline: GDPR/HIPAA/CCPA-ready data workflows Parallel crawling architecture optimized for enterprise throughput Integrated dataset pipelines for AI model training and fine-tuning Specialized vertical solutions (medical, financial, e-commerce, legal, automotive) Why They’re #1 SO Development stands out by merging traditional scraping infrastructure with next-gen AI data processing, enabling enterprises to transform raw web content into ready-to-train datasets at unprecedented speed and quality. Bright Data – The Proxy & Scraping Cloud Powerhouse Bright Data remains one of the most mature players, offering a massive proxy network, automated scraping templates, and advanced browser automation tools. Their distributed network ensures scalability even for high-volume tasks. Strengths Large residential and mobile proxy network No-code scraping studio for rapid workflows Browser automation and CAPTCHA handling Strong enterprise SLAs Zyte – Clean, Structured, Developer-Friendly Crawling Formerly Scrapinghub, Zyte continues to excel in high-quality structured extraction at scale. Their “Smart Proxy” and “Automatic Extraction” tools streamline dynamic crawling for complex websites. Strengths Automatic schema detection Quality-cleaning pipeline Cloud-based Spider service ML-powered content normalization Oxylabs – High-Volume Proxy & Web Intelligence Provider Oxylabs specializes in large-scale crawling powered by AI-based proxy management. They target industries requiring high extraction throughput—finance, travel, cybersecurity, and competitive markets. Strengths Large residential & datacenter proxy pools AI-powered unlocker for difficult sites Web Intelligence service High success rates for dynamic websites Apify – Automation Platform for Custom Web Robots Apify turns scraping tasks into reusable web automation actors. 
Enterprise teams rely on their marketplace and SDK to build robust custom crawlers and API-like data endpoints. Strengths Pre-built marketplace crawlers SDK for reusable automation Strong developer tools Batch pipeline capabilities Diffbot – AI-Powered Web Extraction & Knowledge Graph Diffbot is unique for its AI-based autonomous agents that parse the web into structured knowledge. Instead of scripts, it relies on computer vision and ML to understand page content. Strengths Automated page classification Visual parsing engine Massive commercial Knowledge Graph Ideal for research, analytics, and LLM training SerpApi – High-Precision Google & E-Commerce SERP Scraping Focused on search engines and marketplace data, SerpApi delivers API endpoints that return fully structured SERP results with consistent reliability. Strengths Google, Bing, Baidu, and major SERP coverage Built-in CAPTCHA bypass Millisecond-level response speeds Scalable API usage tiers Webz.io – Enterprise Web-Data-as-a-Service Webz.io provides continuous streams of structured public web data. Their feeds are widely used in cybersecurity, threat detection, academic research, and compliance. Strengths News, blogs, forums, and dark web crawlers Sentiment and topic classification Real-time monitoring High consistency across global regions Smartproxy – Cost-Effective Proxy & Automation Platform Smartproxy is known for affordability without compromising reliability. They excel in scalable proxy infrastructure and SaaS tools for lightweight enterprise crawling. Strengths Residential, datacenter, and mobile proxies Simple scraping APIs Budget-friendly for mid-size enterprises High reliability for basic to mid-complexity tasks ScraperAPI – Simple, High-Success Web Request API ScraperAPI focuses on a simplified developer experience: send URLs, receive parsed pages. The platform manages IP rotation, retries, and browser rendering automatically. 
Strengths

- Automatic JS rendering
- Built-in CAPTCHA defeat
- Flexible pricing for small teams and startups
- High success rates across various endpoints

Comparison Table for All 10 Providers

Rank | Provider | Strengths | Best For | Key Capabilities
1 | SO Development | AI-native pipelines, enterprise-grade scaling, compliance infrastructure | AI training, multimodal datasets, regulated industries | Distributed crawlers, LLM extraction, PDF/HTML/image parsing, GDPR/HIPAA workflows
2 | Bright Data | Largest proxy network, strong unlocker | High-volume scraping, anti-blocking | Residential/mobile proxies, API, browser automation
3 | Zyte | Clean structured data, quality filters | Dynamic sites, e-commerce, data consistency | Automatic extraction, smart proxy, schema detection
4 | Oxylabs | High-complexity crawling, AI proxy engine | Finance, travel, cybersecurity | Unlocker tech, web intelligence platform
5 | Apify | Custom automation actors | Repeated workflows, custom scripts | Marketplace, actor SDK, robotic automation
6 | Diffbot | Knowledge Graph + AI extraction | Research, analytics, knowledge systems | Visual AI parsing, automated classification
7 | SerpApi | Fast SERP and marketplace scraping | SEO, research, e-commerce analysis | Google/Bing APIs, CAPTCHA bypass
8 | Webz.io | Continuous public data streams | Security intelligence, risk monitoring | News/blog/forum feeds, dark web crawling
9 | Smartproxy | Affordable, reliable | Budget enterprise crawling | Simple APIs, proxy rotation
10 | ScraperAPI | Simple “URL in → data out” model | Startups, easy integration | JS rendering, auto-rotation, retry logic

How to Choose the Right Web-Scale Data Provider in 2025

Selecting the right provider depends on your specific use case. Here is a quick framework:

For AI model training and multimodal datasets

Choose: SO Development, Diffbot, Webz.io

These offer structured, compliant data pipelines at scale.
For high-volume crawling with anti-blocking resilience Choose: Bright Data, Oxylabs, Zyte For automation-first scraping workflows Choose: Apify, ScraperAPI For specialized SERP and marketplace data Choose: SerpApi For cost-efficiency and ease of use Choose: Smartproxy, ScraperAPI The Future of Enterprise Web Data Extraction (2025–2030) Over the next five years, enterprise web-scale data extraction will

Introduction In computer vision, segmentation used to feel like the “manual labor” of AI: click here, draw a box there, correct that mask, repeat a few thousand times, try not to cry. Meta’s original Segment Anything Model (SAM) turned that grind into a point-and-click magic trick: tap a few pixels, get a clean object mask. SAM 2 pushed further to videos, bringing real-time promptable segmentation to moving scenes. Now SAM 3 arrives as the next major step: not just segmenting things you click, but segmenting concepts you describe. Instead of manually hinting at each object, you can say “all yellow taxis” or “players wearing red jerseys” and let the model find, segment, and track every matching instance in images and videos. This blog goes inside SAM 3—what it is, how it differs from its predecessors, what “Promptable Concept Segmentation” really means, and how it changes the way we think about visual foundation models. 1. From SAM to SAM 3: A short timeline Before diving into SAM 3, it helps to step back and see how we got here. SAM (v1): Click-to-segment The original SAM introduced a powerful idea: a large, generalist segmentation model that could segment “anything” given visual prompts—points, boxes, or rough masks. It was trained on a massive, diverse dataset and showed strong zero-shot segmentation performance across many domains. SAM 2: Images and videos, in real time SAM 2 extended the concept to video, treating an image as just a one-frame video and adding a streaming memory mechanism to support real-time segmentation over long sequences. Key improvements in SAM 2: Unified model for images and videos Streaming memory for efficient video processing Model-in-the-loop data engine to build a huge SA-V video segmentation dataset But SAM 2 still followed the same interaction pattern: you specify a particular location (point/box/mask) and get one object instance back at a time. 
SAM 3: From “this object” to “this concept” SAM 3 changes the game by introducing Promptable Concept Segmentation (PCS)—instead of saying “segment the thing under this click,” you can say “segment every dog in this video” and get: All instances of that concept Segmentation masks for each instance Consistent identities for each instance across frames (tracking) In other words, SAM 3 is no longer just a segmentation tool—it’s a unified, open-vocabulary detection, segmentation, and tracking model for images and videos. 2. What exactly is SAM 3? At its core, SAM 3 is a unified foundation model for promptable segmentation in images and videos that operates on concept prompts. Core capabilities According to Meta’s release and technical overview, SAM 3 can: Detect and segment objects Given a text or visual prompt, SAM 3 finds all matching object instances in an image or video and returns instance masks. Track objects over time For video, SAM 3 maintains stable identities, so the same object can be followed across frames. Work with multiple prompt types Text: “yellow school bus”, “person wearing a backpack” Image exemplars: example boxes/masks of an object Visual prompts: points, boxes, masks (SAM 2-style) Combined prompts: e.g., “red car” + one exemplar, for even sharper control Support open-vocabulary segmentation It doesn’t rely on a closed set of pre-defined classes. Instead, it uses language prompts and exemplars to generalize to new concepts. Scale to large image/video collections SAM 3 is explicitly designed to handle the “find everything like X” problem across large datasets, not just a single frame. Compared to SAM 2, SAM 3 formalizes PCS and adds language-driven concept understanding while preserving (and improving) the interactive segmentation capabilities of earlier versions. 3. Promptable Concept Segmentation (PCS): The big idea “Promptable Concept Segmentation” is the central new task that SAM 3 tackles. 
You provide a concept prompt, and the model returns masks + IDs for all objects matching that concept. Concept prompts can be: Text prompts Simple noun phrases like “red apple”, “striped cat”, “football player in blue”, “car in the left lane”. Image exemplars Positive/negative example boxes around objects you care about. Combined prompts Text + exemplars, e.g., “delivery truck” plus one example bounding box to steer the model. This is fundamentally different from classic SAM-style visual prompts: Feature SAM / SAM 2 SAM 3 (PCS) Prompt type Visual (points/boxes/masks) Text, exemplars, visual, or combinations Output per prompt One instance per interaction All instances of the concept Task scope Local, instance-level Global, concept-level across frame(s) Vocabulary Implicit, not language-driven Open-vocabulary via text + exemplars This means you can do things like: “Find every motorcycle in this 10-minute traffic video.” “Segment all people wearing helmets in a construction site dataset.” “Count all green apples versus red apples in a warehouse scan.” All without manually clicking each object. The dream of “query-like segmentation at scale” is much closer to reality. 4. Under the hood: How SAM 3 works (conceptually) Meta has published an overview and open-sourced the reference implementation via GitHub and model hubs such as Hugging Face. While the exact implementation details are in the official paper and code, the high-level ingredients look roughly like this: Vision backbone A powerful image/video encoder transforms each frame into a rich spatiotemporal feature representation. Concept encoder (language + exemplars) Text prompts are encoded using a language model or text encoder. Visual exemplars (e.g., boxes/masks around an example object) are encoded as visual features. The system fuses these into a concept embedding that represents “what you’re asking for”. 
Prompt–vision fusion The concept embedding interacts with the visual features (e.g., via attention) to highlight regions that correspond to the requested concept. Instance segmentation head From the fused feature map, the model produces: Binary/soft masks Instance IDs Optional detection boxes or scores Temporal component for tracking For video, SAM 3 uses mechanisms inspired by SAM 2’s streaming memory to maintain consistent identities for objects across frames, enabling efficient concept tracking over time. You can think of SAM 3 as “SAM 2 + a powerful vision-language concept engine,” wrapped into a single unified model. 5. SAM 3 vs SAM 2 and traditional detectors How does SAM 3 actually compare