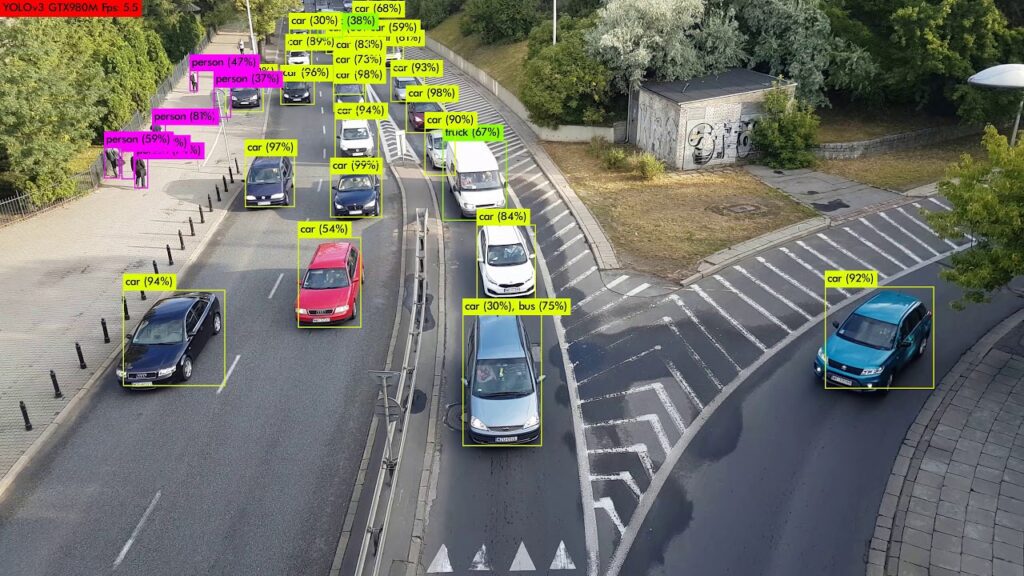

Object detection has witnessed groundbreaking advancements over the past decade, with the YOLO (You Only Look Once) series consistently setting new benchmarks in real-time performance and accuracy. With the release of YOLOv11 and YOLOv12, we see the integration of novel architectural innovations aimed at improving efficiency, precision, and scalability.

This in-depth comparison explores the key differences between YOLOv11 and YOLOv12, analyzing their technical advancements, performance metrics, and applications across industries.

Evolution of the YOLO Series

Since its inception in 2016, the YOLO series has evolved from a simple yet effective object detection framework to a highly sophisticated model that balances speed and accuracy. Over the years, each iteration has introduced enhancements in feature extraction, backbone architectures, attention mechanisms, and optimization techniques.

- YOLOv1 to YOLOv5 focused on refining CNN-based architectures and improving detection efficiency.

- YOLOv6 to YOLOv9 integrated advanced training techniques and lightweight structures for better deployment flexibility.

- YOLOv10 introduced transformer-based models and eliminated the need for Non-Maximum Suppression (NMS), further optimizing real-time detection.

- YOLOv11 and YOLOv12 build upon these improvements, integrating novel methodologies to push the boundaries of efficiency and precision.

YOLOv11: Key Features and Advancements

YOLOv11, released in late 2024, introduced several fundamental enhancements aimed at optimizing both detection speed and accuracy:

1. Transformer-Based Backbone

One of the most notable improvements in YOLOv11 is the shift from a purely CNN-based architecture to a transformer-based backbone. This enhances the model’s capability to understand global spatial relationships, improving object detection for complex and overlapping objects.

2. Dynamic Head Design

YOLOv11 incorporates a dynamic detection head, which adjusts processing power based on image complexity. This results in more efficient computational resource allocation and higher accuracy in challenging detection scenarios.

3. NMS-Free Training

By eliminating Non-Maximum Suppression (NMS) during training, YOLOv11 improves inference speed while maintaining detection precision.

4. Dual Label Assignment

To enhance detection for densely packed objects, YOLOv11 employs a dual label assignment strategy, utilizing both one-to-one and one-to-many label assignment techniques.

5. Partial Self-Attention (PSA)

YOLOv11 selectively applies attention mechanisms to specific regions of the feature map, improving its global representation capabilities without increasing computational overhead.

Performance Benchmarks

- Mean Average Precision (mAP):5%

- Inference Speed:60 FPS

- Parameter Count:~40 million

YOLOv12: The Next Evolution in Object Detection

YOLOv12, launched in early 2025, builds upon the innovations of YOLOv11 while introducing additional optimizations aimed at increasing efficiency.

1. Area Attention Module (A2)

This module optimizes the use of attention mechanisms by dividing the feature map into specific areas, allowing for a large receptive field while maintaining computational efficiency.

2. Residual Efficient Layer Aggregation Networks (R-ELAN)

R-ELAN enhances training stability by incorporating block-level residual connections, improving both convergence speed and model performance.

3. FlashAttention Integration

YOLOv12 introduces FlashAttention, an optimized memory management technique that reduces access bottlenecks, enhancing the model’s inference efficiency.

4. Architectural Refinements

Several structural refinements have been made, including:

- Removing positional encoding

- Adjusting the Multi-Layer Perceptron (MLP) ratio

- Reducing block depth

- Increasing the use of convolution operations for enhanced computational efficiency

Performance Benchmarks

- Mean Average Precision (mAP):6%

- Inference Latency:64 ms (on T4 GPU)

- Efficiency:Outperforms YOLOv10-N and YOLOv11-N in speed-to-accuracy ratio

YOLOv11 vs. YOLOv12: A Direct Comparison

Feature | YOLOv11 | YOLOv12 |

Backbone | Transformer-based | Optimized hybrid with Area Attention |

Detection Head | Dynamic adaptation | FlashAttention-enhanced processing |

Training Method | NMS-free training | Efficient label assignment techniques |

Optimization Techniques | Partial Self-Attention | R-ELAN with memory optimization |

mAP | 61.5% | 40.6% |

Inference Speed | 60 FPS | 1.64 ms latency (T4 GPU) |

Computational Efficiency | High | Higher |

Applications Across Industries

Both YOLOv11 and YOLOv12 serve a wide range of real-world applications, enabling advancements in various fields:

1. Autonomous Vehicles

Improved real-time object detection enhances safety and navigation in self-driving cars, allowing for better lane detection, pedestrian recognition, and obstacle avoidance.

2. Healthcare and Medical Imaging

The ability to detect anomalies with high precision accelerates medical diagnosis and treatment planning, especially in radiology and pathology.

3. Retail and Inventory Management

Automated product tracking and inventory monitoring reduce operational costs and improve stock management efficiency.

4. Surveillance and Security

Advanced threat detection capabilities make these models ideal for intelligent video surveillance and crowd monitoring.

5. Robotics and Industrial Automation

Enhanced perception capabilities empower robots to perform complex tasks with greater autonomy and precision.

Future Directions in YOLO Development

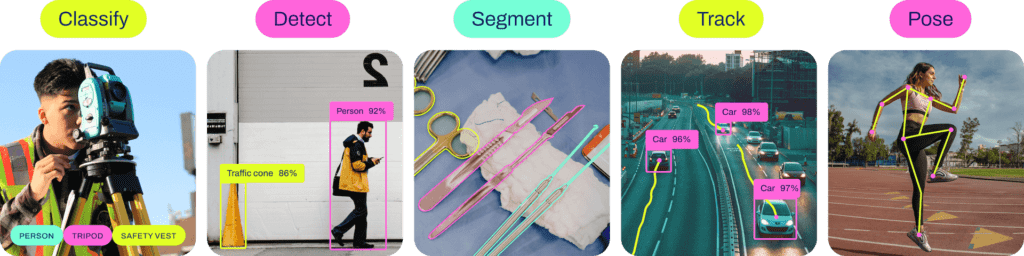

As object detection continues to evolve, several promising research areas could shape the next iterations of YOLO:

- Enhanced Hardware Optimization:Adapting models for edge devices and mobile deployment.

- Expanded Task Applications:Adapting YOLO for applications beyond object detection, such as pose estimation and instance segmentation.

- Advanced Training Methodologies:Integrating self-supervised and semi-supervised learning techniques to improve generalization and reduce data dependency.

Conclusion

Both YOLOv11 and YOLOv12 represent significant milestones in the evolution of real-time object detection. While YOLOv11 excels in accuracy with its transformer-based backbone, YOLOv12 pushes the boundaries of computational efficiency through innovative attention mechanisms and optimized processing techniques.

The choice between these models ultimately depends on the specific application requirements—whether prioritizing accuracy (YOLOv11) or speed and efficiency (YOLOv12). As research continues, the future of YOLO promises even more groundbreaking advancements in deep learning and computer vision.