Introduction

For years, real-time object detection has followed the same rigid blueprint: define a closed set of classes, collect massive labeled datasets, train a detector, bolt on a segmenter, then attach a tracker for video. This pipeline worked—but it was fragile, expensive, and fundamentally limited. Any change in environment, object type, or task often meant starting over.

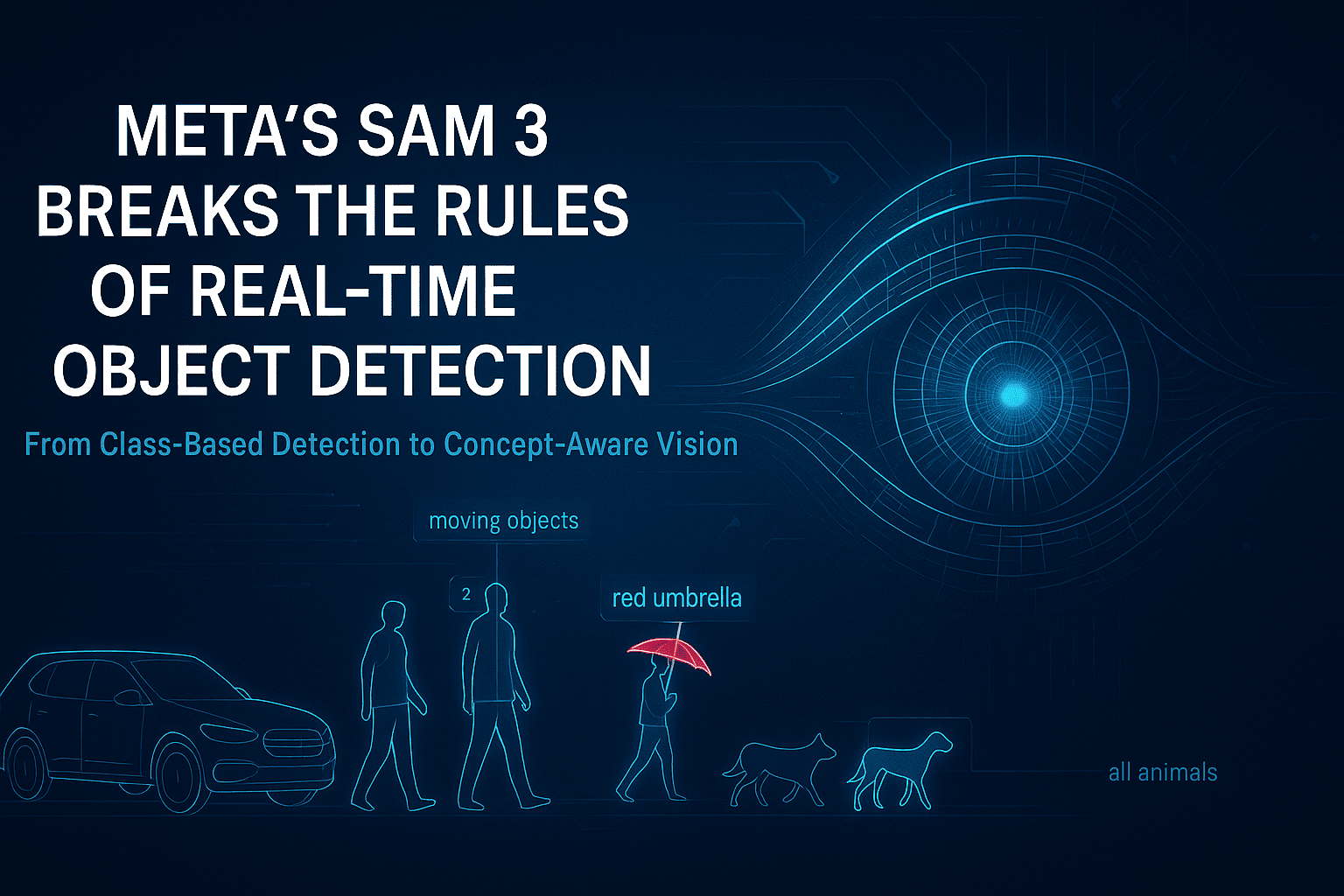

Meta’s Segment Anything Model 3 (SAM 3) breaks this cycle entirely. As described in the Coding Nexus analysis, SAM 3 is not just an improvement in accuracy or speed; it is a structural rethinking of how object detection, segmentation, and tracking should work in modern computer vision systems.

SAM 3 replaces class-based detection with concept-based understanding, enabling real-time segmentation and tracking using simple natural-language prompts. This shift has deep implications across robotics, AR/VR, video analytics, dataset creation, and interactive AI systems.

1. The Core Problem With Traditional Object Detection

Before understanding why SAM 3 matters, it’s important to understand what was broken.

1.1 Rigid Class Definitions

Classic detectors (YOLO, Faster R-CNN, SSD) operate on a fixed label set. If a category is missing from that set, or is defined differently from how it was labeled during training, the model cannot find it without new annotations and retraining. “Dog” might work, but “small wet dog lying on the floor” does not.

1.2 Fragmented Pipelines

A typical real-time vision system involves:

A detector for bounding boxes

A segmenter for pixel masks

A tracker for temporal consistency

Each component has its own failure modes, configuration overhead, and performance tradeoffs.

1.3 Data Dependency

Every new task requires new annotations. Collecting and labeling data often costs more than training the model itself.

SAM 3 directly targets all three issues.

2. SAM 3’s Conceptual Breakthrough: From Classes to Concepts

The most important innovation in SAM 3 is the move from class-based detection to concept-based segmentation.

Instead of asking:

“Is there a car in this image?”

SAM 3 answers:

“Show me everything that matches this concept.”

That concept can be expressed as:

a short text phrase

a descriptive noun group

or a visual example

This approach is called Promptable Concept Segmentation (PCS).
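To make the PCS contract concrete, here is a minimal sketch of the inputs and outputs it implies, written as Python dataclasses. The class and field names (`ConceptPrompt`, `Instance`, `ConceptResult`, `exemplars`) are hypothetical and are not the official SAM 3 API. What matters is the shape: one concept in (text, exemplar boxes, or both), and a list of every matching instance out.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# (x0, y0, x1, y1) in pixel coordinates
Box = Tuple[float, float, float, float]


@dataclass
class ConceptPrompt:
    """A concept given as a short noun phrase, visual exemplars, or both (hypothetical type)."""
    text: Optional[str] = None                          # e.g. "striped cat"
    exemplars: List[Box] = field(default_factory=list)  # boxes drawn around example instances

    def __post_init__(self) -> None:
        if not self.text and not self.exemplars:
            raise ValueError("a concept needs a text phrase or at least one exemplar box")


@dataclass
class Instance:
    object_id: int          # identity that stays fixed across video frames
    box: Box
    score: float            # how confidently this region matches the concept
    mask: List[List[bool]]  # binary mask, one row per image row


@dataclass
class ConceptResult:
    prompt: ConceptPrompt
    instances: List[Instance]  # every match in the image; empty if the concept is absent
```

The return type is the key difference from earlier promptable segmenters: callers iterate over a list of instances rather than receiving a single mask.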

Why This Matters

Concepts are open-ended

No retraining is required

The same model works across images and videos

Semantic understanding replaces rigid taxonomy

This fundamentally changes how humans interact with vision systems.

3. Unified Detection, Segmentation, and Tracking

SAM 3 eliminates the traditional multi-stage pipeline.

What SAM 3 Does in One Pass

Detects all instances of a concept

Produces pixel-accurate masks

Assigns persistent identities across video frames

Unlike earlier SAM versions, which segmented one object per prompt, SAM 3 returns all matching instances simultaneously, each with its own identity for tracking.

This makes real-time video understanding far more robust, especially in crowded or dynamic scenes.
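Because every instance carries its own identity, per-frame outputs can be regrouped into object tracks without a separate association step. A minimal sketch, assuming the video output has already been converted to one `{object_id: box}` dictionary per frame (a hypothetical format; boxes stand in for masks to keep the example short):

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]


def frames_to_tracks(per_frame: List[Dict[int, Box]]) -> Dict[int, List[Tuple[int, Box]]]:
    """Regroup per-frame {object_id: box} outputs into {object_id: [(frame_index, box), ...]}."""
    tracks: Dict[int, List[Tuple[int, Box]]] = defaultdict(list)
    for frame_index, instances in enumerate(per_frame):
        for object_id, box in instances.items():
            # The persistent ID is the only key needed; no matching across frames happens here.
            tracks[object_id].append((frame_index, box))
    return dict(tracks)
```

If object 2 appears in frames 0 and 2 but not in frame 1, its track simply records frames 0 and 2; the stable ID is what lets the gap be bridged.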

4. How SAM 3 Works (High-Level Architecture)

While the Medium article avoids low-level math, it highlights several key architectural ideas:

4.1 Language–Vision Alignment

Text prompts are embedded into the same representational space as visual features, allowing semantic matching between words and pixels.
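The idea of a shared embedding space can be illustrated with cosine similarity: if a phrase's embedding and per-pixel visual features live in the same space, scoring each pixel against the phrase yields a relevance map that can be thresholded into a mask. This is a deliberately simplified sketch; the toy vectors, the threshold `tau`, and the use of plain cosine similarity are assumptions for illustration, not SAM 3's actual matching mechanism.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def relevance_map(text_emb: Vector, pixel_feats: List[List[Vector]]) -> List[List[float]]:
    """Score every pixel's feature vector against the text embedding."""
    return [[cosine(text_emb, f) for f in row] for row in pixel_feats]


def to_mask(scores: List[List[float]], tau: float = 0.5) -> List[List[bool]]:
    """Keep pixels whose similarity to the phrase reaches the threshold tau."""
    return [[s >= tau for s in row] for row in scores]


# Toy 2x2 "image" with 2-D features; the text embedding points along the first axis.
text_emb = [1.0, 0.0]
pixels = [[[1.0, 0.0], [0.0, 1.0]],
          [[0.9, 0.1], [-1.0, 0.0]]]
print(to_mask(relevance_map(text_emb, pixels)))  # [[True, False], [True, False]]
```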

4.2 Presence-Aware Detection

SAM 3 doesn’t just segment—it first determines whether a concept exists in the scene, reducing false positives and improving precision.
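One way to express presence-aware scoring is to gate every candidate's local match score with a single image-level probability that the concept is present at all. The sketch below multiplies the two; treat that combination rule, the function name `gated_detections`, and the 0.5 threshold as assumptions for illustration.

```python
from typing import List, Tuple


def gated_detections(presence_prob: float,
                     query_scores: List[float],
                     threshold: float = 0.5) -> List[Tuple[int, float]]:
    """Return (candidate_index, final_score) for candidates that survive presence gating."""
    if not 0.0 <= presence_prob <= 1.0:
        raise ValueError("presence_prob must be in [0, 1]")
    kept = []
    for index, local_score in enumerate(query_scores):
        # A low image-level presence probability scales down every local match at once.
        final_score = presence_prob * local_score
        if final_score >= threshold:
            kept.append((index, final_score))
    return kept
```

With `presence_prob=0.2`, even a candidate whose local score is 0.9 ends up at 0.18 and is suppressed, which is how a global "is it here at all?" check cuts false positives.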

4.3 Temporal Memory

For video, SAM 3 maintains internal memory so objects remain consistent even when:

partially occluded

temporarily out of frame

changing shape or scale

This is why SAM 3 can replace standalone trackers.
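The behavior described above, where an identity survives occlusion or a brief exit from the frame, can be illustrated with a classic overlap-based tracker that remembers each object for a few frames after it disappears. This is a stand-in, not SAM 3's mechanism: the article describes internal memory, whereas this sketch stores only the last box per ID and matches greedily by intersection-over-union. `TrackMemory`, `iou_threshold`, and `max_missed` are hypothetical names.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class TrackMemory:
    """Keeps each identity alive for up to max_missed frames so short gaps don't reset IDs."""

    def __init__(self, iou_threshold: float = 0.3, max_missed: int = 5):
        self.iou_threshold = iou_threshold
        self.max_missed = max_missed
        self.tracks: Dict[int, Tuple[Box, int]] = {}  # id -> (last box, frames since last seen)
        self.next_id = 1

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        assigned: Dict[int, Box] = {}
        free = set(self.tracks)  # remembered IDs not yet matched in this frame
        for det in detections:
            # Greedily match to the best-overlapping remembered track.
            best_id: Optional[int] = None
            best_iou = self.iou_threshold
            for tid in free:
                score = iou(self.tracks[tid][0], det)
                if score >= best_iou:
                    best_id, best_iou = tid, score
            if best_id is None:
                best_id = self.next_id  # nothing in memory fits: start a new identity
                self.next_id += 1
            else:
                free.discard(best_id)
            assigned[best_id] = det
        # Age unmatched tracks and forget them only after max_missed consecutive misses.
        for tid in free:
            box, missed = self.tracks[tid]
            if missed + 1 > self.max_missed:
                del self.tracks[tid]
            else:
                self.tracks[tid] = (box, missed + 1)
        for tid, det in assigned.items():
            self.tracks[tid] = (det, 0)
        return assigned
```

With `max_missed=2`, an object that vanishes for two frames and reappears nearby gets its old ID back; after a longer absence it is treated as a new object.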

5. Real-Time Performance Implications

A key insight from the article is that real-time no longer means simplified models.

SAM 3 demonstrates that:

High-quality segmentation

Open-vocabulary understanding

Multi-object tracking

can coexist in a single real-time system, provided the architecture is unified rather than modular.

This redefines expectations for what “real-time” vision systems can deliver.
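“Real-time” is ultimately a per-frame budget: at a target frame rate the budget is 1000 / fps milliseconds, and when stages run one after another, every stage's latency counts against it. The latencies below are hypothetical placeholders chosen only to show the arithmetic, not measurements of any model.

```python
from typing import Iterable


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at the target frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_budget(stage_latencies_ms: Iterable[float], fps: float) -> bool:
    """Assuming sequential stages, the system keeps pace only if their sum fits in one frame."""
    return sum(stage_latencies_ms) <= frame_budget_ms(fps)


# Hypothetical numbers, for illustration only.
modular = {"detector": 12.0, "segmenter": 15.0, "tracker": 8.0}
unified = {"single_model": 25.0}

print(round(frame_budget_ms(30), 1))          # 33.3
print(fits_budget(modular.values(), fps=30))  # False: 35.0 ms > 33.3 ms
print(fits_budget(unified.values(), fps=30))  # True
```

Running stages in parallel on different frames can raise throughput, but each frame's end-to-end latency in a sequential pipeline is still the sum of its stages.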

6. Impact on Dataset Creation and Annotation

One of the most immediate consequences of SAM 3 is its effect on data pipelines.

Traditional Annotation

Manual labeling

Long turnaround times

High cost per image or frame

With SAM 3

Prompt-based segmentation generates masks instantly

Humans shift from labeling to verification

Dataset creation scales dramatically faster

This is especially relevant for industries like autonomous driving, medical imaging, and robotics, where labeled data is a bottleneck.
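The shift from labeling to verification can be sketched as a triage step: model-proposed masks with high confidence go straight into the dataset, mid-confidence ones go to a human reviewer, and low-confidence ones are discarded. The `ProposedMask` type and both thresholds are hypothetical; in practice they would be tuned per project.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ProposedMask:
    image_id: str
    concept: str   # the prompt that produced this mask
    score: float   # model confidence in [0, 1]


def triage(proposals: List[ProposedMask],
           accept_at: float = 0.9,
           reject_below: float = 0.3) -> Tuple[List[ProposedMask], List[ProposedMask]]:
    """Split proposals into (auto_accepted, needs_review); low scorers are dropped."""
    if reject_below > accept_at:
        raise ValueError("reject_below must not exceed accept_at")
    accepted, review = [], []
    for p in proposals:
        if p.score >= accept_at:
            accepted.append(p)
        elif p.score >= reject_below:
            review.append(p)  # a human verifies or corrects this mask
        # Anything below reject_below is discarded without spending human time.
    return accepted, review
```

For domains where every label must be checked, such as medical imaging, setting `accept_at` above 1.0 routes every surviving proposal to review.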

7. New Possibilities in Video and Interactive Media

SAM 3 enables entirely new interaction patterns:

Text-driven video editing

Semantic search inside video streams

Live AR effects based on descriptions, not predefined objects

For example:

“Highlight all moving objects except people.”

Such instructions were impractical with classical detectors but become natural with SAM 3’s concept-based approach.
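An instruction like this is not a single noun phrase, and this article notes under its current limitations that multi-step instructions still require an external agent. One way such an agent could compose it: run one concept query for candidate objects and one for “person”, call an object moving if its center shifts by at least a few pixels across its track, and drop moving objects that overlap a person. The input format (`tracks` as per-ID boxes from a broad concept query, `people` as boxes from a “person” query on the latest frame), the `min_shift` and `overlap` thresholds, and the helper names are all assumptions for illustration.

```python
import math
from typing import Dict, List, Set, Tuple

Box = Tuple[float, float, float, float]


def center(b: Box) -> Tuple[float, float]:
    return ((b[0] + b[2]) / 2, (b[1] + b[3]) / 2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def moving_except_people(tracks: Dict[int, List[Box]],
                         people: List[Box],
                         min_shift: float = 5.0,
                         overlap: float = 0.5) -> Set[int]:
    """IDs whose center moved at least min_shift pixels and whose latest box is not a person."""
    result = set()
    for obj_id, boxes in tracks.items():
        if len(boxes) < 2:
            continue  # motion is undefined from a single observation
        (x0, y0), (x1, y1) = center(boxes[0]), center(boxes[-1])
        if math.hypot(x1 - x0, y1 - y0) < min_shift:
            continue  # effectively static
        if any(iou(boxes[-1], p) >= overlap for p in people):
            continue  # this instance is largely covered by a "person" detection
        result.add(obj_id)
    return result
```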

8. Comparison With Previous SAM Versions

| Feature | SAM / SAM 2 | SAM 3 |
|---|---|---|
| Object count per prompt | One | All matching instances |
| Video tracking | None (SAM) / prompted objects only (SAM 2) | Native, for every matching instance |
| Vocabulary | No text prompts (points, boxes, masks) | Open-ended text and exemplar prompts |
| Pipeline complexity | Moderate | Unified |
| Real-time use | Experimental | Practical |

SAM 3 is not a refinement—it is a generational shift.

9. Current Limitations

Despite its power, SAM 3 is not a silver bullet:

Compute requirements are still significant

Complex reasoning (multi-step instructions) requires external agents

Edge deployment remains challenging without distillation

However, these are engineering constraints, not conceptual ones.

10. Why SAM 3 Represents a Structural Shift in Computer Vision

SAM 3 changes the role of object detection in AI systems:

From rigid perception → flexible understanding

From labels → language

From pipelines → unified models

As emphasized in the Coding Nexus article, this shift is comparable to the jump from keyword search to semantic search in NLP.

Final Thoughts

Meta’s SAM 3 doesn’t just improve object detection—it redefines how humans specify visual intent. By making language the interface and concepts the unit of understanding, SAM 3 pushes computer vision closer to how people naturally perceive the world.

In the long run, SAM 3 is less about segmentation masks and more about a future where vision systems understand what we mean, not just what we label.
