Introduction

Artificial intelligence has been circling healthcare for years (interpreting medical images, summarizing clinical notes, predicting risks), yet much of its real power has remained locked behind proprietary walls. Google’s MedGemma changes that equation. By releasing open medical AI models built specifically for healthcare contexts, Google is signaling a shift from “AI as a black box” to AI as shared infrastructure for medicine.

This is not just another model release. MedGemma represents a structural change in how healthcare AI can be developed, validated, and deployed.

The Problem With Healthcare AI So Far

Healthcare AI has faced three persistent challenges:

Opacity

Many high-performing medical models are closed. Clinicians cannot inspect them, regulators cannot fully audit them, and researchers cannot adapt them.

General Models, Specialized Risks

Large general-purpose language models are not designed for clinical nuance. Small mistakes in medicine are not “edge cases”; they are liabilities.

Inequitable Access

Advanced medical AI often ends up concentrated in large hospitals, well-funded startups, or high-income countries.

The result is a paradox: AI shows promise in healthcare, but trust, scalability, and equity remain unresolved.

What Is MedGemma?

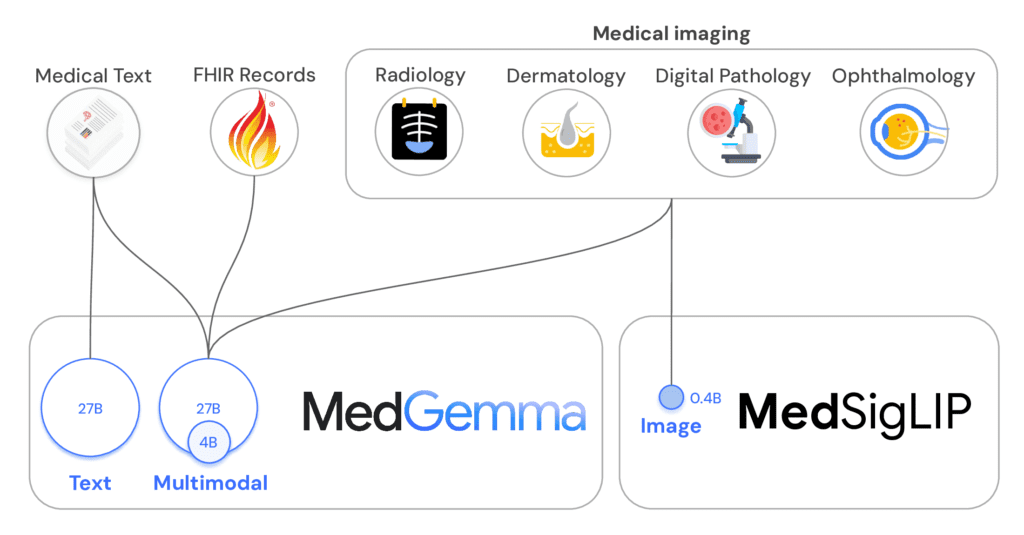

MedGemma is a family of open-weight medical AI models released by Google, built on the Gemma architecture but adapted specifically for healthcare and biomedical use cases.

Key characteristics include:

Medical-domain tuning (clinical language, biomedical concepts)

Open weights, enabling inspection, fine-tuning, and on-prem deployment

Designed for responsible use, with explicit positioning as decision support, not clinical authority

In simple terms: MedGemma is not trying to replace doctors. It is trying to become a reliable, transparent assistant that developers and institutions can actually trust.

Why “Open” Matters More in Medicine Than Anywhere Else

In most consumer applications, closed models are an inconvenience. In healthcare, they are a risk.

Transparency and Auditability

Open models allow:

Independent evaluation of bias and failure modes

Regulatory scrutiny

Reproducible research

This aligns far better with medical ethics than “trust us, it works.”
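To make “independent evaluation” concrete, here is a minimal Python sketch of a subgroup audit. It computes accuracy per patient subgroup and flags any subgroup that trails the best one by more than a chosen margin. The `Record` type, the subgroup labels, and the 0.10 margin are illustrative assumptions, not part of MedGemma or any Google tooling.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One evaluated example. All fields are hypothetical for this sketch."""
    subgroup: str   # e.g. a site, age band, or language
    expected: str   # reference answer from a labeled evaluation set
    predicted: str  # model output, normalized the same way as `expected`


def accuracy_by_subgroup(records):
    """Return {subgroup: fraction of records where predicted == expected}."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for r in records:
        totals[r.subgroup] += 1
        hits[r.subgroup] += int(r.predicted == r.expected)
    return {group: hits[group] / totals[group] for group in totals}


def flag_gaps(scores, max_gap=0.10):
    """List subgroups whose accuracy trails the best subgroup by more than max_gap."""
    if not scores:
        return []
    best = max(scores.values())
    return sorted(g for g, s in scores.items() if best - s > max_gap)


if __name__ == "__main__":
    sample = [
        Record("site_a", "yes", "yes"),
        Record("site_a", "no", "no"),
        Record("site_b", "yes", "no"),
        Record("site_b", "no", "no"),
    ]
    scores = accuracy_by_subgroup(sample)
    print(scores, flag_gaps(scores))
```

Exact-match accuracy is only a stand-in here. A real audit would pick metrics suited to the task and use evaluation sets large enough for the gaps to be meaningful.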

Customization for Real Clinical Settings

Hospitals differ. So do patient populations. Open models can be fine-tuned for:

Local languages

Regional disease prevalence

Institutional workflows

Closed APIs cannot realistically offer this depth of adaptation.

Data Privacy and Sovereignty

With MedGemma, organizations can:

Run models on-premises

Keep patient data inside institutional boundaries

Comply with strict data protection regulations

For healthcare systems, this is not optional; it is mandatory.
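As a rough illustration of what “on-premises” can look like in practice, the sketch below loads model weights from a local directory with the Hugging Face `transformers` library and tells the library not to contact the Hub. It assumes a text-only variant whose weights were downloaded in advance; the directory path is hypothetical, and a multimodal variant would likely need a different model class.

```python
import os
from pathlib import Path

# Hypothetical location of weights that were downloaded ahead of time.
LOCAL_MODEL_DIR = Path("/srv/models/medgemma")


def enforce_offline() -> None:
    """Ask the Hugging Face libraries not to make network calls to the Hub.

    These variables are read when the libraries are imported, so call this
    before importing `transformers`.
    """
    os.environ["HF_HUB_OFFLINE"] = "1"
    os.environ["TRANSFORMERS_OFFLINE"] = "1"


def load_local_model(model_dir: Path = LOCAL_MODEL_DIR):
    """Load tokenizer and model strictly from local files."""
    enforce_offline()
    if not model_dir.is_dir():
        raise FileNotFoundError(f"No local model directory at {model_dir}")

    # Imported lazily so the offline settings above are already in place.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(str(model_dir), local_files_only=True)
    model = AutoModelForCausalLM.from_pretrained(str(model_dir), local_files_only=True)
    return tokenizer, model
```

Offline loading keeps prompts and outputs on the machine, but it is only one piece. Network policy, access control, and logging still have to be handled by the institution.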

Potential Use Cases That Actually Make Sense

MedGemma is not a silver bullet, but it enables realistic, high-impact applications:

1. Clinical Documentation Support

Drafting summaries from structured notes

Translating between clinical and patient-friendly language

Reducing physician burnout (quietly, which is how doctors prefer it)

2. Medical Education and Training

Interactive case simulations

Question-answering grounded in medical terminology

Localized medical training tools in under-resourced regions

3. Research Acceleration

Literature review assistance

Hypothesis exploration

Data annotation support for medical datasets

4. Decision Support (Not Decision Making)

Flagging potential issues

Surfacing relevant guidelines

Assisting, not replacing, clinical judgment

The distinction matters. MedGemma is positioned as a copilot, not an autopilot.
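One way to encode the copilot idea in software is to treat every model output as a suggestion that cannot be acted on until a named clinician has reviewed it. The sketch below is a hypothetical design, not something MedGemma ships with; the `Suggestion` type and `review` function are invented for illustration.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Suggestion:
    """A model-generated suggestion awaiting human review (hypothetical type)."""
    text: str
    source: str = "model"
    reviewed_by: Optional[str] = None
    accepted: Optional[bool] = None

    @property
    def actionable(self) -> bool:
        # Only suggestions a clinician has explicitly accepted may be acted on.
        return self.reviewed_by is not None and self.accepted is True


def review(suggestion: Suggestion, clinician_id: str, accept: bool) -> Suggestion:
    """Record a clinician's decision and return an updated copy."""
    if not clinician_id.strip():
        raise ValueError("A reviewing clinician must be identified")
    return replace(suggestion, reviewed_by=clinician_id, accepted=accept)


if __name__ == "__main__":
    draft = Suggestion("Consider rechecking potassium before the next dose.")
    print(draft.actionable)                                   # not yet reviewed
    print(review(draft, "clinician_017", accept=True).actionable)
```

Keeping the record immutable means the unreviewed draft and the reviewed decision both survive, which makes the human sign-off easy to audit later.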

Safety, Responsibility, and the Limits of AI

Google has been explicit about one thing: MedGemma is not a diagnostic authority.

This is important for two reasons:

Legal and Ethical Reality

Medicine requires accountability. AI cannot be held accountable; people can.

Trust Through Constraint

Models that openly acknowledge their limits are more trustworthy than those that pretend omniscience.

MedGemma’s real value lies in supporting human expertise, not competing with it.

How MedGemma Could Shift the Healthcare AI Landscape

From Products to Platforms

Instead of buying opaque AI tools, hospitals can build their own systems on top of open foundations.

From Vendor Lock-In to Ecosystems

Researchers, startups, and institutions can collaborate on improvements rather than duplicating effort behind closed doors.

From “AI Hype” to Clinical Reality

Open evaluation encourages realistic benchmarking, failure analysis, and incremental improvement, which is exactly how medicine advances.

The Bigger Picture: Democratizing Medical AI

Healthcare inequality is not just about access to doctors; it is also about access to knowledge.

Open medical AI models:

Lower barriers for low-resource regions

Enable local innovation

Reduce dependence on external vendors

If used responsibly, MedGemma could help ensure that medical AI benefits are not limited to the few who can afford them.

Final Thoughts

Google’s MedGemma is not revolutionary because it is powerful. It is revolutionary because it is open, medical-first, and constrained by responsibility.

In a field where trust matters more than raw capability, that may be exactly what healthcare AI needs.

The real transformation will not come from AI replacing clinicians, but from clinicians finally having AI they can understand, adapt, and trust.
