Introduction

Before YOLO, computers didn’t see the world the way humans do. They inspected it slowly and cautiously, one object proposal at a time. Object detection worked, but it was fragmented, computationally expensive, and far from real time.

Then, in 2015, a single paper changed everything. “You Only Look Once: Unified, Real-Time Object Detection” by Joseph Redmon et al. introduced YOLOv1, a model that redefined how machines perceive images. It wasn’t just an incremental improvement; it was a conceptual revolution.

This is the story of how YOLOv1 was born, how it worked, and why its impact still echoes across modern computer vision systems.

Object Detection Before YOLO: A Fragmented World

Before YOLOv1, object detection research was dominated by complex pipelines stitched together from multiple independent components. Each component worked reasonably well on its own, but the overall system was fragile, slow, and difficult to optimize.

The Classical Detection Pipeline

A typical object detection system before 2015 looked like this:

1. Region proposal (hand-crafted or heuristic):
   - Selective Search
   - Edge Boxes
   - Sliding windows (earlier methods)
2. Feature extraction:
   - CNN features (AlexNet, VGG, etc.)
   - In R-CNN, computed separately for each proposed region
3. Classification:
   - SVMs or softmax classifiers
   - Each region classified independently
4. Bounding box regression:
   - Refining box coordinates after classification

Each stage was trained independently, often with different objectives.

Why This Was a Problem

- Redundant computation: in R-CNN, the CNN was run again on each of roughly 2,000 overlapping proposals per image.
- No global context: the model never truly “saw” the full image at once.
- Pipeline fragility: an object missed by the region-proposal stage could never be recovered downstream.
- Poor real-time performance: even Fast R-CNN, which shared convolutional features across proposals, stayed far from real time because Selective Search alone took roughly two seconds per image on a CPU.

Object detection worked, but it felt like a workaround, not a clean solution.
The YOLO Philosophy: Detection as a Single Learning Problem

YOLOv1 challenged the dominant assumption that object detection must be a multi-stage problem. Instead, it asked a radical question: why not predict everything at once, directly from pixels?

A Conceptual Shift

YOLO reframed object detection as a single regression problem from image pixels to bounding boxes and class probabilities. This meant:

- No region proposals
- No sliding windows
- No separate classifiers
- No post-hoc stitching (beyond a standard non-maximum suppression step to remove duplicate boxes)

Just one neural network, trained end-to-end.

Why This Matters

This shift:

- Simplified the learning objective
- Reduced engineering complexity
- Allowed gradients to flow across the entire detection task
- Enabled true real-time inference

YOLO didn’t just optimize detection; it redefined what detection was.

How YOLOv1 Works: A New Visual Grammar

YOLOv1 introduced a structured way for neural networks to “describe” an image.

Grid-Based Responsibility Assignment

The image is divided into an S × S grid (S = 7 in the paper). Each grid cell:

- Is responsible for detecting an object if that object’s center falls inside the cell
- Predicts bounding boxes and class probabilities

This created a spatial prior that helped the network reason about where objects tend to appear.

Bounding Box Prediction Details

Each grid cell predicts B bounding boxes (B = 2 in the paper), where each box consists of:

- x, y: center coordinates, relative to the bounds of the grid cell
- w, h: width and height, relative to the whole image
- a confidence score

The confidence score encodes:

Pr(object) × IoU(predicted box, ground truth)

This was clever: it forced the network to jointly reason about objectness and localization quality.

Class Prediction Strategy

Instead of predicting classes per bounding box, YOLOv1 predicted one set of class probabilities per grid cell, shared by all B boxes in that cell. With S = 7, B = 2, and the 20 PASCAL VOC classes, the network’s entire output is a 7 × 7 × 30 tensor.

This reduced complexity but introduced limitations in crowded scenes, a trade-off YOLOv1 knowingly accepted.
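The grid encoding described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper’s code: it assumes ground-truth boxes arrive as (x_center, y_center, w, h) normalized by image size, uses the paper’s PASCAL VOC setting (S = 7, B = 2, C = 20), and the helper names (`encode_box`, `confidence_target`, `assign_targets`) are hypothetical.

```python
from typing import Dict, List, Tuple

# (x_center, y_center, w, h); ground truth is normalized to [0, 1] by image size
Box = Tuple[float, float, float, float]

S, B, C = 7, 2, 20  # grid size, boxes per cell, classes (the paper's PASCAL VOC setting)


def output_size(s: int = S, b: int = B, c: int = C) -> int:
    """Each cell predicts b boxes of (x, y, w, h, confidence) plus c class probabilities."""
    return s * s * (b * 5 + c)


def encode_box(box: Box, s: int = S) -> Tuple[Tuple[int, int], Box]:
    """Return the responsible (row, col) cell and the box in YOLOv1 target form."""
    xc, yc, w, h = box
    col = min(int(xc * s), s - 1)  # clamp so a center at exactly 1.0 stays on the grid
    row = min(int(yc * s), s - 1)
    # x, y become offsets inside the responsible cell; w, h stay relative to the image.
    return (row, col), (xc * s - col, yc * s - row, w, h)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two center-format boxes in the same coordinate frame."""
    ax1, ay1 = a[0] - a[2] / 2, a[1] - a[3] / 2
    ax2, ay2 = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1 = b[0] - b[2] / 2, b[1] - b[3] / 2
    bx2, by2 = b[0] + b[2] / 2, b[1] + b[3] / 2
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def confidence_target(pred: Box, truth: Box, has_object: bool) -> float:
    """Training target for a predicted box's confidence: Pr(object) * IoU(pred, truth)."""
    return iou(pred, truth) if has_object else 0.0


def assign_targets(objects: List[Tuple[Box, int]], s: int = S):
    """Map each (box, class_id) to the cell that contains its center.

    A cell carries a single class label, so a second object centered in an
    already occupied cell cannot be represented. This sketch keeps the first
    object and returns the others as dropped.
    """
    cells: Dict[Tuple[int, int], Tuple[Box, int]] = {}
    dropped: List[Tuple[Box, int]] = []
    for box, cls in objects:
        cell, target = encode_box(box, s)
        if cell in cells:
            dropped.append((box, cls))
        else:
            cells[cell] = (target, cls)
    return cells, dropped


if __name__ == "__main__":
    print(output_size())  # 1470, i.e. a 7 x 7 x 30 output tensor
    # Two overlapping objects whose centers both fall in cell (3, 3).
    cells, dropped = assign_targets([((0.50, 0.50, 0.2, 0.3), 14), ((0.52, 0.55, 0.1, 0.2), 14)])
    print(len(cells), len(dropped))  # 1 1
```

The demo is the crowded-scene limitation in miniature: two objects centered in the same cell compete for one slot. How real implementations break such ties varies; keeping the first object is an arbitrary choice made here for simplicity.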
YOLOv1 Architecture: Designed for Global Reasoning

YOLOv1’s network architecture was intentionally designed to capture global image context.

Architecture Breakdown

- 24 convolutional layers
- 2 fully connected layers
- Inspired by GoogLeNet, but simpler: 1 × 1 reduction layers followed by 3 × 3 convolutions instead of inception modules
- The first 20 convolutional layers pretrained on ImageNet classification

The final fully connected layers allowed YOLO to:

- Combine spatially distant features
- Understand object relationships
- Avoid false positives caused by local texture patterns

Why Global Context Matters

Traditional detectors often mistook:

- Shadows for objects
- Textures for meaningful regions

YOLO’s global reasoning reduced these errors by understanding the scene as a whole. In the paper’s error analysis, YOLO made less than half as many background errors as Fast R-CNN.

The YOLOv1 Loss Function: Balancing Competing Objectives

Training YOLOv1 required solving a delicate optimization problem. The entire loss is a sum of squared errors, split into three parts.

Multi-Part Loss Components

YOLOv1’s loss function combined:

- Localization loss: errors in x, y, w, h, heavily weighted to prioritize accurate boxes
- Confidence loss: penalized incorrect objectness predictions
- Classification loss: penalized wrong class predictions

Smart Design Choices

- Higher weight for bounding box regression (λ_coord = 5)
- Lower weight for confidence errors in cells without objects (λ_noobj = 0.5), so the many empty cells don’t overwhelm the gradient from the few that contain objects
- The network predicts the square root of width and height, so a given absolute error costs more on a small box than on a large one

These design choices directly influenced how future detection losses were built.

Speed vs Accuracy: A Conscious Design Trade-Off

YOLOv1 was explicit about its priorities.

YOLO’s Position

Slightly worse localization is acceptable if it enables real-time vision.

Performance Impact

- YOLOv1 ran at 45 frames per second, and the smaller Fast YOLO at 155 frames per second, several times faster than competing detectors (Faster R-CNN with VGG-16 ran at about 7 FPS in the paper’s comparison).
- This enabled deployment on:
  - Live camera feeds
  - Robotics systems
  - Embedded devices (with Fast YOLO)

This trade-off reshaped how researchers evaluated detection systems: not just by accuracy, but by usability.

Where YOLOv1 Fell Short, and Why That’s Important

YOLOv1’s limitations weren’t accidental; they revealed deep insights.
Small Objects

- Grid resolution limited detection granularity.
- Small objects, especially in groups such as flocks of birds, often disappeared within grid cells.

Crowded Scenes

- One class prediction per cell, and only B = 2 boxes per cell
- Overlapping objects confused the model

Localization Precision

- Coarse bounding box predictions
- Lower IoU scores than region-based methods

Each weakness became a research question that drove YOLOv2, YOLOv3, and beyond.

Why YOLOv1 Changed Computer Vision Forever

YOLOv1 didn’t just introduce a model; it introduced a mindset.

End-to-End Learning as a Principle

Detection systems became:

- Unified
- Differentiable
- Easier to deploy and optimize

Real-Time as a First-Class Metric

After YOLO:

- Speed was no longer optional
- Real-time inference became an expectation

A Blueprint for Future Detectors

Modern architectures, CNN-based and transformer-based alike, inherit YOLO’s core ideas:

- Dense prediction
- Single-pass inference
- Deployment-aware design

Final Reflection: The Day Detection Became Vision

YOLOv1 marked the moment when object detection stopped being a patchwork of tricks and became a coherent vision system. It taught the field that:

- Seeing fast unlocks new realities
- Simplicity scales
- End-to-end learning changes how machines understand the world

YOLO didn’t just look once. It made computer vision see differently forever.
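For readers who want the loss function from the earlier section in concrete form, here is a pure-Python sketch. It is an illustration, not the paper’s implementation: it scores a list of grid cells one at a time, uses the paper’s λ_coord = 5 and λ_noobj = 0.5, treats the predictor with the highest IoU in an object cell as the responsible one, and the names (`Cell`, `coord_loss`, `yolo_v1_loss`) are hypothetical. Real training code vectorizes this over the whole S × S × B tensor and does not backpropagate through the IoU target.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

# (x, y, w, h): x, y relative to the cell; w, h relative to the image
Box = Tuple[float, float, float, float]

LAMBDA_COORD = 5.0  # up-weights localization error (value used in the paper)
LAMBDA_NOOBJ = 0.5  # down-weights confidence error for predictors with no object


@dataclass
class Cell:
    row: int
    col: int
    pred_boxes: List[Box]            # B predicted boxes
    pred_conf: List[float]           # B predicted confidence scores
    pred_probs: List[float]          # C class probabilities, shared by the whole cell
    truth_box: Optional[Box] = None  # set only if an object's center falls in this cell
    truth_class: Optional[int] = None


def to_image(box: Box, row: int, col: int, s: int) -> Box:
    """Convert cell-relative x, y to image-relative coordinates so IoU is meaningful."""
    x, y, w, h = box
    return ((col + x) / s, (row + y) / s, w, h)


def iou(a: Box, b: Box) -> float:
    """IoU of two center-format boxes in the same coordinate frame."""
    iw = max(0.0, min(a[0] + a[2] / 2, b[0] + b[2] / 2) - max(a[0] - a[2] / 2, b[0] - b[2] / 2))
    ih = max(0.0, min(a[1] + a[3] / 2, b[1] + b[3] / 2) - max(a[1] - a[3] / 2, b[1] - b[3] / 2))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def coord_loss(pred: Box, truth: Box) -> float:
    """lambda_coord * squared error on x, y and on sqrt(w), sqrt(h)."""
    px, py, pw, ph = pred
    tx, ty, tw, th = truth
    xy = (px - tx) ** 2 + (py - ty) ** 2
    # Predicted w, h are clamped at 0 so the square root is defined.
    wh = (math.sqrt(max(pw, 0.0)) - math.sqrt(tw)) ** 2 + (math.sqrt(max(ph, 0.0)) - math.sqrt(th)) ** 2
    return LAMBDA_COORD * (xy + wh)


def yolo_v1_loss(cells: List[Cell], s: int = 7) -> float:
    total = 0.0
    for cell in cells:
        if cell.truth_box is None:
            # Empty cell: push every predictor's confidence toward 0, down-weighted.
            total += LAMBDA_NOOBJ * sum(c ** 2 for c in cell.pred_conf)
            continue
        truth_img = to_image(cell.truth_box, cell.row, cell.col, s)
        ious = [iou(to_image(p, cell.row, cell.col, s), truth_img) for p in cell.pred_boxes]
        j = max(range(len(ious)), key=lambda k: ious[k])  # responsible predictor
        total += coord_loss(cell.pred_boxes[j], cell.truth_box)
        total += (cell.pred_conf[j] - ious[j]) ** 2  # confidence target is the IoU
        # The cell's other predictors are treated as "no object".
        total += LAMBDA_NOOBJ * sum(c ** 2 for k, c in enumerate(cell.pred_conf) if k != j)
        # Class error counts only for cells that contain an object.
        total += sum((p - (1.0 if k == cell.truth_class else 0.0)) ** 2
                     for k, p in enumerate(cell.pred_probs))
    return total
```

In a 7 × 7 grid most of the 49 cells are empty, which is why the empty-cell branch is scaled by λ_noobj: without it, their confidence errors would dominate the sum.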

Introduction

Artificial intelligence has been circling healthcare for years, diagnosing images, summarizing clinical notes, and predicting risks, yet much of its real power has remained locked behind proprietary walls. Google’s MedGemma changes that equation. By releasing open medical AI models built specifically for healthcare contexts, Google is signaling a shift from “AI as a black box” to AI as shared infrastructure for medicine.

This is not just another model release. MedGemma represents a structural change in how healthcare AI can be developed, validated, and deployed.

The Problem With Healthcare AI So Far

Healthcare AI has faced three persistent challenges:

1. Opacity: Many high-performing medical models are closed. Clinicians cannot inspect them, regulators cannot fully audit them, and researchers cannot adapt them.
2. General models, specialized risks: Large general-purpose language models are not designed for clinical nuance. Small mistakes in medicine are not “edge cases”; they are liability.
3. Inequitable access: Advanced medical AI often ends up concentrated in large hospitals, well-funded startups, or high-income countries.

The result is a paradox: AI shows promise in healthcare, but trust, scalability, and equity remain unresolved.

What Is MedGemma?

MedGemma is a family of open-weight medical AI models released by Google, built on the Gemma architecture but adapted specifically for healthcare and biomedical use cases.

Key characteristics include:

- Medical-domain tuning (clinical language, biomedical concepts)
- Open weights, enabling inspection, fine-tuning, and on-premises deployment
- Designed for responsible use, with explicit positioning as decision support, not clinical authority

In simple terms, MedGemma is not trying to replace doctors. It is trying to become a reliable, transparent assistant that developers and institutions can actually trust.

Why “Open” Matters More in Medicine Than Anywhere Else

In most consumer applications, closed models are an inconvenience.
In healthcare, they are a risk.

Transparency and Auditability

Open models allow:

- Independent evaluation of bias and failure modes
- Regulatory scrutiny
- Reproducible research

This aligns far better with medical ethics than “trust us, it works.”

Customization for Real Clinical Settings

Hospitals differ. So do patient populations. Open models can be fine-tuned for:

- Local languages
- Regional disease prevalence
- Institutional workflows

Closed APIs cannot realistically offer this depth of adaptation.

Data Privacy and Sovereignty

With MedGemma, organizations can:

- Run models on-premises
- Keep patient data inside institutional boundaries
- Comply with strict data protection regulations

For healthcare systems, this is not optional; it is mandatory.

Potential Use Cases That Actually Make Sense

MedGemma is not a silver bullet, but it enables realistic, high-impact applications:

1. Clinical Documentation Support
   - Drafting summaries from structured notes
   - Translating between clinical and patient-friendly language
   - Reducing physician burnout (quietly, which is how doctors prefer it)
2. Medical Education and Training
   - Interactive case simulations
   - Question answering grounded in medical terminology
   - Localized medical training tools in under-resourced regions
3. Research Acceleration
   - Literature review assistance
   - Hypothesis exploration
   - Data annotation support for medical datasets
4. Decision Support (Not Decision Making)
   - Flagging potential issues
   - Surfacing relevant guidelines
   - Assisting, not replacing, clinical judgment

The distinction matters. MedGemma is positioned as a copilot, not an autopilot.

Safety, Responsibility, and the Limits of AI

Google has been explicit about one thing: MedGemma is not a diagnostic authority. This is important for two reasons:

- Legal and ethical reality: medicine requires accountability. AI cannot be held accountable; people can.
- Trust through constraint: models that openly acknowledge their limits are more trustworthy than those that pretend to omniscience.
MedGemma’s real value lies in supporting human expertise, not competing with it.

How MedGemma Could Shift the Healthcare AI Landscape

From Products to Platforms

Instead of buying opaque AI tools, hospitals can build their own systems on top of open foundations.

From Vendor Lock-In to Ecosystems

Researchers, startups, and institutions can collaborate on improvements rather than duplicating effort behind closed doors.

From “AI Hype” to Clinical Reality

Open evaluation encourages realistic benchmarking, failure analysis, and incremental improvement, which is exactly how medicine advances.

The Bigger Picture: Democratizing Medical AI

Healthcare inequality is not just about access to doctors; it is about access to knowledge. Open medical AI models:

- Lower barriers for low-resource regions
- Enable local innovation
- Reduce dependence on external vendors

If used responsibly, MedGemma could help ensure that the benefits of medical AI are not limited to the few who can afford them.

Final Thoughts

Google’s MedGemma is not revolutionary because it is powerful. It is revolutionary because it is open, medical-first, and constrained by responsibility. In a field where trust matters more than raw capability, that may be exactly what healthcare AI needs.

The real transformation will not come from AI replacing clinicians, but from clinicians finally having AI they can understand, adapt, and trust.

Introduction

For years, real-time object detection has followed the same rigid blueprint: define a closed set of classes, collect massive labeled datasets, train a detector, bolt on a segmenter, then attach a tracker for video. This pipeline worked, but it was fragile, expensive, and fundamentally limited. Any change in environment, object type, or task often meant starting over.

Meta’s Segment Anything Model 3 (SAM 3) breaks this cycle. As described in the Coding Nexus analysis, SAM 3 is not just an improvement in accuracy or speed; it is a structural rethinking of how object detection, segmentation, and tracking should work in modern computer vision systems.

SAM 3 replaces class-based detection with concept-based understanding, enabling real-time segmentation and tracking from simple natural-language prompts. This shift has deep implications across robotics, AR/VR, video analytics, dataset creation, and interactive AI systems.

1. The Core Problem With Traditional Object Detection

Before understanding why SAM 3 matters, it’s important to understand what was broken.

1.1 Rigid Class Definitions

Classic detectors (YOLO, Faster R-CNN, SSD) operate on a fixed label set. If an object category is missing, or even slightly redefined, the model fails. “Dog” might work, but “small wet dog lying on the floor” does not.

1.2 Fragmented Pipelines

A typical real-time vision system involves:

- A detector for bounding boxes
- A segmenter for pixel masks
- A tracker for temporal consistency

Each component has its own failure modes, configuration overhead, and performance trade-offs.

1.3 Data Dependency

Every new task requires new annotations. Collecting and labeling data often costs more than training the model itself.

SAM 3 directly targets all three issues.

2. SAM 3’s Conceptual Breakthrough: From Classes to Concepts

The most important innovation in SAM 3 is the move from class-based detection to concept-based segmentation.
Instead of asking, “Is there a car in this image?”, SAM 3 answers, “Show me everything that matches this concept.” That concept can be expressed as:

- a short text phrase
- a descriptive noun phrase
- a visual example

This approach is called Promptable Concept Segmentation (PCS).

Why This Matters

- Concepts are open-ended
- No retraining is required for new concepts
- The same model works across images and videos
- Semantic understanding replaces rigid taxonomy

This fundamentally changes how humans interact with vision systems.

3. Unified Detection, Segmentation, and Tracking

SAM 3 eliminates the traditional multi-stage pipeline.

What SAM 3 Does in One Pass

- Detects all instances of a concept
- Produces pixel-accurate masks
- Assigns persistent identities across video frames

Unlike earlier SAM versions, which segmented one object per prompt, SAM 3 returns all matching instances simultaneously, each with its own identity for tracking. This makes real-time video understanding far more robust, especially in crowded or dynamic scenes.

4. How SAM 3 Works (High-Level Architecture)

While the Medium article avoids low-level math, it highlights several key architectural ideas.

4.1 Language–Vision Alignment

Text prompts are embedded into the same representational space as visual features, allowing semantic matching between words and pixels.

4.2 Presence-Aware Detection

SAM 3 doesn’t just segment; it first determines whether a concept exists in the scene at all, reducing false positives and improving precision.

4.3 Temporal Memory

For video, SAM 3 maintains internal memory so objects remain consistent even when they are:

- partially occluded
- temporarily out of frame
- changing shape or scale

This is why SAM 3 can replace standalone trackers.

5. Real-Time Performance Implications

A key insight from the article is that real-time no longer means simplified models.
SAM 3 demonstrates that high-quality segmentation, open-vocabulary understanding, and multi-object tracking can coexist in a single real-time system, provided the architecture is unified rather than modular. This redefines expectations for what “real-time” vision systems can deliver.

6. Impact on Dataset Creation and Annotation

One of the most immediate consequences of SAM 3 is its effect on data pipelines.

Traditional Annotation

- Manual labeling
- Long turnaround times
- High cost per image or frame

With SAM 3

- Prompt-based segmentation generates masks instantly
- Humans shift from labeling to verification
- Dataset creation scales dramatically faster

This is especially relevant for industries like autonomous driving, medical imaging, and robotics, where labeled data is a bottleneck.

7. New Possibilities in Video and Interactive Media

SAM 3 enables entirely new interaction patterns:

- Text-driven video editing
- Semantic search inside video streams
- Live AR effects based on descriptions, not predefined objects

For example: “Highlight all moving objects except people.” Such instructions were impractical with classical detectors; SAM 3’s concept-based approach brings them within reach, although compound instructions like this one may still need the external reasoning agents noted under limitations below.

8. Comparison With Previous SAM Versions

| Feature | SAM / SAM 2 | SAM 3 |
|---|---|---|
| Object count per prompt | One | All matching instances |
| Video tracking | Limited / external | Native |
| Vocabulary | Implicit | Open-ended |
| Pipeline complexity | Moderate | Unified |
| Real-time use | Experimental | Practical |

SAM 3 is not a refinement; it is a generational shift.

9. Current Limitations

Despite its power, SAM 3 is not a silver bullet:

- Compute requirements are still significant
- Complex reasoning (multi-step instructions) requires external agents
- Edge deployment remains challenging without distillation

However, these are engineering constraints, not conceptual ones.

10. Why SAM 3 Represents a Structural Shift in Computer Vision

SAM 3 changes the role of object detection in AI systems:

- From rigid perception → flexible understanding
- From labels → language
- From pipelines → unified models

As emphasized in the Coding Nexus article, this shift is comparable to the jump from keyword search to semantic search in NLP.

Final Thoughts

Meta’s SAM 3 doesn’t just improve object detection; it redefines how humans specify visual intent. By making language the interface and concepts the unit of understanding, SAM 3 pushes computer vision closer to how people naturally perceive the world.

In the long run, SAM 3 is less about segmentation masks and more about a future where vision systems understand what we mean, not just what we label.
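Two of the architectural ideas from section 4, a shared embedding space for text and image regions (4.1) and a presence check before instance scoring (4.2), can be illustrated with a toy in plain Python. This is not SAM 3’s implementation or API: the embeddings and the presence score below are made-up numbers standing in for what the real model predicts, and `match_concept` is a hypothetical name. What the toy does show is the PCS output contract: every region that matches the concept is returned, not just the best one, and a low presence score suppresses all of them at once.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def match_concept(
    prompt_emb: Sequence[float],
    region_embs: Dict[str, Sequence[float]],
    presence: float,
    threshold: float = 0.5,
) -> List[Tuple[str, float]]:
    """Return (region_id, score) for every region matching the concept, best first.

    score = presence * similarity, with cosine similarity rescaled from [-1, 1]
    to [0, 1]. `presence` is an image-level estimate that the concept appears at
    all; when it is low, every candidate is suppressed together.
    """
    matches = []
    for region_id, emb in region_embs.items():
        similarity = (cosine(prompt_emb, emb) + 1.0) / 2.0
        score = presence * similarity
        if score >= threshold:
            matches.append((region_id, score))
    return sorted(matches, key=lambda m: m[1], reverse=True)


if __name__ == "__main__":
    # Made-up 3-d embeddings standing in for a text prompt and three image regions.
    prompt = [1.0, 0.0, 0.0]
    regions = {"taxi_1": [0.9, 0.1, 0.0], "taxi_2": [0.8, 0.2, 0.1], "tree": [-0.7, 0.1, 0.7]}
    print(match_concept(prompt, regions, presence=0.95))  # both taxis, no tree
    print(match_concept(prompt, regions, presence=0.1))   # [] because the concept is judged absent
```

Multiplying by the presence score, rather than checking each region independently, is what lets one global “is it here at all?” decision cut false positives across the whole image.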

Introduction

In computer vision, segmentation used to feel like the “manual labor” of AI: click here, draw a box there, correct that mask, repeat a few thousand times, try not to cry.

Meta’s original Segment Anything Model (SAM) turned that grind into a point-and-click magic trick: tap a few pixels, get a clean object mask. SAM 2 pushed further into video, bringing real-time promptable segmentation to moving scenes.

Now SAM 3 arrives as the next major step: not just segmenting things you click, but segmenting concepts you describe. Instead of manually hinting at each object, you can say “all yellow taxis” or “players wearing red jerseys” and let the model find, segment, and track every matching instance in images and videos.

This post goes inside SAM 3: what it is, how it differs from its predecessors, what “Promptable Concept Segmentation” really means, and how it changes the way we think about visual foundation models.

1. From SAM to SAM 3: A short timeline

Before diving into SAM 3, it helps to step back and see how we got here.

SAM (v1): Click-to-segment

The original SAM introduced a powerful idea: a large, generalist segmentation model that could segment “anything” given visual prompts such as points, boxes, or rough masks. It was trained on SA-1B, a diverse dataset of about 11 million images and over 1 billion masks, and showed strong zero-shot segmentation performance across many domains.

SAM 2: Images and videos, in real time

SAM 2 extended the concept to video, treating an image as a single-frame video and adding a streaming memory mechanism to support real-time segmentation over long sequences.

Key improvements in SAM 2:

- Unified model for images and videos
- Streaming memory for efficient video processing
- A model-in-the-loop data engine used to build the large SA-V video segmentation dataset

But SAM 2 still followed the same interaction pattern: you specify a particular location (point, box, or mask) and get one object instance back at a time.
SAM 3: From “this object” to “this concept”

SAM 3 changes the game by introducing Promptable Concept Segmentation (PCS). Instead of saying “segment the thing under this click,” you can say “segment every dog in this video” and get:

- All instances of that concept
- Segmentation masks for each instance
- Consistent identities for each instance across frames (tracking)

In other words, SAM 3 is no longer just a segmentation tool; it’s a unified, open-vocabulary detection, segmentation, and tracking model for images and videos.

2. What exactly is SAM 3?

At its core, SAM 3 is a unified foundation model for promptable segmentation in images and videos that operates on concept prompts.

Core capabilities

According to Meta’s release and technical overview, SAM 3 can:

- Detect and segment objects: given a text or visual prompt, SAM 3 finds all matching object instances in an image or video and returns instance masks.
- Track objects over time: for video, SAM 3 maintains stable identities, so the same object can be followed across frames.
- Work with multiple prompt types:
  - Text: “yellow school bus”, “person wearing a backpack”
  - Image exemplars: example boxes or masks of an object
  - Visual prompts: points, boxes, masks (SAM 2-style)
  - Combined prompts: e.g., “red car” plus one exemplar, for even sharper control
- Support open-vocabulary segmentation: it doesn’t rely on a closed set of predefined classes. Instead, it uses language prompts and exemplars to generalize to new concepts.
- Scale to large image and video collections: SAM 3 is explicitly designed to handle the “find everything like X” problem across large datasets, not just a single frame.

Compared to SAM 2, SAM 3 formalizes PCS and adds language-driven concept understanding while preserving (and improving) the interactive segmentation capabilities of earlier versions.

3. Promptable Concept Segmentation (PCS): The big idea

“Promptable Concept Segmentation” is the central new task that SAM 3 tackles.
You provide a concept prompt, and the model returns masks and IDs for all objects matching that concept. Concept prompts can be:

- Text prompts: simple noun phrases like “red apple”, “striped cat”, “football player in blue”, “car in the left lane”.
- Image exemplars: positive or negative example boxes around objects you care about.
- Combined prompts: text plus exemplars, e.g., “delivery truck” plus one example bounding box to steer the model.

This is fundamentally different from classic SAM-style visual prompts:

| Feature | SAM / SAM 2 | SAM 3 (PCS) |
|---|---|---|
| Prompt type | Visual (points/boxes/masks) | Text, exemplars, visual, or combinations |
| Output per prompt | One instance per interaction | All instances of the concept |
| Task scope | Local, instance-level | Global, concept-level across frame(s) |
| Vocabulary | Implicit, not language-driven | Open-vocabulary via text + exemplars |

This means you can do things like:

- “Find every motorcycle in this 10-minute traffic video.”
- “Segment all people wearing helmets in a construction site dataset.”
- “Count all green apples versus red apples in a warehouse scan.”

All without manually clicking each object. The dream of “query-like segmentation at scale” is much closer to reality.

4. Under the hood: How SAM 3 works (conceptually)

Meta has published an overview and open-sourced the reference implementation via GitHub and model hubs such as Hugging Face. While the exact implementation details are in the official paper and code, the high-level ingredients look roughly like this:

- Vision backbone: a powerful image/video encoder transforms each frame into a rich spatiotemporal feature representation.
- Concept encoder (language + exemplars):
  - Text prompts are encoded using a language model or text encoder.
  - Visual exemplars (e.g., boxes or masks around an example object) are encoded as visual features.
  - The system fuses these into a concept embedding that represents “what you’re asking for”.
- Prompt–vision fusion: the concept embedding interacts with the visual features (e.g., via attention) to highlight regions that correspond to the requested concept.
- Instance segmentation head: from the fused feature map, the model produces:
  - Binary or soft masks
  - Instance IDs
  - Optional detection boxes or scores
- Temporal component for tracking: for video, SAM 3 uses mechanisms inspired by SAM 2’s streaming memory to maintain consistent identities for objects across frames, enabling efficient concept tracking over time.

You can think of SAM 3 as “SAM 2 + a powerful vision-language concept engine,” wrapped into a single unified model.

5. SAM 3 vs SAM 2 and traditional detectors

How does SAM 3 actually compare

Introduction

Fine-tuning a YOLO model is a targeted effort to adapt powerful, pretrained detectors to a specific domain. The hard part is not the network. It is getting the right labeled data, at scale, with repeatable quality. An automated data-labeling pipeline combines model-assisted prelabels, active learning, pseudo-labeling, synthetic data, and human verification to deliver that data quickly and cheaply.

This guide shows why that pipeline matters, how its stages fit together, and which controls and metrics keep the loop reliable, so you can move from a small seed dataset to a production-ready detector with predictable cost and measurable gains.

Target audience and assumptions

This guide assumes:

- You use YOLO (v8+ or a similar Ultralytics-family model).
- You have access to modest GPU resources (1–8 GPUs).
- You can run a labeling UI with prelabel ingestion (CVAT, Label Studio, Roboflow, Supervisely).
- You aim for production deployment on cloud or edge.

End-to-end pipeline (high level)

1. Data ingestion: cameras, mobile, recorded video, public datasets, client uploads.
2. Preprocess: frame extraction, deduplication, scene grouping, metadata capture.
3. Prelabel: run a baseline detector to create model suggestions.
4. Human-in-the-loop: annotators correct predictions.
5. Active learning: select the most informative images for human review.
6. Pseudo-labeling: a teacher model labels high-confidence unlabeled images.
7. Combine, curate, augment, and convert to YOLO/COCO format.
8. Fine-tune the model.
9. Track experiments.
10. Export, optimize, deploy.
11. Monitor and retrain.

Design each stage for automation via API hooks, and keep datasets and specs under version control.

Data collection and organization

Inputs and signals to collect for every file:

- Source metadata: source ID, timestamp, camera metadata, scene ID, originating video ID, uploader ID.
- Label metadata: annotator ID, review pass, annotation confidence, label source (human/pseudo/prelabel/synthetic).

Store provenance. Use scene/video grouping to create train/val splits that avoid leakage, so near-identical frames from the same scene never end up on both sides of the split.
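A leakage-free split by scene or video can be made deterministic by hashing the group ID: every frame from one scene lands on the same side, and a scene never migrates between splits as new data arrives. A minimal sketch, assuming each record is a dict with hypothetical `file` and `scene_id` keys:

```python
import hashlib
from collections import defaultdict
from typing import Dict, List


def split_for_group(group_id: str, val_fraction: float = 0.2) -> str:
    """Deterministically assign a scene/video ID to 'train' or 'val'."""
    digest = hashlib.sha256(group_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32  # roughly uniform in [0, 1)
    return "val" if bucket < val_fraction else "train"


def group_split(
    records: List[Dict[str, str]], group_key: str = "scene_id", val_fraction: float = 0.2
) -> Dict[str, List[str]]:
    """Split file records so that no scene appears in both train and val."""
    splits: Dict[str, List[str]] = defaultdict(list)
    for rec in records:
        splits[split_for_group(rec[group_key], val_fraction)].append(rec["file"])
    return dict(splits)


if __name__ == "__main__":
    # Hypothetical pool: 20 scenes with 5 near-duplicate frames each.
    records = [{"file": f"s{i}_f{j}.jpg", "scene_id": f"scene{i}"} for i in range(20) for j in range(5)]
    splits = group_split(records, val_fraction=0.2)
    print({name: len(files) for name, files in splits.items()})
```

Because whole scenes move together, the validation share is only approximately `val_fraction` when there are few scenes. scikit-learn’s `GroupShuffleSplit` gives the same no-shared-groups guarantee for a one-off split; the hash approach is shown here because it stays stable as the pool grows.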
Target datasets:

- Seed: 500–2,000 diverse images with human labels (task-dependent).
- Scaling pool: 10k–100k+ unlabeled frames for pseudo-labeling and active learning.
- Validation: 500–2,000 strictly human-verified images. Never mix pseudo labels into validation.

Label ontology and specification

Keep the class set minimal and precise, and avoid overlapping classes. Produce a short spec covering inclusion rules, occlusion thresholds, truncated objects, and the small-object policy. Include 10–20 exemplar images per rule. Version the spec and require sign-off before mass labeling. Track label lineage in a lightweight DB or metadata store.

Pre-labeling (model-assisted)

Why: it speeds up annotators by 2–10x.

How:

1. Run a baseline (pretrained) YOLO model across the unlabeled pool.
2. Save predictions in a standard format (YOLO .txt or COCO JSON).
3. Import predictions as an annotation layer in the UI.
4. Mark bounding boxes with their prediction confidence.
5. To increase yield, show annotators only images above a minimum score threshold or images with predicted classes that are absent from the dataset.

Practical command (Ultralytics), with save_txt and save_conf added so the predictions are written as YOLO-format .txt labels with confidences rather than only as annotated images:

yolo detect predict model=yolov8n.pt source=/data/pool imgsz=640 conf=0.15 save=True save_txt=True save_conf=True

Adjust conf to control annotation effort: a lower threshold gives annotators more candidate boxes to confirm or delete, a higher one fewer. See the Ultralytics fine-tuning docs for details.

Human-in-the-loop workflow and QA

Workflow:

1. Pull the top-K pre-labeled images into the annotation UI.
2. Present predicted boxes as editable by the annotator, and show model confidence.
3. Enforce QA review on a stratified sample.
4. Require a second reviewer on disagreement.
5. Flag images with ambiguous cases for specialist review.

Quality controls:

- Inter-annotator agreement tracking.
- Random audit sampling.
- Automatic bounding-box sanity checks.

Log QA metrics and use them in dataset weighting.

Active learning: selection strategies

Active learning reduces labeling needs by focusing human effort. Use a hybrid selection score:

Selection score = α·uncertainty + β·novelty + γ·diversity

Where:

- uncertainty = 1 − max_class_confidence across detections.
- novelty = distance in feature space from the labeled set (use backbone features).
- diversity = a clustering score that penalizes redundant images.

Common acquisition functions:

- Uncertainty sampling (low confidence).
- Margin sampling (difference between the top two class scores).
- Core-set selection (maximum coverage).
- Density-weighted uncertainty (prioritize uncertain images in dense regions).

Recent surveys on active learning report consistent gains in sample efficiency. Use ensembles or MC-Dropout for better uncertainty estimates.

Pseudo-labeling and semi-supervised expansion

Pseudo-labeling lets you expand labeled data cheaply. Risk: noisy boxes hurt learning.

Controls:

- Teacher strength: prefer a high-quality teacher model (larger backbone or ensemble).
- Dual thresholds: classification_confidence ≥ T_cls (e.g., 0.9) and localization_quality ≥ T_loc (e.g., an IoU proxy or center-variance metric).
- Weighting: add pseudo samples with a lower loss weight w_pseudo (e.g., 0.1–0.5), or reweight samples by teacher confidence.
- Filtering: apply density-guided or score-consistency filters to remove dense false positives.
- Consistency training: augment pseudo-labeled examples and enforce stable predictions (consistency loss).

Methods such as PseCo and its follow-ups detail localization-aware pseudo labels and consistency training. These approaches improve pseudo-label reliability and downstream performance.

Synthetic data and domain randomization

When real data is rare or dangerous to collect, generate synthetic images.

Best practices:

- Use domain randomization: vary lighting, textures, backgrounds, camera pose, noise, and occlusion.
- Mix synthetic and real: pretrain on synthetic, then fine-tune on a small real set.
- Validate on a held-out real validation set. Synthetic validation metrics often overestimate real performance; always check on real data. Recent studies in manufacturing and robotics confirm these trade-offs.
- Tools: Blender+Python, Unity Perception, NVIDIA Omniverse Replicator.
- Save segmentation/mask/instance metadata for downstream tasks.

Augmentation policy (practical)

YOLO benefits from strong on-the-fly augmentation early in training and reduced augmentation in the final passes.

Suggested phased policy:

- Phase 1 (warmup, epochs 0–20): aggressive augmentation. Mosaic, MixUp, random scale, color jitter, blur, JPEG corruption.
- Phase 2 (mid training, epochs 21–60): moderate augmentation. Keep Mosaic but lower its probability.
- Phase 3 (final fine-tune, last 10–20% of epochs): minimal augmentation to let the model settle.

Notes:

- Mosaic helps small-object learning but may introduce unnatural context. Reduce mosaic probability in the final phases (Ultralytics’ close_mosaic setting switches it off for the last N epochs).
- Use CutMix or copy-paste to balance rare classes.
- Do not augment validation or test splits.

Ultralytics docs include augmentation specifics and recommended settings.

YOLO fine-tuning recipes (detailed)

Choose the starting model based on the latency/accuracy trade-off:

- Iteration / prototyping: yolov8n (nano) or yolov8s (small).
- Production: yolov8m, or yolov8l/x depending on the target.

Standard recipe:

1. Prepare data.yaml:

train: /data/train/images
val: /data/val/images
nc: N
names: ['class0', 'class1', …]

where N is the number of classes and must equal the length of names.

2. Stage 1, backbone frozen (freeze=10 freezes the first 10 layers, which in YOLOv8 make up the backbone, so the neck and head adapt first). The optimizer is set explicitly because with the default optimizer=auto, Ultralytics chooses its own learning rate and ignores lr0:

yolo detect train model=yolov8n.pt data=data.yaml epochs=25 imgsz=640 batch=32 freeze=10 optimizer=SGD momentum=0.9 lr0=0.001

3. Stage 2, full model unfrozen, starting from the Stage 1 weights (Ultralytics saves them under runs/detect/ by default):

yolo detect train model=runs/detect/train/weights/last.pt data=data.yaml epochs=75 imgsz=640 batch=16 optimizer=SGD momentum=0.9 lr0=0.0003

4. Final sweep: lower the LR, turn off heavy augmentations, and train a few epochs to stabilize.

Hyperparameter notes:

- Optimizer: SGD with momentum 0.9 usually generalizes better for detection; AdamW works for quick convergence.
- LR: warmup, then cosine decay, is recommended. Start LR based

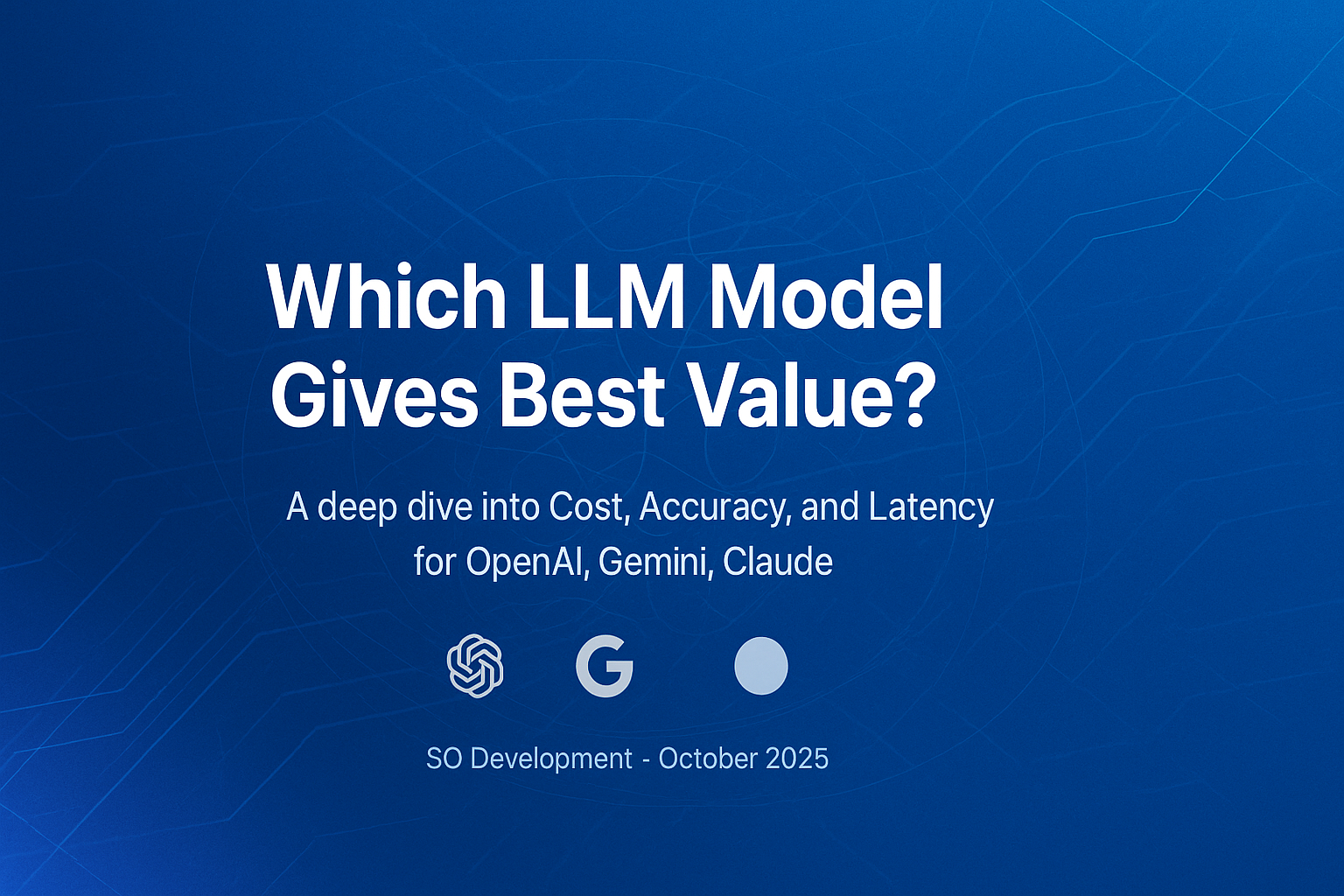

Introduction

In 2025, choosing the right large language model (LLM) is about value, not hype. The true measure of performance is how well a model balances cost, accuracy, and latency under real workloads. Every token costs money, every delay affects user experience, and every wrong answer adds hidden rework.

The market now centers on three leaders: OpenAI, Google, and Anthropic. OpenAI's GPT-4o mini focuses on balanced efficiency, Google's Gemini 2.5 lineup scales from the high-end Pro tier to the budget Flash tiers, and Anthropic's Claude Sonnet 4.5 delivers top reasoning accuracy at a premium. This guide compares them side by side to show which model delivers the best performance per dollar for your specific use case.

Pricing Snapshot (Representative)

| Provider | Model / Tier | Input ($/MTok) | Output ($/MTok) | Notes |
|---|---|---|---|---|
| OpenAI | GPT-4o mini | $0.60 | $2.40 | Cached inputs available; balanced for chat and RAG. |
| Anthropic | Claude Sonnet 4.5 | $3 | $15 | High output cost; excels on hard reasoning and long runs. |
| Google | Gemini 2.5 Pro | $1.25 | $10 | Strong multimodal performance; higher price tier above 200k tokens. |
| Google | Gemini 2.5 Flash | $0.30 | $2.50 | Low latency, high throughput. Batch discounts possible. |
| Google | Gemini 2.5 Flash-Lite | $0.10 | $0.40 | Lowest-cost option for bulk transforms and tagging. |

Accuracy: Choose by Failure Cost

Public leaderboards shift rapidly. The typical pattern:
- Claude Sonnet 4.5 often wins on complex or long-horizon reasoning. Expect fewer "almost right" answers.
- Gemini 2.5 Pro is strong as a multimodal generalist and handles vision-heavy tasks well.
- GPT-4o mini provides stable, "good enough" accuracy for common RAG and chat flows at low unit cost.

Rule of thumb: if an error forces expensive human review or causes customer churn, buy accuracy. Otherwise, buy throughput.

Latency and Throughput
- Gemini Flash / Flash-Lite: engineered for low time-to-first-token and a high decode rate. Good for high-volume real-time pipelines.
- GPT-4o / 4o mini: fast and predictable streaming; strong for interactive chat UX.
- Claude Sonnet 4.5: responsive in normal mode; extended "thinking" modes trade latency for correctness. Use them selectively.

Value by Workload

| Workload | Recommended Model(s) | Why |
|---|---|---|
| RAG chat / Support / FAQ | GPT-4o mini; Gemini Flash | Low output price; fast streaming; stable behavior. |
| Bulk summarization / tagging | Gemini Flash / Flash-Lite | Lowest unit price and batch discounts for high throughput. |
| Complex reasoning / multi-step agents | Claude Sonnet 4.5 | Higher first-pass correctness; fewer retries. |
| Multimodal UX (text + images) | Gemini 2.5 Pro; GPT-4o mini | Gemini for vision; GPT-4o mini for balanced mixed-modal UX. |
| Coding copilots | Claude Sonnet 4.5; GPT-4.x | Better for long edits and agentic behavior; validate on real repos. |

A Practical Evaluation Protocol
1. Define success per route: exactness, citation rate, pass@1, refusal rate, p95 latency, and cost per correct task.
2. Build a 100–300 item eval set from real tickets and edge cases.
3. Test three budgets per model: short, medium, and long outputs. Track cost and p95 latency.
4. Add a retry budget of 1. If "retry-then-pass" is common, the cheaper model may cost more overall.
5. Lock a winner per route and re-run quarterly.

Cost Examples (Ballpark)

Scenario: 100k calls/day, 300 input and 250 output tokens each (30M input and 25M output tokens per day), priced with the snapshot above:
- GPT-4o mini ≈ $78/day
- Gemini 2.5 Flash-Lite ≈ $13/day
- Claude Sonnet 4.5 ≈ $465/day

These are illustrative. Focus on cost per correct task, not raw unit price.

Deployment Playbook
1) Segment by stakes: low-risk -> Flash-Lite/Flash. General UX -> GPT-4o mini. High-stakes -> Claude Sonnet 4.5.
2) Cap outputs: set hard generation caps and concise style guidelines.
3) Cache aggressively: system prompts and RAG scaffolds are prime candidates.
4) Guardrail and verify: use lightweight validators for JSON schema, citations, and units.
5) Observe everything: log tokens, p50/p95 latency, pass@1, and cost per correct task.
6) Negotiate enterprise levers: SLAs, reserved capacity, volume discounts.

Model-specific Tips
- GPT-4o mini: the sweet spot for mixed RAG and chat. Use cached inputs for reusable prompts.
- Gemini Flash / Flash-Lite: the default for million-item pipelines. Combine Batch and caching.
- Gemini 2.5 Pro: move up to it for vision-intensive or higher-accuracy needs beyond Flash.
- Claude Sonnet 4.5: enable extended reasoning only when the stakes justify slower output.

FAQ

Q: Can one model serve all routes?
A: Yes, but you will overpay or under-deliver somewhere.

Q: Do leaderboards settle it?
A: Use them to shortlist. Your evals decide.

Q: When should you move up a tier?
A: When pass@1 on your evals stalls below target and retries burn budget.

Q: When should you move down a tier?
A: When outputs are short and stable, and user tolerance for minor variance is high.

Conclusion

Modern LLMs win with disciplined data curation, pragmatic architecture, and robust training. The best teams run a loop: deploy, observe, collect, synthesize, align, and redeploy. Retrieval grounds truth. Preference optimization shapes behavior. Quantization and batching deliver scale. Above all, evaluation must be continuous and business-aligned. Use the checklists to operationalize. Start small, instrument everything, and iterate the flywheel.
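The ballpark daily costs come from simple arithmetic on the pricing snapshot: tokens per day divided by one million, times the per-MTok price. Applied to the 100k-call scenario, that gives about $78/day for GPT-4o mini, $13/day for Gemini 2.5 Flash-Lite, and $465/day for Claude Sonnet 4.5. The Python sketch below reproduces the calculation and extends it to cost per correct task under the retry-budget rule from the evaluation protocol. The dictionary keys are just labels, the pass rates you feed in are placeholders to be measured on your own eval set, and prices change, so treat the table as a snapshot rather than a quote.

```python
# Representative per-million-token prices (input, output) from the pricing snapshot.
PRICES = {
    "gpt-4o-mini": (0.60, 2.40),
    "gemini-2.5-flash-lite": (0.10, 0.40),
    "claude-sonnet-4.5": (3.00, 15.00),
}


def daily_cost(model: str, calls: int, in_tokens: int, out_tokens: int) -> float:
    """Raw spend in dollars for `calls` requests with a fixed per-call token budget."""
    price_in, price_out = PRICES[model]
    return calls * (in_tokens * price_in + out_tokens * price_out) / 1_000_000


def cost_per_correct(call_cost: float, pass_rate: float, retries: int = 1) -> float:
    """Expected spend per correct task when each failed attempt is retried.

    Assumes attempts succeed independently with probability pass_rate (> 0) and that
    failures are detected for free (e.g. by a validator). Tasks that still fail after
    all retries consume budget but deliver nothing, which the division accounts for.
    """
    fail = 1.0 - pass_rate
    expected_attempts = sum(fail ** k for k in range(retries + 1))
    p_success = 1.0 - fail ** (retries + 1)
    return call_cost * expected_attempts / p_success


if __name__ == "__main__":
    for model in PRICES:
        per_day = daily_cost(model, 100_000, 300, 250)
        print(f"{model}: ${per_day:,.2f}/day, ${per_day / 100_000:.6f}/call")
```

Under these assumptions the retry expression simplifies to per-call cost divided by pass rate, whatever the retry budget. A model that is four times cheaper per call but passes half as often therefore costs half as much per correct task, not a quarter.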

Introduction

In the fast-paced world of computer vision, object detection has always stood at the forefront of innovation. From basic sliding-window techniques to modern, transformer-powered detectors, the field has made monumental strides in accuracy, speed, and efficiency. Among the most transformative breakthroughs in this domain is the YOLO (You Only Look Once) family, an object detection architecture that revolutionized real-time detection. With each new iteration, YOLO has brought tangible improvements and redefined what is possible in real-time detection.

YOLOv12, released in early 2025, set a new benchmark in balancing speed and accuracy across edge devices and cloud environments. By mid-2025, YOLOv13 pushed the limits even further.

This blog provides an in-depth, feature-by-feature comparison between YOLOv12 and YOLOv13: how YOLOv13 improves upon its predecessor, the core architectural changes, performance benchmarks, deployment use cases, and what these mean for researchers and developers. If you're a data scientist, ML engineer, or AI enthusiast, this deep dive will give you the clarity to choose the best model for your needs, or even to contribute to the future of real-time detection.

Brief History of YOLO: From YOLOv1 to YOLOv12

The YOLO architecture was introduced by Joseph Redmon in 2016 with the promise of "You Only Look Once", a radical departure from region proposal methods like R-CNN and Fast R-CNN. Unlike these, YOLO predicts bounding boxes and class probabilities directly from the input image in a single forward pass. The result: blazing speed with competitive accuracy.

Since then, the family has evolved rapidly:
- YOLOv3 introduced multi-scale prediction and a better backbone (Darknet-53).
- YOLOv4 added Mosaic augmentation, CIoU loss, and Cross Stage Partial connections.
- YOLOv5 (community-driven) emphasized modularity and ease of deployment.
- YOLOv7 introduced E-ELAN modules and planned model re-parameterization.
- YOLOv8–YOLOv10 focused on integration with PyTorch, ONNX, quantization, and real-time streaming.
- YOLO11 refined the architecture with C3k2 blocks and a C2PSA spatial-attention module.
- YOLOv12, released in early 2025, added support for cross-modal data, large-context modeling, and efficient vision transformers.

YOLOv13 is the culmination of all these efforts, building on the strong foundation of v12 with major improvements in architecture, context-awareness, and compute optimization.

Overview of YOLOv12

YOLOv12 was a significant milestone. It introduced several novel components:
- A transformer-enhanced detection head with sparse attention for improved small-object detection.
- A hybrid backbone (Ghost + Swin blocks) for efficient feature extraction.
- Support for multi-frame temporal detection, aiding video-stream performance.
- Dynamic anchor generation using K-means++ during training.
- Lightweight quantization-aware training (QAT), enabling optimized edge deployment without a separate retraining step.

It was the first YOLO version to target not just static images but also real-time video pipelines, drone feeds, and IoT cameras using dynamic frame processing.

Overview of YOLOv13

YOLOv13 represents a leap forward. The development team focused on three pillars: contextual intelligence, hardware adaptability, and training efficiency. Key innovations include:
- YOLO-TCM (Temporal-Context Modules), which learn spatio-temporal relationships across frames.
- Dynamic Task Routing (DTR), allowing conditional computation depending on scene complexity.
- Low-Rank Efficient Transformers (LoRET) for longer-range dependencies with fewer parameters.
- Zero-cost Quantization (ZQ), which enables near-lossless conversion to INT8 without fine-tuning.
- YOLO-Flex Scheduler, which adjusts inference complexity in real time based on battery or latency budget.

Together, these enhancements make YOLOv13 suitable for adaptive real-time AI, edge computing, autonomous vehicles, and AR applications.
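The overviews do not describe how ZQ or YOLOv12's QAT work internally, but both target the same representation: 8-bit integer weights plus a floating-point scale. The Python sketch below shows generic symmetric, per-tensor post-training quantization to illustrate what "conversion to INT8" means and where its rounding error comes from. It is not YOLOv13's method; production toolchains such as TensorRT, ONNX Runtime, and TFLite add calibration data, per-channel scales, and activation quantization.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to [-127, 127] so the range stays symmetric (-128 is left unused).
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to approximate float values."""
    return [qi * scale for qi in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.003, 1.0]
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(q, scale, worst)  # per-weight rounding error is at most scale / 2
```

Small weights such as 0.003 collapse to zero here because one global scale is set by the largest weight; that loss is exactly what calibration and per-channel scales are meant to reduce.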
Architectural Differences

| Component | YOLOv12 | YOLOv13 |
|---|---|---|
| Backbone | GhostNet + Swin Hybrid | FlexFormer with dynamic depth |
| Neck | PANet + CBAM attention | Dual-path FPN + Temporal Memory |
| Detection Head | Transformer with Sparse Attention | LoRET Transformer + Dynamic Masking |
| Anchor Mechanism | Dynamic K-means++ | Anchor-free + Adaptive Grid |
| Input Pipeline | Mosaic + MixUp + CutMix | Vision Mixers + Frame Sampling |
| Output Layer | NMS + Confidence Filtering | Soft-NMS + Query-based Decoding |

Performance Comparison: Speed, Accuracy, and Efficiency

COCO Dataset Results

| Metric | YOLOv12 (640px) | YOLOv13 (640px) |
|---|---|---|
| mAP@[0.5:0.95] | 51.2% | 55.8% |
| FPS (Tesla T4) | 88 | 93 |
| Params | 38M | 36M |
| FLOPs | 94B | 76B |

Mobile Deployment (Edge TPU)

| Model Variant | YOLOv12-Tiny | YOLOv13-Tiny |
|---|---|---|
| mAP@0.5 | 42.1% | 45.9% |
| Latency (ms) | 18 | 13 |
| Power Usage (W) | 2.3 | 1.7 |

YOLOv13 offers better accuracy with fewer computations, making it ideal for power-constrained environments.

Backbone Enhancements in YOLOv13

The new FlexFormer backbone is central to YOLOv13's success. It:
- Integrates convolutional stages for early spatial encoding.
- Employs sparse attention layers at mid-depth for contextual awareness.
- Uses a depth-dynamic scheduler, adapting model depth per image.

This dynamic structure means simpler images can pass through shallow paths, while complex ones use deeper layers, saving resources during inference.

Transformer Integration and Feature Fusion

YOLOv13 transitions from fixed-grid attention to query-based decoding heads using LoRET (Low-Rank Efficient Transformers). Key advantages:
- Handles occlusion better.
- Improves long-tail object detection.
- Maintains real-time inference (<10 ms/frame).

Additionally, the dual-path feature pyramid network enables better fusion of multi-scale features without increasing memory usage.
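The architecture table lists Soft-NMS in YOLOv13's output layer. Instead of discarding every box that overlaps a higher-scoring one, as standard NMS does, Soft-NMS (Bodla et al., 2017) decays the scores of overlapping boxes, so a genuinely distinct but heavily occluded object can survive with a reduced score. Below is a minimal pure-Python sketch of the Gaussian variant. Boxes are (x1, y1, x2, y2), sigma=0.5 and the score floor are assumed defaults, and nothing here is taken from a YOLOv13 codebase.

```python
import math

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes: list[Box], scores: list[float],
             sigma: float = 0.5, score_thresh: float = 0.001) -> list[tuple[int, float]]:
    """Gaussian Soft-NMS. Returns (original_index, rescored_score) in selection order."""
    remaining = {i: s for i, s in enumerate(scores)}
    kept = []
    while remaining:
        best = max(remaining, key=remaining.get)
        best_score = remaining.pop(best)
        if best_score < score_thresh:
            break  # every remaining score is at or below this one
        kept.append((best, best_score))
        for i in remaining:
            overlap = iou(boxes[best], boxes[i])
            remaining[i] *= math.exp(-(overlap ** 2) / sigma)  # decay, don't delete
    return kept


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(soft_nms(boxes, [0.9, 0.8, 0.7]))
```

In this usage example, hard NMS with a 0.5 IoU threshold would delete the second box (IoU ≈ 0.68 with the first); Soft-NMS keeps it, ranked last with a score of roughly 0.32.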
Improved Training Pipelines

YOLOv13 introduces a more intelligent training pipeline:
- Adaptive learning-rate warmup.
- Soft-label distillation from previous versions.
- Self-refinement loops that adjust detection targets mid-training.
- Dataset-aware data augmentation based on scene statistics.

As a result, training is 20–30% faster on large datasets and requires fewer epochs to converge.

Applications in Industry
- Autonomous Vehicles. YOLO: lane and pedestrian detection. Mask R-CNN: object boundary detection. SAM: complex environment understanding, rare-object segmentation.
- Healthcare. Mask R-CNN and DeepLab: tumor detection, organ segmentation. SAM: annotating rare anomalies in radiology scans with minimal data.
- Agriculture. YOLO: detecting pests, weeds, and crops. SAM: counting fruits or segmenting plant parts for yield analysis.
- Retail & Surveillance. YOLO: real-time object tracking. SAM: tagging items in inventory or crowd segmentation.

Quantization and Edge Deployment

YOLOv13 focuses heavily on real-world deployment:
- Supports ZQ (Zero-cost Quantization) directly from the full-precision model.
- Deployable to ONNX, CoreML, TensorRT, and WebAssembly.
- Works out of the box with Edge TPUs, Jetson Nano, Snapdragon NPUs, and even Raspberry Pi 5.

YOLOv12 was already lightweight, but YOLOv13 expands deployment targets and simplifies conversion.

Benchmarking Across Datasets

| Dataset | YOLOv12 mAP | YOLOv13 mAP | Notable Gains |
|---|---|---|---|
| COCO | 51.2% | 55.8% | Better small-object recall |
| OpenImages | 46.1% | 49.5% | Less sensitivity to label noise |
| BDD100K | 62.8% | 66.7% | Improved temporal detection |

YOLOv13 consistently outperforms YOLOv12 on both standard and real-world datasets, with notable improvements in night, motion-blur, and dense-object scenes.

Real-World Applications

YOLOv12 excels in:
- Drone object tracking
- Static image analysis
- Lightweight surveillance systems

YOLOv13 brings advantages to:
- Autonomous driving

Introduction

In the era of real-time computer vision, YOLO (You Only Look Once) has revolutionized object detection with its speed, accuracy, and end-to-end simplicity. From surveillance systems to self-driving cars, YOLO models are at the heart of many vision applications today. Whether you're a machine learning engineer, a hobbyist, or part of an enterprise AI team, getting YOLO to perform optimally on your custom dataset is both a science and an art.

In this comprehensive guide, we share the top 5 essential tips for training YOLO models, backed by practical insights, real-world examples, and code snippets that help you fine-tune your training process.

Tip 1: Curate and Structure Your Dataset for Success

1.1 Labeling Quality Matters More Than Quantity

✅ Use tight bounding boxes: make sure your labels align precisely with the object edges.
✅ Avoid label noise: incorrect classes or inconsistent labels confuse your model.
❌ Don't overlabel: avoid drawing boxes for background objects or ambiguous items.

Recommended tools: LabelImg, Roboflow Annotate, CVAT.

1.2 Maintain Class Balance
- Resample underrepresented classes.
- Use class-weighted losses where your training code supports them. (In YOLOv8, the cls hyperparameter scales the overall classification loss rather than weighting individual classes.)
- Augment minority-class images more aggressively.

1.3 Follow the Right Folder Structure

    /dataset/
    ├── images/
    │   ├── train/
    │   ├── val/
    ├── labels/
    │   ├── train/
    │   ├── val/

Each label file should follow this format:

    <class_id> <x_center> <y_center> <width> <height>

The four box values are normalized to the range 0–1 (divided by the image width or height); class_id is an integer class index.

Tip 2: Master the Art of Data Augmentation

The goal isn't more data; it's better variation.
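Because labels are stored as normalized center coordinates, any geometric augmentation has to transform the labels along with the pixels. The Python sketch below assumes pixel boxes in (x_min, y_min, x_max, y_max) form; the helper names are illustrative and not part of any YOLO library. It converts a pixel box into a label line in the 1.3 format, then updates that line for a horizontal flip, where only x_center changes (to 1 - x_center).

```python
def to_yolo_line(class_id: int, box: tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a normalized YOLO label line."""
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def hflip_yolo_line(line: str) -> str:
    """Mirror a YOLO label horizontally: x_center -> 1 - x_center; y, w, h are unchanged."""
    class_id, x_c, y_c, w, h = line.split()
    return f"{class_id} {1 - float(x_c):.6f} {y_c} {w} {h}"


if __name__ == "__main__":
    line = to_yolo_line(0, (100, 50, 300, 250), img_w=640, img_h=480)
    print(line)
    print(hflip_yolo_line(line))
```

Vertical flips work the same way on y_center. Rotations, crops, and mosaics need the full box geometry, which is why built-in pipelines and libraries such as Albumentations handle the label bookkeeping for you.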
2.1 Use Built-in YOLO Augmentations
- Mosaic augmentation
- HSV color-space shift
- Rotation and translation
- Random scaling and cropping
- MixUp (in YOLOv5)

Sample configuration (YOLOv5 data/hyp.scratch.yaml):

    hsv_h: 0.015
    hsv_s: 0.7
    hsv_v: 0.4
    degrees: 0.0
    translate: 0.1
    scale: 0.5
    flipud: 0.0
    fliplr: 0.5

2.2 Custom Augmentation with Albumentations

    import albumentations as A

    # bbox_params makes geometric transforms (such as the flip) update YOLO-format boxes too.
    transform = A.Compose(
        [
            A.HorizontalFlip(p=0.5),
            A.RandomBrightnessContrast(p=0.2),
            # CoarseDropout replaces the deprecated Cutout; its hole-size arguments
            # differ between Albumentations versions, so defaults are used here.
            A.CoarseDropout(p=0.3),
        ],
        bbox_params=A.BboxParams(format="yolo", label_fields=["class_labels"]),
    )

    # Usage: out = transform(image=image, bboxes=bboxes, class_labels=class_labels)

Tip 3: Optimize Hyperparameters Like a Pro

3.1 Learning Rate is King
- YOLOv5: 0.01 (default)
- YOLOv8: 0.001 to 0.01, depending on batch size and optimizer

💡 Tip: Use cosine decay or one-cycle LR scheduling for smoother convergence.

3.2 Batch Size and Image Resolution
- Batch size: the largest your GPU can handle.
- Image size: 640×640 is standard, 416×416 for speed, 1024×1024 for detail.

3.3 Use YOLO's Hyperparameter Evolution

    python train.py --evolve 300 --data coco.yaml --weights yolov5s.pt

Tip 4: Leverage Transfer Learning and Pretrained Models

4.1 Start with Pretrained Weights

YOLOv5: yolov5s.pt, yolov5m.pt, yolov5l.pt, yolov5x.pt

YOLOv8: yolov8n.pt, yolov8s.pt, yolov8m.pt, yolov8l.pt

    yolo task=detect mode=train model=yolov8s.pt data=data.yaml epochs=100 imgsz=640

4.2 Freeze Lower Layers (Fine-Tuning)

    yolo task=detect mode=train model=yolov8s.pt data=data.yaml epochs=50 freeze=10

Tip 5: Monitor, Evaluate, and Iterate Relentlessly

5.1 Key Metrics to Track
- mAP (mean Average Precision)
- Precision & Recall
- Loss curves: box, objectness, and classification loss in YOLOv5 (YOLOv8 reports box, cls, and dfl loss instead)

5.2 Visualize Predictions

    yolo mode=val model=best.pt data=data.yaml save=True

5.3 Use TensorBoard or ClearML

    tensorboard --logdir runs/train

Other tools: ClearML, Weights & Biases, CometML.

5.4 Validate on Real-World Data

Always test under your real deployment conditions: lighting, angles, camera quality, etc.
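Precision and recall in 5.1 depend on how predictions are matched to ground truth. The usual convention in detection evaluation is greedy, score-ordered matching at an IoU threshold (0.5 here, the threshold behind mAP@0.5), where each ground-truth box can be claimed at most once. The minimal sketch below covers a single image and class. It is a teaching aid, not the evaluator used in the YOLO repositories, which also sweep score thresholds and IoU levels to compute mAP.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thresh=0.5):
    """preds: list of (score, box); gts: list of boxes. Single image, single class."""
    matched = set()
    tp = 0
    # Highest-confidence predictions get first pick of the ground-truth boxes.
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, iou_thresh
        for j, gt in enumerate(gts):
            if j in matched:
                continue  # each ground truth can be matched only once
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # unmatched predictions, including duplicates
    fn = len(gts) - tp    # ground truths nobody matched
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0.9, (0, 0, 10, 10)), (0.8, (1, 1, 11, 11)), (0.6, (50, 50, 60, 60))]
    print(precision_recall(preds, gts))
```

In the usage example, the second prediction is a near-duplicate of the first, so it counts as a false positive even though it overlaps an object. This is why duplicate boxes hurt precision and why NMS matters.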
Bonus Tips 🔥

Perform inference-speed optimization, for example by exporting to ONNX:

    yolo export model=best.pt format=onnx

Use smaller models for edge deployment: YOLOv8n or YOLOv5n.

Final Thoughts

Training YOLO is a process that blends good data, thoughtful configuration, and iterative learning. While the default settings may give you decent results, the real magic happens when you:
- Understand your data
- Customize your augmentation and training strategy
- Continuously evaluate and refine

By applying these five tips, you'll not only improve your YOLO model's performance but also accelerate your development workflow with confidence.

Further Resources
- YOLOv5 GitHub
- YOLOv8 GitHub
- Ultralytics Docs
- Roboflow Blog on YOLO

Introduction

In the rapidly evolving world of computer vision, few tasks have garnered as much attention, or driven as much innovation, as object detection and segmentation. From early techniques reliant on hand-crafted features to today's advanced AI models capable of segmenting anything, the journey has been nothing short of revolutionary.

One of the most significant inflection points came with the release of the YOLO (You Only Look Once) family of object detectors, which emphasized real-time performance without significantly compromising accuracy. Fast forward to 2023, and another major breakthrough emerged: Meta AI's Segment Anything Model (SAM). SAM represents a shift toward general-purpose models with zero-shot capabilities, capable of understanding and segmenting arbitrary objects, even ones they have never seen before.

This blog explores the fascinating trajectory of object detection and segmentation, tracing its lineage from YOLO to SAM and uncovering how the field has evolved to meet the growing demands of automation, autonomy, and intelligence.

The Early Days of Object Detection

Before the deep learning renaissance, object detection was a rule-based, computationally expensive process. The classic pipeline involved:
- Feature extraction using techniques like SIFT, HOG, or SURF.
- Region proposal using sliding windows or selective search.
- Classification using traditional machine learning models like SVMs or decision trees.

The lack of end-to-end trainability and the high computational cost meant that these methods were often slow and unreliable in real-world conditions.

Viola-Jones Detector

One of the earliest practical solutions for face detection was the Viola-Jones algorithm. It combined integral images and Haar-like features with a cascade of classifiers, demonstrating high speed for its time. However, it was specialized and not generalizable to other object classes.
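The integral image is what made Viola-Jones fast: after a single pass over the image, the sum of any rectangle (the building block of a Haar-like feature) costs four lookups, regardless of the rectangle's size. The pure-Python sketch below is illustrative; the function names and the two-rectangle feature layout are chosen for the example rather than taken from any particular implementation.

```python
def integral_image(img: list[list[int]]) -> list[list[int]]:
    """ii[y][x] = sum of img[0..y-1][0..x-1]; padded with a leading zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: list[list[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y), in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: list[list[int]], x: int, y: int, w: int, h: int) -> int:
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    ii = integral_image(img)
    print(rect_sum(ii, 1, 1, 2, 2))          # 5 + 6 + 8 + 9
    print(two_rect_feature(ii, 0, 0, 2, 3))  # column 0 minus column 1
```

Because every feature evaluation is constant-time, the detector can afford to test thousands of features per window. The cascade then rejects most background windows after only a few of them.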
Deformable Part Models (DPM)

DPMs introduced some flexibility, treating objects as compositions of parts. While they achieved respectable results on benchmarks like PASCAL VOC, their reliance on hand-crafted features and complex optimization hindered scalability.

The YOLO Revolution

The launch of YOLO in 2016 by Joseph Redmon marked a significant paradigm shift. YOLO introduced an end-to-end neural network that simultaneously performed classification and bounding box regression in a single forward pass.

YOLOv1 (2016)
- Treated detection as a regression problem.
- Divided the image into a grid; each grid cell predicted bounding boxes and class probabilities.
- Achieved real-time speed (~45 FPS) with decent accuracy.
- Drawback: struggled with small objects and with multiple objects close together.

YOLOv2 and YOLOv3 (2017–2018)
- Introduced anchor boxes for better localization.
- Used Darknet-19 (v2) and Darknet-53 (v3) as backbone networks.
- YOLOv3 adopted multi-scale detection, improving accuracy across object sizes.
- Outperformed earlier detectors like Faster R-CNN in speed and began closing the accuracy gap.

YOLOv4 to YOLOv7: Community-Led Progress

After Redmon stepped back from development, the community stepped up.
- YOLOv4 (2020): introduced CSPDarknet, Mish activation, and Bag-of-Freebies/Bag-of-Specials techniques.
- YOLOv5 (2020): though unofficial, Ultralytics' YOLOv5 became popular due to its PyTorch base and plug-and-play usability.
- YOLOv6 and YOLOv7: brought further optimizations, custom backbones, and increased mAP on COCO and VOC.

These iterations significantly narrowed the gap between real-time detectors and their slower, more accurate counterparts.

YOLOv8 to YOLOv12: Toward Modern Architectures
- YOLOv8 (2023): focused on modularity, instance segmentation, and usability.
- YOLOv9 to YOLOv12 (2024–2025): integrated transformers, attention modules, and vision-language understanding, bringing YOLO closer to the capabilities of generalist models like SAM.
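YOLOv2 chose its anchor boxes by clustering the widths and heights of the training boxes with k-means, using 1 - IoU as the distance so that large boxes do not dominate the clustering as they would under Euclidean distance. The pure-Python sketch below illustrates that idea. The deterministic initialization (the first k boxes) and the fixed iteration cap are simplifications for illustration; real implementations typically use random or k-means++ seeding.

```python
def wh_iou(a: tuple[float, float], b: tuple[float, float]) -> float:
    """IoU of two boxes given only (width, height), aligned at a shared center."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(sizes: list[tuple[float, float]], k: int,
                   iters: int = 50) -> list[tuple[float, float]]:
    """Cluster (w, h) pairs with distance d = 1 - IoU, in the spirit of YOLOv2's dimension clusters."""
    centroids = list(sizes[:k])  # simple deterministic init (an assumption)
    for _ in range(iters):
        clusters: list[list[tuple[float, float]]] = [[] for _ in range(k)]
        for s in sizes:
            # Highest IoU == smallest 1 - IoU distance.
            best = max(range(k), key=lambda i: wh_iou(s, centroids[i]))
            clusters[best].append(s)
        new = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new == centroids:
            break  # assignments are stable
        centroids = new
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])


if __name__ == "__main__":
    sizes = [(10, 10), (12, 11), (11, 12), (100, 90), (95, 100), (105, 98)]
    print(kmeans_anchors(sizes, k=2))
```

With the toy box sizes, the two anchors settle on one small and one large shape. On a real dataset you would pass thousands of label sizes, often scaled to the network input resolution, and pick k to trade recall against head complexity.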
Region-Based CNNs: The R-CNN Family

Before YOLO, the dominant framework was R-CNN, developed by Ross Girshick and team.

R-CNN (2014)
- Generated about 2,000 region proposals per image using selective search.
- Fed each region into a CNN (AlexNet) for feature extraction.
- SVMs classified the features; regression refined the bounding boxes.
- Accurate but painfully slow (~47 s/image on a GPU with a VGG16 backbone).

Fast R-CNN (2015)
- Improved speed by running a shared CNN over the whole image.
- Used RoI Pooling to extract fixed-size features from proposals.
- Much faster, but still relied on external region proposal methods.

Faster R-CNN (2015)
- Introduced the Region Proposal Network (RPN).
- Made the whole detector trainable end to end.
- Became the gold standard for accuracy for several years.

Mask R-CNN (2017)
- Extended Faster R-CNN by adding a segmentation branch.
- Enabled instance segmentation.
- Extremely influential and widely adopted in academia and industry.

Anchor-Free Detectors: A New Era

Anchor boxes were a crutch that added complexity. Researchers sought anchor-free approaches to simplify training and improve generalization.

CornerNet and CenterNet
- Predicted object corners or centers directly.
- Reduced computation and improved performance on edge cases.

FCOS (Fully Convolutional One-Stage Object Detection)
- Eliminated anchor boxes and region proposals (it still uses NMS as post-processing).
- Treated detection as a per-pixel prediction problem.
- Inspired newer methods in autonomous driving and robotics.

These models foreshadowed later advances in dense prediction and inspired more flexible segmentation approaches.

The Rise of Vision Transformers

The NLP revolution brought by transformers was soon mirrored in computer vision.

ViT (Vision Transformer)
- Split images into patches and processed them like words in NLP.
- Demonstrated scalability with large datasets.

DETR (DEtection TRansformer)
- End-to-end object detection using transformers.
- No NMS, anchors, or proposals: just direct set prediction.
- Slower but more robust and extensible.
DETR-style query-based decoders have since influenced many segmentation models, including SAM's mask decoder.

Segmentation in Focus: From Mask R-CNN to DeepLab

Semantic vs. Instance vs. Panoptic Segmentation
- Semantic: classifies every pixel (e.g., DeepLab).
- Instance: distinguishes between multiple instances of the same class (e.g., Mask R-CNN).
- Panoptic: combines both (e.g., Panoptic FPN).

DeepLab Family (v1 to v3+)
- Used atrous (dilated) convolutions for better context.
- Excellent semantic segmentation results.
- Often combined with backbone CNNs or transformers.

These approaches excelled in structured environments but lacked generality.

Enter SAM: Segment Anything Model by Meta AI

Released in 2023, SAM (Segment Anything Model) by Meta AI broke new ground.

Zero-Shot Generalization
- Trained on over 1 billion masks across 11 million images.
- Can segment objects from point clicks, bounding boxes, and freeform mask prompts (text prompts were explored in the paper but are not part of the public release).

Architecture
- Based on a ViT backbone.
- Components: an image encoder, a prompt encoder, and a mask decoder.
- The heavy image encoder runs once per image; the lightweight prompt encoder and mask decoder then respond to each new prompt almost instantly.

Key Strengths
- Works out of the box on unseen datasets.
- Produces high-quality masks.
- Excellent at interactive segmentation.

Comparative Analysis: YOLO vs R-CNN vs SAM

| Feature | YOLO | Faster/Mask R-CNN | SAM |
|---|---|---|---|
| Speed | Real-time | Medium to Slow | Medium |
| Accuracy | High | Very High | Extremely High (pixel-level) |
| Segmentation | Only in recent versions | Strong instance segmentation | General-purpose, zero-shot |
| Usability | Easy | Requires tuning | Plug-and-play |
| Applications | Real-time systems | Research & medical | All-purpose |
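The "pixel-level" accuracy credited to SAM in the table is usually measured with mask IoU, which counts overlap in pixels rather than in boxes. The minimal pure-Python sketch below works on binary masks stored as nested lists (a real pipeline would use NumPy arrays, but the arithmetic is the same). It also derives each mask's tight bounding box to show why box-level and pixel-level scores can disagree.

```python
def mask_iou(pred: list[list[int]], gt: list[list[int]]) -> float:
    """IoU of two same-sized binary masks (1 = object pixel, 0 = background)."""
    if len(pred) != len(gt) or any(len(a) != len(b) for a, b in zip(pred, gt)):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for row_p, row_g in zip(pred, gt):
        for p, g in zip(row_p, row_g):
            if p and g:
                inter += 1
            if p or g:
                union += 1
    # Two empty masks agree perfectly; treating that as 1.0 is a common convention.
    return inter / union if union else 1.0


def mask_to_box(mask: list[list[int]]) -> tuple[int, int, int, int]:
    """Tight (x1, y1, x2, y2) box around object pixels; raises ValueError on an empty mask."""
    ys = [y for y, row in enumerate(mask) for v in row if v]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


if __name__ == "__main__":
    diag = [[1, 0], [0, 1]]
    anti = [[0, 1], [1, 0]]
    print(mask_to_box(diag), mask_to_box(anti))  # identical boxes
    print(mask_iou(diag, anti))                  # yet no shared pixels
```

The diagonal example is deliberately extreme: two masks with the same bounding box (box IoU of 1.0) share no pixels at all. That gap is what a box detector like YOLO cannot express and what mask-level models are evaluated on.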

Introduction

In the rapidly evolving world of computer vision, few names resonate as strongly as YOLO, "You Only Look Once." Since its original release, YOLO has seen numerous iterations: from YOLOv1 to v5, v7, and more recently cutting-edge variants like YOLOv8 and YOLO-NAS. Now, another acronym is joining the family: YOLOE.

But what exactly is YOLOE? Is it just another flavor of YOLO for AI enthusiasts to chase? Does it offer anything significantly new, or is it redundant? In this article, we break down what YOLOE is, why it exists, and whether you should pay attention.

The Landscape of YOLO Variants: Why So Many?

Before we dive into YOLOE specifically, it helps to understand why so many YOLO variants exist in the first place. YOLO started as an ultra-fast object detector that could run in real time, even on consumer GPUs. Over time, improvements focused on accuracy, flexibility, and expanding to edge devices (think mobile phones or embedded systems). The rise of transformer models, NAS (Neural Architecture Search), and improved training pipelines led to new branches like:
- YOLOv5 (by Ultralytics): community favorite, easy to use
- YOLOv7: high performance on large benchmarks
- YOLO-NAS: optimized via Neural Architecture Search
- YOLO-World: open-vocabulary detection
- PP-YOLO, YOLOX: alternative backbones and training tweaks

Each new version typically optimizes for speed, accuracy, or deployment flexibility.

Introducing YOLOE: What Is It?

YOLOE stands for "YOLO Efficient," and it is a recent lightweight variant designed with efficiency as a core goal. It was introduced by Baidu's PaddlePaddle team (the authors of PP-YOLOE, released in the open-source PaddleDetection library) and is mainly targeted at edge devices and real-time industrial applications.

Key Characteristics of YOLOE:
- Highly Efficient Architecture: the architecture uses a blend of MobileNetV3-style efficient blocks, or sometimes GhostNet blocks, focusing on fewer parameters and FLOPs (floating point operations).
- Tailored for Edge and IoT: unlike large models like YOLOv7 or YOLO-NAS, YOLOE is intended for devices with limited compute power, such as smartphones, drones, AR/VR headsets, and embedded systems.
- Speed vs Accuracy Balance: typically achieves very high FPS (frames per second) on lower-power hardware with acceptable accuracy, often competitive with YOLOv5n or YOLOv8n.
- Small Model Size: weights are often under 10 MB.

YOLOE vs YOLOv8 / YOLO-NAS / YOLOv7: How Does It Compare?

| Model | Target | Strengths | Weaknesses |
|---|---|---|---|
| YOLOv8 | General purpose, flexible | SOTA accuracy, scalable | Slightly larger |
| YOLO-NAS | High-end servers, optimized | Superior accuracy-speed tradeoff | Requires more compute |
| YOLOv7 | High accuracy for general use | Well-balanced, battle-tested | Larger, complex |
| YOLOE | Edge/IoT devices | Tiny size, super fast, efficient | Lower accuracy ceiling |

Do You Need YOLOE?

When YOLOE makes sense:
✅ You are deploying on microcontrollers, edge AI chips (like RK3399, Jetson Nano), or mobile apps
✅ You need ultra-low-latency detection
✅ You want a tiny model size to fit into limited flash/RAM
✅ You run real-time video streaming on constrained hardware

When YOLOE is not ideal:
❌ You want the highest detection accuracy for research or competition
❌ You are working with large server-based pipelines (YOLOv8 or YOLO-NAS may be better)
❌ You need open-vocabulary or zero-shot detection (look at YOLO-World or DETR-based models)

Conclusion: Another YOLO? Yes, But With a Niche

YOLOE is not meant to "replace" YOLOv8, YOLO-NAS, or other large variants; it fills an important niche for lightweight, efficient deployment. If you're building for mobile, drones, robotics, or smart cameras, YOLOE could be an excellent choice. If you're doing research or high-stakes applications where accuracy trumps latency, you'll likely want one of the larger YOLO variants or a transformer-based model.

In short: YOLOE is not just another YOLO. It is a YOLO for where efficiency really matters.

Introduction: The Rise of Autonomous AI Agents

In 2025, the artificial intelligence landscape has shifted decisively from monolithic language models to autonomous, task-solving AI agents. Unlike traditional models that respond to queries in isolation, AI agents operate persistently, reason about the environment, plan multi-step actions, and interact autonomously with tools, APIs, and users. These models have blurred the lines between "intelligent assistant" and "independent digital worker."

So, what is an AI agent? At its core, an AI agent is a model, or a system of models, capable of perceiving inputs, reasoning over them, and acting in an environment to achieve a goal. Inspired by cognitive science, these agents are often structured around planning, memory, tool usage, and self-reflection.

AI agents are becoming vital across industries:
- In software engineering, agents autonomously write and debug code.
- In enterprise automation, agents optimize workflows, schedule tasks, and interact with databases.
- In healthcare, agents assist doctors by triaging symptoms and suggesting diagnostic steps.
- In research, agents summarize papers, run simulations, and propose experiments.

This blog takes a deep dive into the most important AI agent models as of 2025, examining how they work, where they shine, and what the future holds.

What Sets AI Agents Apart?

A good AI agent isn't just a chatbot. It's an autonomous decision-maker with several cognitive faculties:
- Perception: ability to process multimodal inputs (text, image, video, audio, or code).
- Reasoning: logical deduction, chain-of-thought reasoning, symbolic computation.
- Planning: breaking complex goals into actionable steps.
- Memory: short-term context handling and long-term retrieval augmentation.
- Action: executing steps via APIs, browsers, code, or robotic limbs.
- Learning: adapting via feedback, environment signals, or new data.
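These faculties usually meet in a simple control loop: plan the next step, act through a tool, record the observation in memory, and repeat until the goal is met or a step budget runs out. The Python sketch below shows that loop with a hard-coded planner standing in for the LLM. The tool names (lookup, calculator) and the planner logic are hypothetical placeholders, not any vendor's agent API.

```python
from typing import Callable, Optional

Step = tuple[str, str, str]  # (tool name, tool input, observation)

# Hypothetical tools; a real agent would wrap APIs, a browser, or a code sandbox.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup": lambda key: {"team_size": "12"}.get(key, "unknown"),
    "calculator": lambda expr: str(sum(int(term) for term in expr.split("+"))),
}


def plan_next_step(goal: str, memory: list[Step]) -> Optional[tuple[str, str]]:
    """Hard-coded stand-in for an LLM planner; this stub ignores `goal`.

    A real agent would send the goal plus memory to a model and parse its chosen action.
    """
    if not memory:
        return ("lookup", "team_size")
    if len(memory) == 1:
        return ("calculator", f"{memory[0][2]}+3")
    return None  # planner decides the goal is met


def run_agent(goal: str, max_steps: int = 5) -> list[Step]:
    """Plan -> act -> observe -> remember, bounded by a step budget."""
    memory: list[Step] = []
    for _ in range(max_steps):  # the budget guards against runaway loops
        step = plan_next_step(goal, memory)
        if step is None:
            break
        tool, arg = step
        observation = TOOLS[tool](arg)         # act
        memory.append((tool, arg, observation))  # remember
    return memory


if __name__ == "__main__":
    for tool, arg, obs in run_agent("How big is the team after hiring 3 people?"):
        print(f"{tool}({arg!r}) -> {obs}")
```

Swapping the stub planner for a model call is the only change needed to turn this into a basic tool-using agent. The step budget and the explicit memory list are the parts that keep such loops debuggable.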
Agents may be powered by a single monolithic model (like GPT-4o) or consist of multiple interacting modules: a planner, a retriever, a policy network, and so on. In short, agents are to LLMs what robots are to engines. They embed LLMs into functional shells with autonomy, memory, and tool use.

Top AI Agent Models in 2025

Let's explore the standout AI agent models powering the revolution.

OpenAI's GPT Agents (GPT-4o-based)

OpenAI's GPT-4o introduced a fully multimodal model capable of real-time reasoning across voice, text, images, and video. Combined with the Assistants API, users can instantiate agents with:
- Tool use (browser, code interpreter, database)
- Memory (persistent across sessions)
- Function calling and self-reflection

OpenAI's models also power Auto-GPT-style systems, where GPT-4o is embedded into recursive loops that autonomously plan and execute tasks.

Google DeepMind's Gemini Agents

The Gemini family, especially Gemini 1.5 Pro, excels in planning and memory. DeepMind's vision combines the planning strengths of AlphaZero with the language fluency of PaLM and Gemini. Gemini agents in Google Workspace act as task-level assistants:
- Compose emails, generate documents
- Navigate multiple apps intelligently
- Interact with users via voice or text

Google's language-model planning work also extends to robotics (via RT-2 and SayCan) and to simulated environments like MuJoCo.

Meta's CICERO and Beyond

Meta made waves with CICERO, the first AI agent to reach human-level performance in the board game Diplomacy, which is played through natural-language negotiation. In 2025, successors to CICERO apply social reasoning in:
- Multi-agent environments (games, simulations)
- Strategic planning (negotiation, bidding, alignment)
- Alignment research (theory of mind, deception detection)

Meta's open-source tools like AgentCraft are used to build agents that reason about social intent, useful in HR bots, tutors, and economic simulations.
Anthropic's Claude Agent Models

Claude 3 models are known for their robust alignment, long context (up to 200K tokens), and chain-of-thought precision. Claude agents focus on:
- Enterprise automation (workflows, legal review)
- High-stakes environments (compliance, safety)
- Multi-step problem-solving

Anthropic's strong safety emphasis makes Claude agents well suited to sensitive domains.

DeepMind's Gato & Gemini Evolution

Originally released in 2022, Gato was a generalist agent trained on text, images, and control tasks. In 2025, Gato's successors are part of Gemini Evolution, handling:
- Embodied robotics tasks
- Real-world simulations
- Game environments (Minecraft, StarCraft II)

Gato-like models are embedded in agents that plan physical actions and adapt to real-time environments, which is critical in smart home devices and autonomous vehicles.

Mistral/Mixtral Agents

Mistral's models, including its Mixture-of-Experts model Mixtral, have been open-sourced, enabling developers to run powerful agent models locally. These agents are favored for:
- On-device use (privacy, speed)
- Custom agent loops with LangChain or AutoGen
- Decentralized agent networks

Strength: open-source, highly modular, cost-efficient.

Hugging Face Transformers + Autonomy Stack

Hugging Face provides tools like Transformers Agents and LangChain integration, and its ecosystem includes quantization libraries such as auto-gptq; together these let users build agents from any open LLM (like LLaMA, Falcon, or Mistral). Popular features:
- Tool use via LangChain tools or Hugging Face endpoints
- Fine-tuned agents for niche tasks (biomedicine, legal, etc.)
- Local deployment and custom training

xAI's Grok Agents

Elon Musk's xAI developed Grok, a witty and internet-savvy agent integrated into X (formerly Twitter). In 2025, Grok agents power:
- Social media management
- Meme generation
- Opinion summarization

Though often dismissed as humorous, Grok agents are pushing boundaries in personality, satire, and dynamic opinion reasoning.
Cohere’s Command R+ Agents
Cohere’s Command R+ is optimized for retrieval-augmented generation (RAG) and enterprise search. Its agents excel at:
Customer support automation
Document Q&A
Legal search and research
Command R agents are known for their factuality and search integration.

AgentVerse, AutoGen, and LangGraph Ecosystems
Frameworks like Microsoft AutoGen, AgentVerse, and LangGraph enable agent orchestration:
Multi-agent collaboration (debate, voting, task division)
Memory persistence
Workflow integration
These frameworks are often used to wrap top models (e.g., GPT-4o, Claude 3) into agent collectives that cooperate to solve large problems.

Model Architecture Comparison
As AI agents evolve, so do the ways they’re built. Behind every capable AI agent lies a carefully designed architecture that balances modularity, efficiency, and adaptability. In 2025, most leading agents follow one of two design philosophies:

Monolithic Agents (All-in-One Models)
These agents rely on a single large model to perform perception, reasoning, and action planning.
Examples: GPT-4o by OpenAI, Claude 3 by Anthropic, Gemini 1.5 Pro by Google
Strengths:
Simple deployment
Fast response times (no orchestration overhead)
Ideal for short tasks or chatbot-like interactions
Limitations:
Limited long-term memory and persistence
Hard to scale across distributed environments
Less control over intermediate reasoning steps

Modular Agents (Multi-Component Systems)
These agents are built from multiple subsystems:
Planner: Determines multi-step goals
Retriever: Gathers relevant information or

Introduction
In the fast-paced world of computer vision, object detection remains a fundamental task. From autonomous vehicles to security surveillance and healthcare, many applications need to identify and localize objects in images. One architecture family that has consistently pushed the boundaries of real-time object detection is YOLO (You Only Look Once).

YOLOv12 is the latest and most advanced iteration in the YOLO family. Building on the strengths of its predecessors, YOLOv12 delivers outstanding speed and accuracy, making it well suited to both research and industrial applications. Whether you’re a total beginner or an AI practitioner looking to sharpen your skills, this guide will walk you through the essentials of YOLOv12, from installation and training to advanced fine-tuning techniques.

We’ll start with the basics: What is YOLOv12? Why is it important? And how does it differ from previous versions?

What Makes YOLOv12 Unique?
YOLOv12 introduces a range of improvements that distinguish it from YOLOv8, YOLOv7, and earlier versions.

Key Features:
Modular Transformer-based Backbone: Leverages the Swin Transformer for hierarchical feature extraction.
Dynamic Head Module: Improves context awareness for better detection accuracy in complex scenes.
RepOptimizer: An optimizer that improves convergence rates.
Cross-Stage Partial Networks v3 (CSPv3): Reduces model complexity while maintaining performance.
Scalable Architecture: Supports deployment from edge devices to cloud servers.

YOLOv12 vs YOLOv8:
Feature | YOLOv8 | YOLOv12
Backbone | CSPDarknet53 | Swin Transformer v2
Optimizer | AdamW | RepOptimizer
Performance | High | Higher
Speed | Very Fast | Faster
Deployment Options | Edge, Web | Edge, Web, Cloud

Installing YOLOv12: Getting Started
Getting started with YOLOv12 is straightforward, especially with its open-source repository and detailed documentation. Follow these steps to set up YOLOv12 on your local machine.
Step 1: System Requirements
Python 3.8+
PyTorch 2.x
CUDA 11.8+ (for GPU)
OpenCV, torchvision

Step 2: Clone the YOLOv12 Repository
git clone https://github.com/WongKinYiu/YOLOv12.git
cd YOLOv12

Step 3: Create a Virtual Environment
python -m venv yolov12-env
Linux/macOS: source yolov12-env/bin/activate
Windows: yolov12-env\Scripts\activate

Step 4: Install Dependencies
pip install -r requirements.txt

Step 5: Download Pretrained Weights
YOLOv12 provides pretrained weights that you can use as a starting point for transfer learning:
wget https://github.com/WongKinYiu/YOLOv12/releases/download/v1.0/yolov12.pt

Understanding YOLOv12 Architecture
YOLOv12 is engineered to balance accuracy and speed through its novel architecture.

Components:
Backbone (Swin Transformer v2): Processes input images and extracts features.
Neck (PANet + BiFPN): Aggregates features at different scales.
Head (Dynamic Head): Predicts object classes and bounding boxes.
Each component is customizable, making YOLOv12 suitable for a wide range of use cases.

Innovations:
Transformer Integration: Brings stronger attention mechanisms.
RepOptimizer: Trains models in fewer iterations.
Flexible Input Resolution: You can train on 640×640 or 1280×1280 images without major modifications.

Preparing Your Dataset
Before you can train YOLOv12, you need a properly labeled dataset. YOLOv12 uses the YOLO format: one .txt label file per image, with one line per object giving its class and bounding box.

Step-by-Step Data Preparation:

A. Dataset Structure:
dataset/
  images/
    train/
      img1.jpg
      img2.jpg
    val/
      img1.jpg
      img2.jpg
  labels/
    train/
      img1.txt
      img2.txt
    val/
      img1.txt
      img2.txt

B. YOLO Label Format:
Each line of a label file describes one object:
class_id x_center y_center width height
The class_id is a zero-based integer index into the names list in data.yaml. The four box values are normalized to the range 0 to 1 by the image width and height. For example:
0 0.5 0.5 0.2 0.3
describes an object of class 0 centered in the image, spanning 20% of the image width and 30% of its height.

C. Tools to Create Annotations:
Roboflow: Drag-and-drop interface to label images and export them in YOLO format.
LabelImg: Free, open-source tool with a simple UI.
CVAT: Great for large datasets and team collaboration.

D. Creating data.yaml:
This YAML file is required for training and should look like this:
train: ./dataset/images/train
val: ./dataset/images/val
nc: 3
names: ['car', 'person', 'bicycle']

Training YOLOv12 on a Custom Dataset
Now that your dataset is ready, let’s move on to training.

A. Training Script
YOLOv12 uses a training script similar to previous versions:
python train.py --data data.yaml --cfg yolov12.yaml --weights yolov12.pt --epochs 100 --batch-size 16 --img 640

B. Key Parameters Explained:
--data: Path to data.yaml.
--cfg: YOLOv12 model configuration.
--weights: Starting weights (use '' to train from scratch).
--epochs: Number of complete passes over the training data.
--batch-size: Number of images per batch.
--img: Input image size in pixels (e.g., 640 for 640×640).

C. Monitor Training
YOLOv12 integrates with:
TensorBoard: tensorboard --logdir runs/train
Weights & Biases (wandb): Logs loss curves, precision, recall, and more.

D. Training Tips:
Use a GPU if available; it reduces training time significantly.
Start with fewer epochs (~50) to test quickly, then increase.
Adjust the batch size to fit your GPU memory.

E. Saving Checkpoints:
By default, YOLOv12 saves model weights every epoch to runs/train/exp/weights/.

Evaluating and Tuning the Model
Once training is done, it’s time to evaluate your model.

A. Evaluation Metrics:
Precision: The fraction of predicted boxes that are correct detections.
Recall: The fraction of ground-truth objects that the model detects.
mAP (mean Average Precision): Average precision (the area under the precision-recall curve) averaged over classes, commonly reported at an IoU threshold of 0.5 (mAP@0.5) and averaged over IoU thresholds from 0.5 to 0.95 (mAP@0.5:0.95).
YOLOv12 automatically generates a report after training: results.png

B. Command to Evaluate:
python val.py --weights runs/train/exp/weights/best.pt --data data.yaml --img 640

C. Tuning for Better Accuracy:
Augmentations: Enable mixup, mosaic, and HSV shifts.
Learning Rate: Lower it if training is unstable.
Anchor Optimization: YOLOv12 can auto-calculate optimal anchors for your dataset.

Real-Time Inference with YOLOv12
YOLOv12 shines in real-time applications. Here’s how to run inference on images, videos, and webcam feeds.

A. Inference on Images:
python detect.py --weights best.pt --source data/images/test.jpg --img 640

B. Inference on Videos:
python detect.py --weights best.pt --source video.mp4

C. Live Inference via Webcam:
python detect.py --weights best.pt --source 0

D. Output:
Detected objects are saved in runs/detect/exp/. The script draws bounding boxes and labels on the images.

E. Confidence Threshold:
Add --conf 0.4 to set the minimum confidence a detection needs to be kept. Raising the threshold removes more low-confidence (often false) detections; lowering it makes the detector more sensitive but admits more false positives.

Advanced Features and Expert Tweaks
YOLOv12 is powerful out of the box, but fine-tuning can unlock even more potential.

A. Custom Backbone:
Switch to MobileNet or EfficientNet for edge deployment by modifying yolov12.yaml.

B. Hyperparameter Evolution:
YOLOv12 includes an automated evolution script:
python evolve.py --data data.yaml --img 640 --epochs 50

C. Quantization:
Apply post-training quantization (INT8/FP16) with TensorRT, ONNX, or OpenVINO.

D. Multi-GPU Training:
python -m torch.distributed.launch --nproc_per_node 2 train.py …

E. Exporting the Model:
python export.py --weights best.pt --include onnx torchscript

YOLOv12 Use Cases in Real Life
Here are popular use cases where YOLOv12 is being deployed:

A. Autonomous Vehicles
Detects pedestrians, cars, and road signs in real time at high FPS.

B. Smart Surveillance
Recognizes weapons, intruders, and suspicious behaviors with minimal delay.
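The confidence threshold set with --conf is only the first half of the usual post-processing: most YOLO-style detectors also apply non-maximum suppression (NMS), which removes duplicate boxes predicted for the same object by measuring their overlap as intersection over union (IoU). The sketch below shows both steps in plain Python. It is a generic, class-agnostic illustration, not the code inside YOLOv12’s detect.py, and it assumes boxes are given as (x1, y1, x2, y2) pixel coordinates, with an IoU threshold of 0.45 chosen only for the example.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def postprocess(boxes: List[Box], scores: List[float],
                conf: float = 0.4, iou_threshold: float = 0.45) -> List[int]:
    """Return indices of boxes kept after confidence filtering and greedy NMS."""
    # 1) Confidence threshold: drop weak predictions.
    candidates = [i for i, s in enumerate(scores) if s >= conf]
    # 2) Visit the remaining boxes from most to least confident.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in candidates:
        # 3) Keep a box only if it does not overlap an already-kept box too much.
        if all(iou(boxes[i], boxes[j]) < iou_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140), (0, 0, 20, 20)]
scores = [0.9, 0.8, 0.6, 0.3]
print(postprocess(boxes, scores))  # [0, 2]
```

Here box 1 is suppressed because it overlaps box 0 with an IoU of about 0.82, and box 3 is dropped by the 0.4 confidence threshold. Production code usually runs NMS per class and vectorizes it, for example with torchvision.ops.nms.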