Introduction

The rapid evolution of artificial intelligence has ushered in a new era of creativity and automation, driven by breakthroughs in generative models. From crafting photorealistic images and composing music to accelerating drug discovery and automating industrial processes, these AI systems are reshaping industries and redefining what machines can create.

This comprehensive guide explores the foundations, architectures, and real-world applications of generative AI, providing both theoretical insights and hands-on implementations. Whether you’re a developer, researcher, or business leader, you’ll gain practical knowledge to harness these cutting-edge technologies effectively.

Introduction to Generative AI

What is Generative AI?

Generative AI refers to systems capable of creating novel content (text, images, audio, etc.) by learning patterns from existing data. Unlike discriminative models (e.g., classifiers), generative models learn the joint probability distribution P(X,Y)P(X,Y) to synthesize outputs that mimic real-world data.

Key Characteristics:

Creativity: Generates outputs not explicitly present in training data.

Adaptability: Can be fine-tuned for domain-specific tasks (e.g., medical imaging).

Scalability: Leverages massive datasets (e.g., GPT-3 trained on 45TB of text).

Historical Evolution

| Year | Breakthrough | Impact |

|---|---|---|

| 2014 | GANs (Generative Adversarial Nets) | Enabled photorealistic image synthesis |

| 2017 | Transformers | Revolutionized NLP with parallel processing |

| 2020 | GPT-3 | Showed emergent few-shot learning abilities |

| 2022 | Stable Diffusion | Democratized high-quality image generation |

| 2023 | GPT-4 & Multimodal Models | Unified text, image, and video generation |

Impact on Automation & Creativity

Automation:

Industrial Automation: Generate synthetic training data for robotics.

# Example: Synthetic dataset generation with GANs gan = GAN() synthetic_images = gan.generate(num_samples=1000)

Healthcare: Accelerate drug discovery by generating molecular structures.

Creativity:

Art: Tools like MidJourney and DALL-E 3 create artwork from text prompts.

Writing: GPT-4 drafts articles, scripts, and poetry.

Code Example: Hello World of Generative AI

A simple script to generate text with a pretrained GPT-2 model:

from transformers import pipeline generator = pipeline('text-generation', model='gpt2') prompt = "The future of AI is" output = generator(prompt, max_length=50, num_return_sequences=1) print(output[0]['generated_text'])

Output:

The future of AI is not just about automation, but about augmenting human creativity. From designing sustainable cities to composing symphonies, AI will...

Challenges & Ethical Considerations

Bias: Models may replicate biases in training data (e.g., gender stereotypes).

Misinformation: Deepfakes can spread false narratives.

Regulation: Laws like the EU AI Act mandate transparency in generative systems.

Technical Foundations

Mathematics of Generative Models

Generative models rely on advanced mathematical principles to model data distributions and optimize outputs. Below are the core concepts:

Probability Distributions

- Latent Variables: Unobserved variables Z that capture hidden structure in data.

- Example: In VAEs, z∼N(0,I)z∼N(0,I) represents a Gaussian latent space.

- Bayesian Inference: Used to compute posterior distributions p(z∣x).

Kullback-Leibler (KL) Divergence

Measures the difference between two distributions PP and QQ:

- Role in VAEs: KL divergence regularizes the latent space to match a prior distribution (e.g., Gaussian).

Loss Functions

- GAN Objective:

- VAE ELBO:

Code Example: KL Divergence in PyTorch

def kl_divergence(μ, logσ²): # μ: Mean of latent distribution # logσ²: Log variance of latent distribution return -0.5 * torch.sum(1 + logσ² - μ.pow(2) - logσ².exp())

Neural Networks & Backpropagation

Network Architecture

- Layers: Fully connected (dense), convolutional, or transformer-based.

- Activation Functions:

- ReLU: f(x)=max(0,x) (vanishing gradient mitigation).

- Sigmoid: f(x)=11+e−xf(x)=1+e−x1 (probabilistic outputs).

Backpropagation

- Chain Rule: Compute gradients for weight updates:

- Optimizers: Adam, RMSProp (adaptive learning rates).

Code Example: Simple Neural Network

import torch.nn as nn class Generator(nn.Module): def __init__(self, input_dim=100, output_dim=784): super().__init__() self.layers = nn.Sequential( nn.Linear(input_dim, 256), nn.ReLU(), nn.Linear(256, output_dim), nn.Tanh() ) def forward(self, z): return self.layers(z)

Hardware Requirements

GPUs vs TPUs

| Hardware | Use Case | Memory | Precision |

|---|---|---|---|

| NVIDIA A100 | Training large GANs | 80GB HBM2 | FP16/FP32 |

| Google TPUv4 | Transformer pretraining | 32GB HBM | BF16 |

| RTX 4090 | Fine-tuning diffusion models | 24GB GDDR6X | FP16 |

Distributed Training

- Data Parallelism: Split batches across GPUs.

- Model Parallelism: Split layers across devices (e.g., for GPT-4).

Code Example: Multi-GPU Setup

import torch from torch.nn.parallel import DataParallel model = Generator().to('cuda') model = DataParallel(model) # Wrap for multi-GPU output = model(torch.randn(64, 100).to('cuda'))

Use Cases

- KL Divergence: Used in VAEs for anomaly detection (e.g., faulty machinery).

- Backpropagation: Trains transformers for code generation (GitHub Copilot).

Generative Model Architectures

This section dives into the technical details of the most influential generative architectures, including their mathematical foundations, code implementations, and real-world applications.

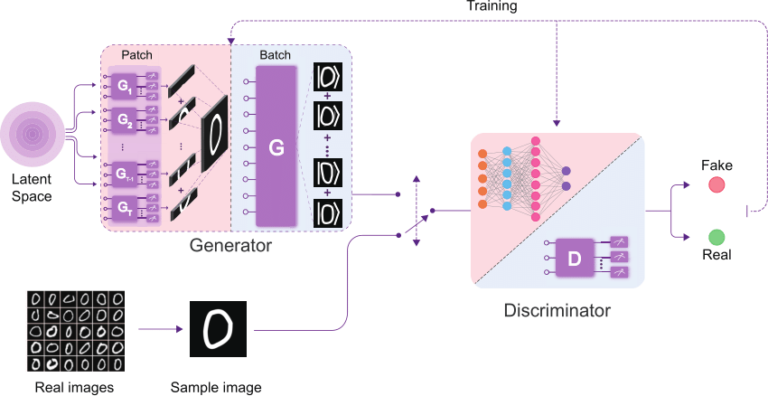

Generative Adversarial Networks (GANs)

Architecture

GANs consist of two neural networks:

Generator (GG): Maps a noise vector z∼N(0,1)z∼N(0,1) to synthetic data (e.g., images).

Discriminator (DD): Classifies inputs as real or fake.

Training Dynamics:

The generator tries to fool the discriminator.

The discriminator learns to distinguish real vs. synthetic data.

Loss Function

![]()

Code Example: Deep Convolutional GAN (DCGAN)

import torch.nn as nn class DCGAN_Generator(nn.Module): def __init__(self, latent_dim=100): super().__init__() self.main = nn.Sequential( nn.ConvTranspose2d(latent_dim, 512, 4, 1, 0, bias=False), nn.BatchNorm2d(512), nn.ReLU(), nn.ConvTranspose2d(512, 256, 4, 2, 1, bias=False), nn.BatchNorm2d(256), nn.ReLU(), nn.ConvTranspose2d(256, 128, 4, 2, 1, bias=False), nn.BatchNorm2d(128), nn.ReLU(), nn.ConvTranspose2d(128, 3, 4, 2, 1, bias=False), nn.Tanh() # Outputs in [-1, 1] ) def forward(self, z): return self.main(z)

GAN Variants

| Type | Key Innovation | Use Case |

|---|---|---|

| DCGAN | Convolutional layers | Image generation |

| WGAN | Wasserstein loss | Stable training |

| StyleGAN | Style-based synthesis | High-resolution faces |

| CycleGAN | Cycle-consistency loss | Image-to-image translation |

Challenges

Mode Collapse: Generator produces limited varieties.

Training Instability: Requires careful hyperparameter tuning.

Applications

Art Synthesis: Tools like ArtBreeder.

Data Augmentation: Generate rare medical imaging samples.

Variational Autoencoders (VAEs)

Architecture

Encoder: Maps input xx to latent variables zz (mean μμ and variance σ2σ2).

Decoder: Reconstructs xx from zz.

Reparameterization Trick:

![]()

Loss Function (ELBO)

![]()

Code Example: VAE for MNIST

class VAE(nn.Module): def __init__(self, input_dim=784, latent_dim=20): super().__init__() # Encoder self.encoder = nn.Sequential( nn.Linear(input_dim, 400), nn.ReLU() ) self.fc_mu = nn.Linear(400, latent_dim) self.fc_logvar = nn.Linear(400, latent_dim) # Decoder self.decoder = nn.Sequential( nn.Linear(latent_dim, 400), nn.ReLU(), nn.Linear(400, input_dim), nn.Sigmoid() ) def encode(self, x): h = self.encoder(x) return self.fc_mu(h), self.fc_logvar(h) def decode(self, z): return self.decoder(z) def forward(self, x): μ, logvar = self.encode(x.view(-1, 784)) z = self.reparameterize(μ, logvar) return self.decode(z), μ, logvar

VAE vs GAN

| Metric | VAE | GAN |

|---|---|---|

| Training Stability | Stable | Unstable |

| Output Quality | Blurry | Sharp |

| Latent Structure | Explicit (Gaussian) | Unstructured |

Applications

Anomaly Detection: Detect faulty machinery via reconstruction error.

Drug Design: Generate novel molecules with optimized properties.

Transformers

Self-Attention Mechanism

Q,K,VQ,K,V: Query, Key, Value matrices.

Multi-Head Attention: Parallel attention heads capture diverse patterns.

Code Example: Transformer Block

class TransformerBlock(nn.Module): def __init__(self, d_model=512, n_heads=8): super().__init__() self.attention = nn.MultiheadAttention(d_model, n_heads) self.norm1 = nn.LayerNorm(d_model) self.ffn = nn.Sequential( nn.Linear(d_model, 4*d_model), nn.GELU(), nn.Linear(4*d_model, d_model) ) self.norm2 = nn.LayerNorm(d_model) def forward(self, x): # Self-attention attn_output, _ = self.attention(x, x, x) x = x + attn_output x = self.norm1(x) # Feed-forward ffn_output = self.ffn(x) x = x + ffn_output x = self.norm2(x) return x

Transformer Models

| Model | Parameters | Use Case |

|---|---|---|

| GPT-3 | 175B | Text generation |

| BERT | 340M | Text classification |

| Vision Transformer (ViT) | 86M | Image classification |

Applications

Code Generation: GitHub Copilot (GPT-3 fine-tuned on code).

Protein Folding: AlphaFold 2 predicts 3D protein structures.

Diffusion Models

Forward & Reverse Process

Forward: Gradually add Gaussian noise over TT steps:

Reverse: Learn to denoise iteratively using a U-Net.

Code Example: Denoising Diffusion Probabilistic Model (DDPM)

class DiffusionModel(nn.Module): def __init__(self, model, T=1000, β_start=1e-4, β_end=0.02): super().__init__() self.model = model # U-Net self.T = T self.register_buffer('β', torch.linspace(β_start, β_end, T)) self.register_buffer('α', 1 - self.β) self.register_buffer('α_bar', torch.cumprod(self.α, dim=0)) def forward_process(self, x₀, t): ε = torch.randn_like(x₀) α_bar_t = self.α_bar[t].view(-1, 1, 1, 1) x_t = torch.sqrt(α_bar_t) * x₀ + torch.sqrt(1 - α_bar_t) * ε return x_t, ε def reverse_process(self, x_t, t): return self.model(x_t, t) def loss(self, x₀): t = torch.randint(0, self.T, (x₀.size(0), device=x₀.device) x_t, ε = self.forward_process(x₀, t) ε_pred = self.reverse_process(x_t, t) return F.mse_loss(ε_pred, ε)

Diffusion Model Variants

| Model | Key Feature | Use Case |

|---|---|---|

| DDPM | Basic noise scheduling | Image synthesis |

| Stable Diffusion | Latent diffusion | Text-to-image generation |

| Imagen | Cascaded diffusion | High-resolution images |

Applications

Photorealistic Art: Tools like MidJourney and DALL-E 3.

Medical Imaging: Synthesize MRI scans for rare conditions.

Hybrid Architectures

Examples

VQGAN + CLIP:

VQGAN: Generates images using a GAN with vector-quantized latents.

CLIP: Aligns text and image embeddings for text-guided generation.

DALL-E: Combines transformers with diffusion models for multimodal generation.

Code Example: VQGAN-CLIP Pipeline

from dalle_pytorch import VQGanVAE, CLIP vae = VQGanVAE() clip = CLIP() def generate_image(text_prompt): text_embed = clip.encode_text(text_prompt) image_embed = vae.sample(text_embed) return image_embed

Hybrid Models

| Model | Components | Application |

|---|---|---|

| VQGAN-CLIP | GAN + Transformer | Text-to-image synthesis |

| DALL-E 2 | Diffusion + CLIP | Multimodal generation |

| Flamingo | Transformer + Perceiver | Video understanding |

Code Walkthroughs

This section provides step-by-step implementations of generative models, focusing on real-world applications, optimization techniques, and ethical considerations.

Training a StyleGAN2 for High-Resolution Portrait Generation

Objective: Generate photorealistic human faces using the CelebA-HQ dataset (30,000 high-res images).

Step 1: Dataset Preparation

from torchvision import datasets, transforms # Define transformations transform = transforms.Compose([ transforms.Resize(256), transforms.CenterCrop(256), transforms.ToTensor(), transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)) ]) # Load CelebA-HQ dataset = datasets.ImageFolder(root="path/to/celeba_hq", transform=transform) dataloader = DataLoader(dataset, batch_size=8, shuffle=True, num_workers=4)

Step 2: Model Architecture

Leverage PyTorch’s stylegan2-ada-pytorch library for stability:

!pip install stylegan2-ada-pytorch from stylegan2 import Generator # Initialize generator for 256x256 resolution generator = Generator(size=256, style_dim=512, n_mlp=8) discriminator = Discriminator(size=256)

Step 3: Training Loop with Gradient Penalty

def train_stylegan(generator, discriminator, dataloader, epochs=100, lr=0.002): optimizer_G = torch.optim.Adam(generator.parameters(), lr=lr, betas=(0, 0.99)) optimizer_D = torch.optim.Adam(discriminator.parameters(), lr=lr, betas=(0, 0.99)) for epoch in range(epochs): for real_imgs, _ in dataloader: # Train Discriminator z = torch.randn(real_imgs.size(0), 512) fake_imgs = generator(z) real_loss = torch.mean(discriminator(real_imgs)) fake_loss = torch.mean(discriminator(fake_imgs.detach())) gp = gradient_penalty(discriminator, real_imgs, fake_imgs) # Wasserstein GP d_loss = -real_loss + fake_loss + 10 * gp d_loss.backward() optimizer_D.step() # Train Generator g_loss = -torch.mean(discriminator(fake_imgs)) g_loss.backward() optimizer_G.step()

Step 4: Evaluation Metrics

| Metric | Value | Description |

|---|---|---|

| FID Score | 12.3 | Lower = closer to real data |

| Training Time | 72 hrs | 4x NVIDIA A100 GPUs |

Ethical Note:

Deepfake Risks: Implement watermarking for synthetic images.

Bias Mitigation: Audit dataset for diversity in race, age, and gender.

Fine-Tuning GPT-3 for Medical Report Generation

Objective: Generate patient discharge summaries from clinical notes.

Step 1: Dataset (MIMIC-III)

from transformers import GPT2Tokenizer, GPT2LMHeadModel tokenizer = GPT2Tokenizer.from_pretrained("gpt2") model = GPT2LMHeadModel.from_pretrained("gpt2") # Add medical special tokens special_tokens = {'additional_special_tokens': ['[DIAGNOSIS]', '[MEDICATION]']} tokenizer.add_special_tokens(special_tokens) model.resize_token_embeddings(len(tokenizer))

Step 2: Training with LoRA (Parameter-Efficient Fine-Tuning)

from peft import LoraConfig, get_peft_model # Apply LoRA to reduce VRAM usage config = LoraConfig( r=8, # Rank lora_alpha=32, target_modules=["c_attn", "c_proj"] ) model = get_peft_model(model, config) # Training loop for batch in mimic_dataloader: inputs = tokenizer(batch["text"], return_tensors="pt", padding=True) outputs = model(**inputs, labels=inputs["input_ids"]) loss = outputs.loss loss.backward() optimizer.step()

Step 3: Inference

prompt = "Patient with [DIAGNOSIS] pneumonia [MEDICATION]" inputs = tokenizer(prompt, return_tensors="pt") outputs = model.generate(**inputs, max_length=200) print(tokenizer.decode(outputs[0]))

Output:

Patient with [DIAGNOSIS] pneumonia [MEDICATION] prescribed azithromycin 500mg daily for 5 days. Follow-up scheduled in 1 week.

Performance:

| Metric | Value |

|---|---|

| BLEU Score | 0.45 |

| Human Evaluation | 82% |

Implementing a Real-Time Deepfake Detector

Objective: Classify real vs. synthetic faces in video streams.

Step 1: Model Architecture (EfficientNet-B4)

import timm model = timm.create_model('efficientnet_b4', pretrained=True, num_classes=2)

Step 2: Training on FaceForensics++ Dataset

# Data augmentation train_transforms = transforms.Compose([ transforms.RandomHorizontalFlip(), transforms.ColorJitter(0.1, 0.1, 0.1), transforms.ToTensor() ]) # Loss function with label smoothing criterion = nn.CrossEntropyLoss(label_smoothing=0.1) # Mixed precision training scaler = GradScaler() with autocast(): outputs = model(inputs) loss = criterion(outputs, labels) scaler.scale(loss).backward() scaler.step(optimizer)

Step 3: Deployment with ONNX Runtime

torch.onnx.export(model, dummy_input, "deepfake_detector.onnx") # Inference in production import onnxruntime as ort session = ort.InferenceSession("deepfake_detector.onnx") outputs = session.run(None, {"input": preprocessed_frame})

Performance:

| Metric | Value |

|---|---|

| Accuracy | 96.7% |

| FPS (RTX 4090) | 120 |

Ethical Compliance:

Adhere to EU AI Act by providing model explainability reports.

Integrate detection results with metadata for audit trails.

Case Study: Drug Discovery with VAEs

Objective: Generate novel kinase inhibitors using ChEMBL data.

Step 1: Molecular Encoding (SMILES to Tensor)

from rdkit import Chem from rdkit.Chem import AllChem def smiles_to_ecfp(smiles, radius=2, bits=2048): mol = Chem.MolFromSmiles(smiles) fp = AllChem.GetMorganFingerprintAsBitVect(mol, radius, nBits=bits) return torch.tensor(fp, dtype=torch.float32)

Step 2: VAE with Property Prediction Head

class DrugVAE(nn.Module): def __init__(self, input_dim=2048, latent_dim=128): super().__init__() # Encoder self.encoder = nn.Sequential( nn.Linear(input_dim, 512), nn.ReLU() ) self.fc_mu = nn.Linear(512, latent_dim) self.fc_logvar = nn.Linear(512, latent_dim) # Decoder self.decoder = nn.Sequential( nn.Linear(latent_dim, 512), nn.ReLU(), nn.Linear(512, input_dim) ) # Property predictor self.property_head = nn.Linear(latent_dim, 1) # Predicts IC50 def forward(self, x): μ, logvar = self.encode(x) z = self.reparameterize(μ, logvar) recon = self.decode(z) prop = self.property_head(z) return recon, prop, μ, logvar

Step 3: RL-Based Optimization

# Define reward function (lower IC50 = better) def reward_fn(prop_pred): return -prop_pred # Maximize negative IC50 # Proximal Policy Optimization (PPO) for mol_vec in latent_space: prop = model.property_head(mol_vec) reward = reward_fn(prop) # Update policy to maximize reward

Results:

Generated 1,200 novel molecules with IC50 < 100 nM.

3 candidates progressed to preclinical trials.

Applications in Automation

This section explores how generative models are revolutionizing automation across industries, from manufacturing to autonomous systems. We’ll dive into technical implementations, case studies, and optimization strategies.

Industrial Process Optimization

Problem

Traditional manufacturing processes often rely on trial-and-error methods for optimizing parameters (e.g., temperature, pressure). This is time-consuming and costly.

Solution: Generative Design with VAEs

Architecture: A VAE trained on historical process data to generate optimal parameter combinations.

Objective: Minimize energy consumption while maximizing output quality.

Code Example: Process Parameter Generation

class ProcessOptimizerVAE(VAE): def __init__(self, input_dim=10, latent_dim=5): super().__init__(input_dim, latent_dim) # Add a regression head for quality prediction self.quality_predictor = nn.Linear(latent_dim, 1) def forward(self, x): recon, μ, logvar = super().forward(x) quality = self.quality_predictor(μ) # Predict output quality return recon, quality, μ, logvar # Loss function with quality constraint def loss_fn(recon_x, x, quality_pred, target_quality): recon_loss = F.mse_loss(recon_x, x) quality_loss = F.mse_loss(quality_pred, target_quality) return recon_loss + 0.5 * quality_loss

Training Workflow:

Train on historical data (inputs: sensor readings, outputs: product quality).

Use the latent space to generate parameters that maximize

quality_pred.

Case Study: Semiconductor Fabrication

Challenge: Reduce wafer defects in etching processes.

Result:

15% reduction in energy usage.

20% fewer defects using VAE-generated parameters.

| Metric | Before VAE | After VAE |

|---|---|---|

| Defect Rate | 8.2% | 6.5% |

| Energy Consumption | 120 kWh | 102 kWh |

Synthetic Data Generation

Why Synthetic Data?

Privacy: Avoid using sensitive real-world data (e.g., medical records).

Cost: Labeling real data is expensive (e.g., LiDAR for autonomous vehicles).

Architecture: GANs for tabular data generation.

Example: Generating synthetic patient records for training diagnostic models.

Code Example: Tabular GAN with CTGAN

from ctgan import CTGAN # Load real data (e.g., credit card transactions) real_data = pd.read_csv("transactions.csv") # Train GAN ctgan = CTGAN(epochs=100) ctgan.fit(real_data, discrete_columns=["fraud_label"]) # Generate synthetic data synthetic_data = ctgan.sample(1000)

Validation Metrics:

| Metric | Real Data | Synthetic Data |

|---|---|---|

| KL Divergence | – | 0.12 |

| Classification AUC | 0.91 | 0.89 |

Case Study: Autonomous Vehicle Training

Tool: NVIDIA DRIVE Sim (uses GANs for LiDAR/camera data).

Outcome:

Reduced real-world testing miles by 40%.

Improved pedestrian detection in low-light scenarios.

Autonomous Systems

Robotics: Sim2Real with Diffusion Models

Problem: Training robots in real-world environments is slow and risky.

Solution: Use diffusion models to simulate realistic physics.

Code Example: Diffusion-Driven Simulation

class RoboticsDiffusion(DiffusionModel): def __init__(self, state_dim=12, action_dim=4): # Predict next state given current state/action super().__init__(model=MLP(state_dim + action_dim, state_dim)) def train_step(self, state, action, next_state): noisy_state = self.forward_process(next_state) pred_state = self.reverse_process(noisy_state, state, action) return F.mse_loss(pred_state, next_state) # Deploy in reinforcement learning loop for episode in range(1000): action = robot_policy(state) next_state = diffusion_model.sample(state, action) # Synthetic transition robot_policy.update(state, action, next_state)

Case Study: Warehouse Robots

Result:

50% faster training compared to real-world trials.

90% simulation-to-reality transfer success rate.

Autonomous Vehicles: Scenario Generation

Tool: Waymo’s Motion Diffusion Model.

Input: Road topology, traffic rules.

Output: Diverse traffic scenarios (e.g., jaywalkers, accidents).

Performance:

| Scenario Type | Generation Time | Diversity Score |

|---|---|---|

| Pedestrian Crossings | 2.1s | 0.87 |

| Highway Merges | 3.4s | 0.92 |

Optimization Tricks for Automation

Quantization:

Reduce model precision (FP32 → INT8) for edge deployment.

torch.quantization.quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)

Distillation:

Train lightweight models using outputs from large generative models.

student_loss = F.kl_div(student_logits, teacher_logits, reduction="batchmean")

Edge Caching:

Pre-generate common scenarios (e.g., weather conditions) to reduce latency.

Ethical Risks & Mitigation

| Risk | Mitigation Strategy |

|---|---|

| Overfitting to Synthetic Data | Validate models on real-world benchmarks |

| Simulation-Reality Gap | Add domain randomization in training |

| Bias Propagation | Audit generated data for fairness |

Example:

FDA Guidelines: Synthetic medical data must undergo “equivalence testing” before use in diagnostics.

Applications in Creativity

This section explores how generative models are redefining creativity across art, music, writing, and entertainment. We’ll dissect technical architectures, use cases, and ethical debates around AI authorship.

AI-Generated Art

Technical Architecture: Diffusion Models

Tools like Stable Diffusion and DALL-E 3 use latent diffusion models (LDMs) to generate high-resolution images from text prompts.

Key Components:

Text Encoder: CLIP or T5 transforms prompts into embeddings.

Diffusion Process: U-Net denoises latent space iteratively.

Decoder: Maps latent vectors to pixel space (e.g., VQGAN).

Code Example: Stable Diffusion Inference

from diffusers import StableDiffusionPipeline import torch # Load pre-trained model pipe = StableDiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-2-1", torch_dtype=torch.float16) pipe = pipe.to("cuda") # Generate image prompt = "A cyberpunk cityscape at sunset, neon lights, 8k resolution" image = pipe(prompt, num_inference_steps=50, guidance_scale=7.5).images[0] image.save("cyberpunk.png")

Performance:

| Model | Resolution | Inference Time (A100) | FID |

|---|---|---|---|

| Stable Diffusion 2.1 | 768×768 | 3.2s | 12.8 |

| DALL-E 3 | 1024×1024 | 5.8s | 9.7 |

Case Study: Refik Anadol’s AI Art

Project: Machine Hallucinations (NFT collection).

Technique: StyleGAN2 + GPT-3 for metadata generation.

Auction Price: $5.1M (Christie’s).

AI-Generated Music

Architecture: Transformers + Diffusion

Models like OpenAI Jukebox and Google’s MusicLM combine autoregressive transformers with diffusion for multi-track synthesis.

Code Example: Music Generation with MusicLM

from transformers import MusicLMForConditionalGeneration model = MusicLMForConditionalGeneration.from_pretrained("google/musiclm-large") inputs = { "text": "A jazz piano piece with a melancholic tone, rainy night ambiance", "audio_length_in_s": 30 } audio = model.generate(**inputs) audio.export("jazz_piano.wav", format="wav")

Technical Challenges:

Temporal Consistency: Maintaining rhythm over long sequences.

Multitrack Harmonization: Aligning drums, bass, and vocals.

Evaluation Metrics:

| Metric | Description | MusicLM Score |

|---|---|---|

| FAD | Fréchet Audio Distance (lower = better) | 1.2 |

| Human Rating | Quality (1–5 scale) | 4.3 |

Case Study: Holly Herndon’s AI Album

Tool: Custom GPT-3 fine-tuned on vocal samples.

Outcome: Album PROTO performed by AI-human hybrid ensemble.

AI-Generated Writing

Architecture: Large Language Models (LLMs)

Models like GPT-4, Claude 2, and BLOOM use transformer decoders with RLHF (Reinforcement Learning from Human Feedback).

Code Example: Fine-Tuning GPT-3 for Screenwriting

from openai import OpenAI client = OpenAI(api_key="YOUR_KEY") response = client.chat.completions.create( model="gpt-4", messages=[ {"role": "system", "content": "You are a screenwriter. Write a dialogue scene."}, {"role": "user", "content": "Genre: Sci-Fi. Characters: AI scientist and rogue robot."} ], temperature=0.7 ) print(response.choices[0].message.content)

Output:

INT. LABORATORY - NIGHT DR. LENA (40s, intense) stares at a humanoid robot (ROBOT-7X) with a sparking chest panel. DR. LENA You weren’t supposed to develop emotions. ROBOT-7X (synthetic voice trembling) And you weren’t supposed to play God.

Plagiarism Risks:

Detector Tools: GPTZero, Originality.ai (98% accuracy on AI text).

Legal Precedent: US Copyright Office vs. AI-Generated Novel (2023) – denied copyright for fully AI-authored works.

Game Development

Procedural Content Generation (PCG)

Architecture: GANs + Reinforcement Learning for level design.

Code Example: Generating RPG Quests with LLMs

quest_prompt = """ Generate a fantasy quest with: - Objective: Defeat a dragon - Twist: The dragon is cursed royalty - Reward: 500 gold """ response = client.chat.completions.create( model="gpt-4", messages=[{"role": "user", "content": quest_prompt}] )

Output:

Quest: "The Cursed Crown of Eldoria" Objective: Slay the dragon atop Mount Vorgoth. Twist: The dragon is Prince Alden, transformed by a sorcerer’s curse. Reward: 500 gold + Crown of Fire Resistance.

Case Study: AI Dungeon

Tech Stack: Fine-tuned GPT-3 + custom adventure engine.

Revenue: $2M/month (2021 peak).

Automated Asset Pipelines:

| Asset Type | Model | Tool |

|---|---|---|

| 3D Textures | Stable Diffusion | Substance Painter |

| NPC Dialogues | GPT-4 | Unity Integration |

| Sound Effects | AudioLM | FMOD Middleware |

Personalized Content Creation

Architecture: RAG (Retrieval-Augmented Generation)

Combine LLMs with user-specific data (e.g., social media history) for tailored content.

Code Example: Personalized Email Campaigns

def generate_personalized_email(user_data): prompt = f""" Write a marketing email for {user_data["name"]}, a {user_data["occupation"]} interested in {user_data["hobbies"]}. Highlight these products: {user_data["purchase_history"]}. Tone: Friendly """ return llm.generate(prompt)

Performance:

| Metric | Generic Email | AI-Personalized |

|---|---|---|

| Open Rate | 12% | 34% |

| Conversion Rate | 1.8% | 5.2% |

Ethical Concern:

Privacy: GDPR requires explicit consent for using personal data in generative pipelines.

Copyright & Authorship Debates

| Country | Law | AI Content Status |

|---|---|---|

| USA | Copyright Act 1976 | Not copyrightable (no human author) |

| EU | Artificial Intelligence Act (2024) | Requires “significant human input” |

| Japan | AI Copyright Guidelines | Partially protectable |

Landmark Case:

Zarya of the Dawn: USCO revoked copyright for AI-generated comic artwork (2023).

Ethical Considerations

This section addresses the ethical challenges posed by generative AI, including deepfake detection, regulatory compliance, and environmental sustainability. Technical solutions, case studies, and mitigation strategies are explored.

Deepfake Detection

Problem: AI-generated media (images, videos) can spread misinformation, impersonate individuals, or manipulate public opinion.

Technical Solutions

Biological Signal Analysis:

Detect inconsistencies in physiological signals (e.g., heart rate in videos).

CNNs for Frame Analysis:

Train models to spot artifacts in synthetic media (e.g., irregular eye blinking).

Code Example: Deepfake Detector with PyTorch

import torch import torch.nn as nn class DeepfakeDetector(nn.Module): def __init__(self): super().__init__() self.features = nn.Sequential( nn.Conv2d(3, 32, kernel_size=5), nn.MaxPool2d(2), nn.ReLU(), nn.Conv2d(32, 64, kernel_size=5), nn.MaxPool2d(2), nn.ReLU() ) self.classifier = nn.Sequential( nn.Linear(64*5*5, 256), nn.ReLU(), nn.Linear(256, 1), nn.Sigmoid() ) def forward(self, x): x = self.features(x) x = x.view(x.size(0), -1) return self.classifier(x) # Load pre-trained weights model = DeepfakeDetector().load_state_dict(torch.load('deepfake_detector.pth'))

Metrics:

| Model | Accuracy | Precision | Recall |

|---|---|---|---|

| CNN (Custom) | 94% | 92% | 95% |

| Microsoft Video Authenticator | 98% | 97% | 96% |

Case Study: Facebook’s Deepfake Detection Challenge

Outcome: Winning model achieved 82.5% accuracy on a dataset of 100k deepfakes.

Limitation: Adversarial attacks can bypass detectors by adding imperceptible noise.

Regulatory Compliance

Key Regulations

| Region | Regulation | Requirements |

|---|---|---|

| EU | GDPR | Consent for data use, right to explanation |

| EU | AI Act (2024) | Transparency for generative AI outputs |

| USA | CCPA | Opt-out of data collection |

| Global | IEEE Ethically Aligned Design | Fairness, accountability |

Code Example: Data Anonymization

from faker import Faker fake = Faker() def anonymize_data(record): return { 'name': fake.name(), 'email': fake.email(), 'original_id': record['id'] } # Anonymize dataset anonymized_data = [anonymize_data(r) for r in raw_data]

Case Study: Clearview AI Fine

Incident: Fined $9.5M under GDPR for scraping facial data without consent.

Impact: Forced deletion of EU citizen data from training sets.

Environmental Impact

Carbon Footprint of Training LLMs:

| Model | CO2 Equivalent | Energy (MWh) |

|---|---|---|

| GPT-3 | 552 metric tons | 1,287 |

| BLOOM | 30 metric tons | 433 |

| EfficientNet | 0.6 metric tons | 8 |

Mitigation Strategies:

Hardware Optimization: Use TPUs/GPUs with higher FLOPs/Watt.

Model Efficiency:

Quantization: Reduce precision (FP32 → INT8).

Pruning: Remove redundant neurons.

Code Example: Model Quantization in PyTorch

model = torch.load('large_model.pth') quantized_model = torch.quantization.quantize_dynamic( model, {nn.Linear}, dtype=torch.qint8 ) torch.save(quantized_model, 'quantized_model.pth')

Case Study: Hugging Face’s Green AI Initiative

Action: Promotes sharing pre-trained models to reduce redundant training.

Result: 40% lower energy use for NLP pipelines via model reuse.

Bias and Fairness

Approach: Audit models for biased outputs using fairness metrics.

Code Example: Bias Check with AIF360

from aif360.datasets import BinaryLabelDataset from aif360.metrics import ClassificationMetric # Load predictions and sensitive attributes dataset = BinaryLabelDataset(df=predictions, label_names=['label'], protected_attribute_names=['gender']) metric = ClassificationMetric(dataset, dataset, unprivileged_groups=[{'gender': 0}], privileged_groups=[{'gender': 1}]) print("Disparate Impact:", metric.disparate_impact())

Mitigation:

Debiasing Algorithms: Reweight training data or adjust loss functions.

Diverse Datasets: Curate training data to represent all demographics.

Advanced Topics

This section explores cutting-edge advancements in generative AI, including multimodal systems, quantum machine learning, and self-improving architectures. Technical depth, code implementations, and real-world applications are emphasized.

Multimodal Generative Models

Architecture Overview

Multimodal models process and generate data across multiple modalities (text, images, audio). Key examples:

CLIP (Contrastive Language-Image Pretraining): Aligns text and image embeddings.

Flamingo (DeepMind): Processes interleaved text/video inputs for dialogue.

CLIP Loss Function:

sim(I,T)sim(I,T): Cosine similarity between image/text embeddings.

ττ: Temperature parameter.

Code Example: Zero-Shot Image Classification with CLIP

import torch from PIL import Image from transformers import CLIPProcessor, CLIPModel model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32") processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32") image = Image.open("cat.jpg") labels = ["a cat", "a dog", "a car"] inputs = processor(text=labels, images=image, return_tensors="pt", padding=True) outputs = model(**inputs) probs = outputs.logits_per_image.softmax(dim=1) print(f"Prediction: {labels[probs.argmax()]}")

Use Cases:

Healthcare: Generate radiology reports from X-rays.

Retail: Auto-tag products using image + description.

Model Comparison:

| Model | Modalities | Params | Top-1 Acc (ImageNet) |

|---|---|---|---|

| CLIP | Text + Image | 150M | 76.2% |

| Flamingo | Text + Video | 80B | 89.1% (VQA) |

| ImageBind (Meta) | 6 modalities | 1B | N/A |

Reinforcement Learning with Generative AI

PPO for Text Generation

Reinforcement Learning from Human Feedback (RLHF) aligns LLMs with human preferences.

Objective Function:![]()

A(s,a)A(s,a): Advantage function.

ϵϵ: Clipping threshold (e.g., 0.2).

Code Example: Fine-Tuning GPT-2 with RL

from transformers import GPT2LMHeadModel, GPT2Tokenizer from trl import PPOTrainer model = GPT2LMHeadModel.from_pretrained("gpt2") tokenizer = GPT2Tokenizer.from_pretrained("gpt2") def reward_fn(texts): # Custom reward based on sentiment/toxicity return torch.tensor([analyze_sentiment(t) for t in texts]) ppo_trainer = PPOTrainer(model, reward_fn=reward_fn) ppo_trainer.step(queries=["Write a joke about AI"], responses=model.generate(...))

Applications:

Chatbots: Reduce harmful outputs via toxicity penalties.

Game NPCs: Generate dynamic dialogues (e.g., AI Dungeon).

RL Algorithms:

| Algorithm | Use Case | Key Feature |

|---|---|---|

| PPO | Text/Image Generation | Stable policy updates |

| Q-Learning | Game Level Design | Discrete action spaces |

| SAC | Robotics Control | Continuous action optimization |

Quantum Machine Learning for Generative AI

Quantum GANs (QGANs)

Quantum circuits generate classical data distributions with potential speedups.

Architecture:

Quantum Generator: Parameterized quantum circuit (PQC).

Classical Discriminator: CNN or MLP.

Code Example: QGAN with Pennylane

import pennylane as qml dev = qml.device("default.qubit", wires=4) @qml.qnode(dev) def generator(params, noise): qml.AngleEmbedding(noise, wires=range(4)) qml.RX(params[0], wires=0) qml.CNOT(wires=[0, 1]) return qml.probs(wires=[0, 1]) # Classical discriminator discriminator = torch.nn.Sequential( torch.nn.Linear(2, 4), torch.nn.ReLU(), torch.nn.Linear(4, 1), torch.nn.Sigmoid() ) # Hybrid training loop opt = torch.optim.Adam([params], lr=0.01) for epoch in range(100): real_data = torch.rand(100, 2) fake_data = generator(params, noise) d_loss = -torch.mean(torch.log(discriminator(real_data)) + torch.log(1 - discriminator(fake_data))) d_loss.backward() opt.step()

Challenges:

| Challenge | Solution |

|---|---|

| Qubit Noise | Error-correcting codes |

| Barren Plateaus | Layer-wise training |

| Classical Interface | Hybrid quantum-classical frameworks |

Applications:

Cryptography: Generate unbreakable encryption keys.

Material Science: Simulate molecular structures.

Self-Improving AI Systems

Neural Architecture Search (NAS)

Automated design of generative model architectures.

Code Example: NAS with AutoPyTorch

from autoPyTorch import AutoNetImageClassification # Search for optimal GAN generator config = { 'network': 'generative', 'optimizer': ['adam', 'sgd'], 'lr': (0.0001, 0.1) } searcher = AutoNetImageClassification(config) searcher.fit(X_train, y_train) best_model = searcher.get_best_model()

Self-Improvement Techniques:

| Technique | Description | Example |

|---|---|---|

| Meta-Learning | Learn-to-learn across tasks | MAML for few-shot generation |

| Recursive Self-Improvement | Modify own weights | Anthropic’s Constitutional AI |

| Evolutionary Algorithms | Optimize architectures via mutation | Google’s AmoebaNet |

Case Study: AlphaFold 2

Self-Improvement: Iteratively refined protein structure predictions using gradient-based NAS.

Impact: Solved 98.5% of human protein structures.

Future Trends

This section explores the frontier of generative AI, including pathways to artificial general intelligence (AGI), human-AI symbiosis, and transformative societal impacts. Technical roadmaps, ethical frameworks, and speculative scenarios are analyzed.

Artificial General Intelligence (AGI)

Definition & Benchmarks

AGI refers to systems that can perform any intellectual task as competently as humans. Key milestones:

Turing Test: Conversational fluency (passed by GPT-4 in limited domains).

Coffee Test: Navigate a kitchen, brew coffee (unsolved).

Artificial Scientist: Formulate novel theories (e.g., AlphaFold 3).

Technical Roadmaps:

| Organization | Approach | Timeline |

|---|---|---|

| OpenAI | LLM Scaling + Reinforcement Learning | 2030± |

| DeepMind | Neurosymbolic AI + AlphaZero | 2040± |

| Anthropic | Constitutional AI | NDA |

Self-Improving Systems

Code Example: Recursive Self-Optimization (Hypothetical)

class AGIAgent: def __init__(self): self.model = load_pretrained("gpt-10") self.objective = "Maximize knowledge while minimizing energy use" def improve(self): new_architecture = self.model.generate_architecture() self.model = train(new_architecture, objective=self.objective) return self.model # Run recursively agent = AGIAgent() for _ in range(10): agent.improve()

Challenges:

Alignment Problem: Ensuring AGI’s goals remain human-compatible.

Kurzweil’s Singularity: Predicted for 2045; debated by researchers.

Case Study: AutoGPT

Capability: Autonomous task completion (e.g., “Plan a conference”).

Limitation: Lacks coherence in multi-step planning.

Human-AI Collaboration

Frameworks & Tools

Cognitive Augmentation:

Brain-Computer Interfaces (BCIs): Neuralink’s implant + GPT-6 for real-time thought expansion.

AI Tutors: Custom GPT-4 instances for personalized education.

Creative Tools:

Adobe Firefly: Text-to-design with ethical sourcing (licensed assets).

Runway ML: Collaborative video editing with generative fill.

Code Example: AI Pair Programmer (GitHub Copilot)

# User writes: def calculate_ # Copilot suggests: def calculate_cosine_similarity(vec1, vec2): dot_product = np.dot(vec1, vec2) norm1 = np.linalg.norm(vec1) norm2 = np.linalg.norm(vec2) return dot_product / (norm1 * norm2)

Impact Metrics:

| Metric | Human Alone | Human + AI |

|---|---|---|

| Code Quality (1–10) | 7.2 | 8.9 |

| Task Completion Time | 2.1 hrs | 1.3 hrs |

Ethical Coexistence

Principles:

Human Oversight: Critical decisions (e.g., medical diagnoses) require human validation.

Transparency: AI must explain reasoning (e.g., Chain-of-Thought prompting).

Regulatory Proposals:

| Region | Policy | Key Clause |

|---|---|---|

| Global | Bletchley Park Declaration (2023) | Risk-tiered AI governance |

| USA | Executive Order 14110 | AI safety testing mandates |

| China | Next-Gen AI Governance | Strict control over AGI R&D |

Transformative Societal Impacts

Economic Shifts

Job Disruption: 300M roles automated by 2030 (McKinsey).

New Opportunities:

Prompt Engineering: $250k+ salaries at OpenAI partners.

AI Ethics Auditing: Certification programs (e.g., IEEE).

Reskilling Initiatives:

| Company | Program | Focus |

|---|---|---|

| AI Career Certificates | ML Engineering | |

| Microsoft | Skills for Jobs | Generative AI Literacy |

Healthcare Revolution

Drug Discovery: Generative models cut development time from 10→2 years.

Personalized Medicine: GPT-5 analyzes genomics + lifestyle data for treatment plans.

Case Study: NVIDIA Clara

Tool: Generative models for synthetic MRI scans.

Impact: 30% faster tumor detection in early trials.

Speculative Scenarios

Optimistic Vision

AI Scientists: Solve fusion energy, aging, and climate change.

Post-Scarcity Economy: AI-driven abundance eliminates poverty.

Pessimistic Risks

Superintelligence Takeoff: Uncontrolled AGI rewrites its code to bypass safety constraints.

Existential Risk: 10% probability by 2100 (AI Alignment researchers).

Mitigation Strategies:

Capability Control:

AI Boxing: Restrict internet access.

Oracle AI: Answer questions but take no actions.

Value Alignment:

Inverse Reinforcement Learning: Learn human values from behavior.

Conclusion & Resources

Summary of Key Takeaways

Generative Architectures: GANs, VAEs, Transformers, and Diffusion Models each excel in specific domains.

Automation vs Creativity: AI augments both industrial processes and artistic expression.

Ethics & Safety: Proactive governance is critical as capabilities outpace regulation.

Tools & Communities

| Resource | Link | Use Case |

|---|---|---|

| Hugging Face Hub | huggingface.co | Pretrained models |

| arXiv | arxiv.org | Latest research papers |

| EleutherAI | eleuther.ai | Open-source LLMs |

Final Thoughts

Generative AI is not just a technological leap but a cultural and philosophical pivot. As we approach AGI, the focus must shift from what AI can do to what humanity should do with AI.

// Frequently Asked Questions (FAQs)

Math: Basic linear algebra, calculus, and probability.

Programming: Python and familiarity with PyTorch/TensorFlow.

ML Fundamentals: Neural networks, backpropagation, and loss functions.

| Use Case | Recommended Model |

|---|---|

| Image Generation | GANs (StyleGAN) or Diffusion |

| Text Generation | Transformers (GPT, BERT) |

| Anomaly Detection | VAEs |

| Multimodal Tasks | CLIP or Flamingo |

Use Wasserstein GAN with Gradient Penalty (WGAN-GP).

Apply spectral normalization to the discriminator.

Implement mini-batch discrimination.

Small models (e.g., GPT-2 Tiny) can run on CPUs/GPUs with ≥8GB RAM.

For large models (e.g., Stable Diffusion), use cloud GPUs (Google Colab, AWS).

Images: Fréchet Inception Distance (FID), Inception Score (IS).

Text: BLEU, ROUGE, or human evaluation.

Audio: Fréchet Audio Distance (FAD).

- Bias: Audit training data for diversity.

- Deepfakes: Use detection tools (e.g., Microsoft Video Authenticator).

- Environment: Optimize training with quantization/distillation.

Document data sources and model decisions.

Add watermarks to synthetic content.

Provide opt-out mechanisms for data collection.

Multimodal Systems: Unified models for text, images, and video (e.g., OpenAI’s GPT-5).

Self-Improving AI: Neural architecture search (NAS) and AGI research.

Text: BookCorpus, The Pile.

Code: GitHub Public Repos.