Introduction

Data annotation is often described as the “easy part” of artificial intelligence. Draw a box, label an image, tag a sentence, done. In reality, data annotation is one of the most underestimated, labor-intensive, and intellectually demanding stages of any AI system. Many modern AI failures can be traced not to weak models, but to weak or inconsistent annotation.

This article explores why data annotation is far more complex than it appears, what makes it so critical, and how real-world experience exposes its hidden challenges.

1. Annotation Is Not Mechanical Work

At first glance, annotation looks like repetitive manual labor. In practice, every annotation is a decision.

Even simple tasks raise difficult questions:

Where exactly does an object begin and end?

Is this object partially occluded or fully visible?

Does this text express sarcasm or literal meaning?

Is this medical structure normal or pathological?

These decisions require context, judgment, and often domain knowledge. Two annotators can look at the same data and produce different “correct” answers, both defensible and both problematic for model training.

2. Ambiguity Is the Default, Not the Exception

Real-world data is messy by nature. Images are blurry, audio is noisy, language is vague, and human behavior rarely fits clean categories.

Annotation guidelines attempt to reduce ambiguity, but they can never eliminate it. Edge cases appear constantly:

Is a pedestrian behind glass still a pedestrian?

Does a cracked bone count as fractured or intact?

Is a social media post hate speech or quoted hate speech?

Every edge case forces annotators to interpret intent, context, and consequences, something no checkbox can fully capture.

3. Quality Depends on Consistency, Not Just Accuracy

A single correct annotation is not enough. Models learn patterns across millions of examples, which means consistency matters more than individual brilliance.

Problems arise when:

Guidelines are interpreted differently across teams

Multiple vendors annotate the same dataset

Annotation rules evolve mid-project

Cultural or linguistic differences affect judgment

Inconsistent annotation introduces noise that models quietly absorb, leading to unpredictable behavior in production. The model does not know which annotator was “right”. It only knows patterns.

3. Quality Depends on Consistency, Not Just Accuracy

A single correct annotation is not enough. Models learn patterns across millions of examples, which means consistency matters more than individual brilliance.

Problems arise when:

Guidelines are interpreted differently across teams

Multiple vendors annotate the same dataset

Annotation rules evolve mid-project

Cultural or linguistic differences affect judgment

Inconsistent annotation introduces noise that models quietly absorb, leading to unpredictable behavior in production. The model does not know which annotator was “right”. It only knows patterns.

5. Scale Introduces New Problems

As annotation projects grow, complexity compounds:

Thousands of annotators

Millions of samples

Tight deadlines

Continuous dataset updates

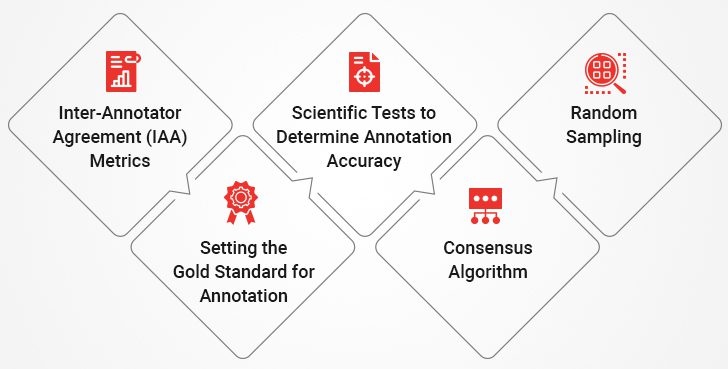

Maintaining quality at scale requires audits, consensus scoring, gold standards, retraining, and constant feedback loops. Without this infrastructure, annotation quality degrades silently while costs continue to rise.

6. The Human Cost Is Often Ignored

Annotation is cognitively demanding and, in some cases, emotionally exhausting. Content moderation, medical data, accident footage, or sensitive text can take a real psychological toll.

Yet annotation work is frequently undervalued, underpaid, and invisible. This leads to high turnover, rushed decisions, and reduced quality, directly impacting AI performance.

7. A Real Experience from the Field

“At the beginning, I thought annotation was just drawing boxes,” says Ahmed, a data annotator who worked on a medical imaging project for over two years.

“After the first week, I realized every image was an argument. Radiologists disagreed with each other. Guidelines changed. What was ‘correct’ on Monday was ‘wrong’ by Friday.”

He explains that the hardest part was not speed, but confidence.

“You’re constantly asking yourself: am I helping the model learn the right thing, or am I baking in confusion? When mistakes show up months later in model evaluation, you don’t even know which annotation caused it.”

For Ahmed, annotation stopped being a task and became a responsibility.

“Once you understand that models trust your labels blindly, you stop calling it simple work.”

8. Why This Matters More Than Ever

As AI systems move into healthcare, transportation, education, and governance, annotation quality becomes a foundation issue. Bigger models cannot compensate for unclear or biased labels. More data does not fix inconsistent data.

The industry’s focus on model size and architecture often distracts from a basic truth:

AI systems are only as good as the data they are taught to trust.

Conclusion

Data annotation is not a preliminary step. It is core infrastructure. It demands judgment, consistency, domain expertise, and human care. Calling it “simple” minimizes the complexity of real-world data and the people who shape it.

The next time an AI system fails in an unexpected way, the answer may not be in the model at all, but in the labels it learned from.

Lorem ipsum dolor sit amet, consectetur adipiscing elit. Ut elit tellus, luctus nec ullamcorper mattis, pulvinar dapibus leo.