Introduction

When people hear “AI-powered driving,” many instinctively think of Large Language Models (LLMs). After all, LLMs can write essays, generate code, and argue philosophy at 2 a.m. But putting a car safely through a busy intersection is a very different problem.

Waymo, Alphabet’s autonomous driving company (it began as Google’s self-driving car project), tackles a problem far beyond the scope of LLMs. Its vehicles rely on a deeply integrated robotics and AI stack that combines sensors, real-time perception, probabilistic reasoning, and control systems, all of which must work reliably in the physical world, where mistakes are measured in metal, not tokens.

In short: Waymo doesn’t talk its way through traffic. It computes its way through it.

The Big Picture: The Waymo Autonomous Driving Stack

Waymo’s system can be understood as a layered pipeline:

Sensing the world

Perceiving and understanding the environment

Predicting what will happen next

Planning safe and legal actions

Controlling the vehicle in real time

Each layer is specialized: deterministic where it must be, probabilistic where it has to be, and engineered for safety rather than conversation.
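The following toy Python sketch shows how data flows through the five layers. Each layer is a function, and each function feeds the next once per cycle. Every part of it is a hypothetical simplification: it works in one dimension, uses a hard-coded sensor stub, predicts with constant velocity, and controls with a simple proportional term. It is not Waymo’s architecture or API. It only shows how the stages hand off to one another.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical, heavily simplified stages. Everything is one-dimensional:
# positions are meters along the lane, with our vehicle at 0.0 right now.


@dataclass
class Obstacle:
    distance_m: float  # how far ahead the object is
    speed_mps: float   # its speed along the lane (same direction as us)


@dataclass
class Command:
    accel_mps2: float  # positive = accelerate, negative = brake


def sense() -> List[float]:
    """Sensing: stand-in for raw, noisy range returns to the object ahead."""
    return [30.2, 29.8, 30.0]


def perceive(ranges: List[float]) -> Obstacle:
    """Perception: collapse noisy returns into one object estimate.

    The speed is hard-coded here; a real tracker would estimate it over time.
    """
    return Obstacle(distance_m=sum(ranges) / len(ranges), speed_mps=5.0)


def predict(obstacle: Obstacle, horizon_s: float) -> float:
    """Prediction: constant-velocity guess of the object's position after horizon_s."""
    return obstacle.distance_m + obstacle.speed_mps * horizon_s


def plan(predicted_m: float, speed_limit_mps: float, horizon_s: float,
         min_gap_m: float = 10.0) -> float:
    """Planning: fastest legal speed that still leaves min_gap_m at the horizon,
    assuming we hold that speed for the whole horizon."""
    safe_speed = (predicted_m - min_gap_m) / horizon_s
    return max(0.0, min(speed_limit_mps, safe_speed))


def control(target_mps: float, current_mps: float, gain: float = 0.5) -> Command:
    """Control: proportional correction toward the planned speed."""
    return Command(accel_mps2=gain * (target_mps - current_mps))


def drive_one_cycle(current_mps: float, speed_limit_mps: float,
                    horizon_s: float = 3.0) -> Command:
    ranges = sense()
    obstacle = perceive(ranges)
    predicted = predict(obstacle, horizon_s)
    target = plan(predicted, speed_limit_mps, horizon_s)
    return control(target, current_mps)


if __name__ == "__main__":
    print(drive_one_cycle(current_mps=10.0, speed_limit_mps=13.4))
```

In this example the car is moving at 10 m/s under a 13.4 m/s (about 30 mph) limit. The planner caps its speed at roughly 11.7 m/s so that the gap at the 3 s horizon stays at 10 m. The controller then requests a gentle acceleration of about 0.83 m/s².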

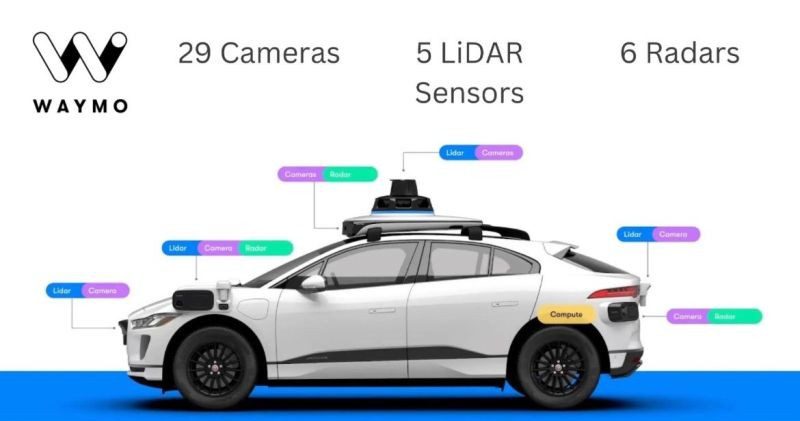

1. Sensors: Seeing More Than Humans Can

Waymo vehicles are packed with redundant, high-resolution sensors. This is the foundation of everything.

Key Sensor Types

LiDAR: Measures distance by timing reflected laser pulses, building a precise 3D map of the environment. Essential for depth and shape understanding.

Cameras: Capture color, texture, traffic lights, signs, and human gestures.

Radar: Robust against rain, fog, and dust; excellent for measuring object velocity directly via the Doppler effect.

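Audio sensors and IMUs: Microphones help detect sounds such as emergency-vehicle sirens, while inertial measurement units (IMUs) track the vehicle’s own acceleration and rotation.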

Unlike humans, Waymo vehicles see 360 degrees, day and night, without blinking or getting distracted by billboards.
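The LiDAR and radar bullets above rest on two textbook physics relations: time-of-flight ranging and the Doppler shift. The sketch below computes both. The 77 GHz carrier default is an assumption based on a common automotive radar band, not a published Waymo specification. The function names are illustrative only.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT_MPS = 299_792_458.0


def lidar_range_m(round_trip_s: float) -> float:
    """Time of flight: the pulse goes out and back, so halve the path length."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def lidar_point(round_trip_s: float, azimuth_rad: float,
                elevation_rad: float) -> Tuple[float, float, float]:
    """Convert one return (range plus beam angles) to x, y, z in the sensor frame."""
    r = lidar_range_m(round_trip_s)
    horizontal = r * math.cos(elevation_rad)  # projection onto the ground plane
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            r * math.sin(elevation_rad))


def radar_radial_speed_mps(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Doppler: speed along the beam (positive shift = approaching target)."""
    return doppler_shift_hz * SPEED_OF_LIGHT_MPS / (2.0 * carrier_hz)


if __name__ == "__main__":
    print(lidar_range_m(200e-9))                       # about 30 m
    print(lidar_point(200e-9, math.radians(90), 0.0))  # point straight to the left
    print(radar_radial_speed_mps(5_000.0))             # about 9.7 m/s
```

Millions of such points per second, each tagged with its beam angles, form the 3D point cloud that perception works from.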

2. Perception: Turning Raw Data Into Reality

Sensors alone are just noisy streams of data. Perception is where AI earns its keep.

What Perception Does

Detects objects: cars, pedestrians, cyclists, animals, cones

Classifies them: vehicle type, posture, motion intent

Tracks them over time in 3D space

Understands road geometry: lanes, curbs, intersections

This layer relies heavily on computer vision, sensor fusion, and deep neural networks, trained on millions of real-world and simulated scenarios.

Importantly, this is not text-based reasoning. It is spatial, geometric, and continuous: the kind of problem text-trained LLMs were not designed to solve.
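Sensor fusion can take many forms. One of the simplest is inverse-variance weighting of independent Gaussian estimates of the same quantity, shown here as a minimal sketch. The sensor values and noise levels below are assumed for illustration. Production perception stacks use far richer learned and probabilistic fusion.

```python
from typing import List, Tuple


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance fusion of independent (mean, variance) estimates.

    Each estimate is weighted by 1/variance, so more precise sensors count more.
    The fused variance is never larger than the smallest input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    mean = sum(w * m for w, (m, _) in zip(weights, estimates)) / total
    return mean, 1.0 / total


if __name__ == "__main__":
    lidar = (20.0, 0.1 ** 2)   # distance in m, variance in m^2 (assumed values)
    radar = (20.5, 0.5 ** 2)
    print(fuse([lidar, radar]))  # mean about 20.02 m, variance about 0.0096 m^2
```

The fused distance stays close to the more precise LiDAR reading. It is also more certain than either sensor alone, which is the basic payoff of fusion.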

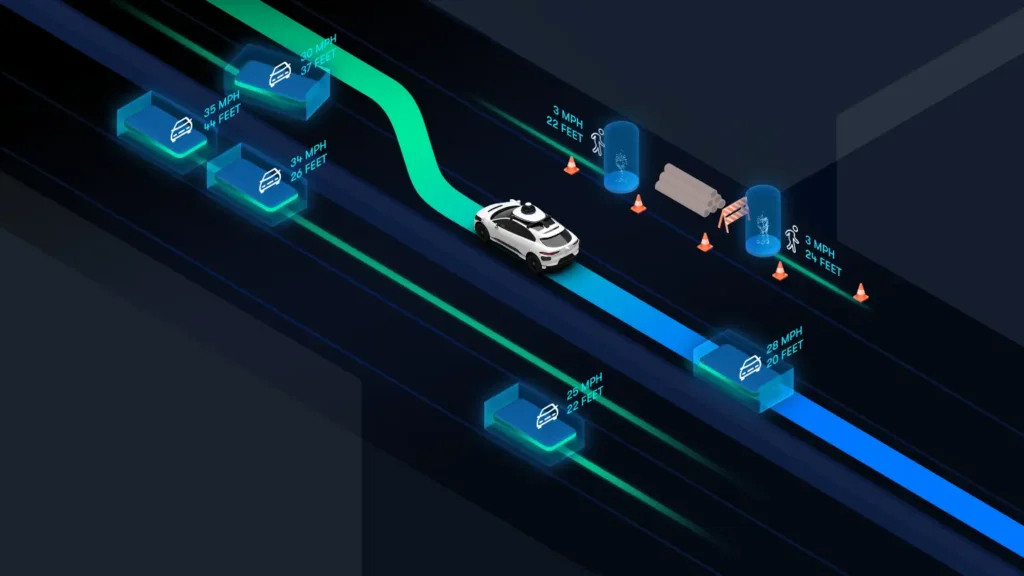

3. Prediction: Anticipating the Future (Politely)

Driving isn’t about reacting; it’s about predicting.

Waymo’s prediction systems estimate:

Where nearby agents are likely to move

Multiple possible futures, each with probabilities

Human behaviors like hesitation, aggression, or compliance

For example, a pedestrian near a crosswalk isn’t just a “person.” They’re a set of possible trajectories with likelihoods attached.

This probabilistic modeling is critical, and again, very different from next-word prediction in LLMs.
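The idea of a pedestrian as “a set of possible trajectories with likelihoods attached” can be sketched in a few lines. In this hypothetical example, each hypothesis is a constant-velocity rollout with an attached probability. The function asks how likely the pedestrian is to be in our lane at a given time. The hypothesis labels, speeds, probabilities, and lane geometry are all assumed values. Real prediction models generate these futures with learned networks rather than hand-written rules.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Hypothesis:
    label: str
    probability: float
    vx_mps: float  # velocity along the road
    vy_mps: float  # velocity across the road (positive = toward the lane)


def position_at(start: Tuple[float, float], h: Hypothesis,
                t_s: float) -> Tuple[float, float]:
    """Constant-velocity rollout of one hypothesis."""
    return (start[0] + h.vx_mps * t_s, start[1] + h.vy_mps * t_s)


def prob_in_zone(start: Tuple[float, float], hypotheses: List[Hypothesis],
                 t_s: float, zone: Tuple[float, float, float, float]) -> float:
    """Probability the agent is inside zone = (x_min, x_max, y_min, y_max) at t_s."""
    total = sum(h.probability for h in hypotheses)
    if not math.isclose(total, 1.0, abs_tol=1e-9):
        raise ValueError("hypothesis probabilities must sum to 1")
    x_min, x_max, y_min, y_max = zone
    p = 0.0
    for h in hypotheses:
        x, y = position_at(start, h, t_s)
        if x_min <= x <= x_max and y_min <= y <= y_max:
            p += h.probability
    return p


if __name__ == "__main__":
    pedestrian = (0.0, -2.0)  # on the curb, 2 m from the lane edge
    futures = [
        Hypothesis("waits", 0.6, 0.0, 0.0),
        Hypothesis("crosses", 0.3, 0.0, 1.4),
        Hypothesis("walks_along_sidewalk", 0.1, 1.2, 0.0),
    ]
    lane = (-5.0, 5.0, 0.0, 3.5)
    print(prob_in_zone(pedestrian, futures, 2.0, lane))  # only "crosses" is in the lane
```

A planner would consume a number like this and weigh a 30% chance of a pedestrian in the lane very differently from a 1% chance.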

4. Planning: Making Safe, Legal, and Social Decisions

Once the system understands the present and predicts the future, it must decide what to do.

Planning Constraints

Traffic laws

Safety margins

Passenger comfort

Local driving norms

The planner evaluates thousands of possible maneuvers (lane changes, stops, turns) and selects the safest viable path.

This process involves optimization algorithms, rule-based logic, and learned models, not free-form language generation. There is no room for “creative interpretation” when a red light is involved.
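One common way to combine rules with optimization is to treat laws and safety margins as hard filters, then minimize a cost built from comfort and progress. The sketch below is a hypothetical, heavily simplified version of that pattern. The `Maneuver` fields, thresholds, and cost weights are assumptions. The article does not describe Waymo’s actual planner.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Maneuver:
    name: str
    legal: bool             # e.g. False if it would run a red light
    min_gap_m: float        # closest predicted distance to any other road user
    peak_decel_mps2: float  # hardest braking the maneuver requires
    progress_m: float       # distance gained toward the destination


def choose_maneuver(candidates: List[Maneuver], safety_margin_m: float = 2.0,
                    comfort_decel_mps2: float = 3.0,
                    discomfort_weight: float = 10.0) -> Optional[Maneuver]:
    # Hard constraints: laws and safety margins filter candidates; they are never traded off.
    feasible = [m for m in candidates
                if m.legal and m.min_gap_m >= safety_margin_m]
    if not feasible:
        return None  # caller must fall back to a conservative behavior

    # Soft objective: penalize braking beyond the comfort threshold, reward progress.
    def cost(m: Maneuver) -> float:
        discomfort = max(0.0, m.peak_decel_mps2 - comfort_decel_mps2)
        return discomfort_weight * discomfort - m.progress_m

    return min(feasible, key=cost)


if __name__ == "__main__":
    options = [
        Maneuver("run_red_light", legal=False, min_gap_m=5.0, peak_decel_mps2=0.0, progress_m=40.0),
        Maneuver("squeeze_left", legal=True, min_gap_m=1.5, peak_decel_mps2=0.0, progress_m=35.0),
        Maneuver("stop_at_line", legal=True, min_gap_m=8.0, peak_decel_mps2=2.5, progress_m=12.0),
        Maneuver("hard_stop", legal=True, min_gap_m=10.0, peak_decel_mps2=6.0, progress_m=8.0),
    ]
    print(choose_maneuver(options).name)  # stop_at_line
```

The two fastest options never reach the cost function: one is illegal and the other violates the safety margin. Among the rest, a comfortable stop beats an unnecessarily harsh one.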

5. Control: Executing With Precision

Finally, the control system translates plans into:

Steering angles

Acceleration and braking

Real-time corrections

These control loops run at high frequency, updating every few milliseconds and reacting almost instantly to changes. This is classical robotics and control theory territory, domains where determinism beats eloquence every time.
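The PID controller is a classic example of the control-theory tools mentioned above. The sketch below illustrates the technique and is not Waymo’s controller. It tracks a target speed with a 100 Hz loop on a toy vehicle model whose speed simply integrates the commanded acceleration. The gains and actuator limits are assumed values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt_s: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt_s  # integral term removes steady-state error
        if self.prev_error is None:
            derivative = 0.0  # no history on the first call
        else:
            derivative = (error - self.prev_error) / dt_s  # damps rapid changes
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_speed_control(target_mps: float, start_mps: float,
                           duration_s: float = 30.0, dt_s: float = 0.01) -> float:
    """Run a 100 Hz loop on a toy vehicle whose speed integrates the command."""
    pid = PID(kp=0.8, ki=0.1, kd=0.05)  # assumed gains
    speed = start_mps
    for _ in range(int(round(duration_s / dt_s))):
        accel = pid.update(target_mps, speed, dt_s)
        accel = max(-6.0, min(3.0, accel))  # assumed braking/acceleration limits
        speed += accel * dt_s
    return speed


if __name__ == "__main__":
    print(simulate_speed_control(target_mps=15.0, start_mps=10.0))  # close to 15.0
```

The output is fully deterministic: the same inputs always produce the same commands. Control engineers can therefore analyze the loop’s stability mathematically before it ever runs on a vehicle.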

Where LLMs Fit (and Where They Don’t)

LLMs are powerful, but Waymo’s core driving system does not depend on them.

LLMs May Help With:

Human–machine interaction

Customer support

Natural language explanations

Internal tooling and documentation

LLMs Are Not Used For:

Real-time driving decisions

Safety-critical control

Sensor fusion or perception

Vehicle motion planning

Why? Because LLMs are:

Often non-deterministic (sampling can produce different outputs for the same input)

Hard to formally verify

Prone to confident errors (a.k.a. hallucinations)

A car that hallucinates is not a feature.
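Decoding shows where this non-determinism comes from. When an LLM samples its next token from a softmax distribution, the same input can produce different outputs. Greedy decoding, which always takes the top token, is repeatable for fixed scores, but it still offers no formal guarantee that the output is correct. The scores below are made-up numbers for illustration, not output from any real model.

```python
import math
import random
from typing import Dict


def softmax(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    scaled = {tok: v / temperature for tok, v in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy_next(logits: Dict[str, float]) -> str:
    """Deterministic: always the highest-scoring token."""
    return max(logits, key=logits.get)


def sampled_next(logits: Dict[str, float], temperature: float,
                 rng: random.Random) -> str:
    """Stochastic: draw a token in proportion to its softmax probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    # Hypothetical scores for the word after "The light turned".
    logits = {"red": 2.0, "green": 1.5, "yellow": 0.5}
    rng = random.Random()
    print([greedy_next(logits) for _ in range(5)])             # always "red"
    print([sampled_next(logits, 1.0, rng) for _ in range(5)])  # varies run to run
```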


Simulation: Where Waymo Really Scales

One of Waymo’s biggest advantages is simulation.

Billions of miles driven virtually

Rare edge cases replayed thousands of times

Synthetic scenarios that would be unsafe to test in reality

Simulation allows Waymo to validate improvements before deployment and to measure safety statistically, something no human-only driving system can do.
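The sketch below illustrates what “measure safety statistically” means. It runs many randomized trials of a toy braking scenario and reports the failure rate with a 95% Wilson confidence interval. The scenario, speeds, and braking numbers are hypothetical. A real simulator models full scenes and agent behavior, but estimating a failure rate from repeated trials uses the same statistics.

```python
import math
import random
from typing import Tuple


def scenario_fails(rng: random.Random, speed_mps: float = 13.4,
                   decel_mps2: float = 6.0) -> bool:
    """Hypothetical toy scenario: a pedestrian appears at a random distance ahead."""
    gap_m = rng.uniform(10.0, 60.0)
    latency_s = rng.uniform(0.1, 0.5)  # time before braking starts
    stopping_m = speed_mps * latency_s + speed_mps ** 2 / (2.0 * decel_mps2)
    return stopping_m >= gap_m


def wilson_interval(failures: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """95% Wilson score interval for a binomial proportion."""
    p = failures / trials
    denom = 1.0 + z ** 2 / trials
    center = (p + z ** 2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def estimate_failure_rate(trials: int, seed: int = 0) -> Tuple[float, Tuple[float, float]]:
    rng = random.Random(seed)  # seeded so a run can be replayed exactly
    failures = sum(scenario_fails(rng) for _ in range(trials))
    return failures / trials, wilson_interval(failures, trials)


if __name__ == "__main__":
    print(estimate_failure_rate(100_000))
```

The Wilson interval is a sensible choice when failures are rare. The naive interval, p ± z·sqrt(p(1 − p)/n), collapses to zero width when no failures are observed. Wilson still gives a meaningful upper bound that shrinks as more trials are run.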

Safety and Redundancy: The Unsexy Superpower

Waymo’s system is designed with:

Hardware redundancy

Software fail-safes

Conservative decision policies

Continuous monitoring

If something is uncertain, the car slows down or stops. No bravado. No ego. Just math.
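The “if uncertain, slow down or stop” policy can be sketched as a small decision function over redundant sensor readings. This is a hypothetical illustration. It takes the median of redundant range readings and checks that they agree. It degrades to slowing down when the sensors disagree or confidence is low, and to a minimal-risk stop when too few sensors report. The thresholds and action names are assumptions, not Waymo’s published policy.

```python
from enum import Enum
from statistics import median
from typing import List, Optional, Tuple


class Action(Enum):
    CONTINUE = "continue"
    SLOW_DOWN = "slow_down"
    MINIMAL_RISK_STOP = "minimal_risk_stop"


def choose_action(readings: List[Optional[float]], confidence: float,
                  tolerance_m: float = 0.5, min_confidence: float = 0.9,
                  quorum: int = 2) -> Tuple[Action, Optional[float]]:
    """Pick a conservative action from redundant distance readings.

    readings: one entry per redundant sensor; None means that sensor is down.
    confidence: perception's confidence in its scene estimate, 0..1.
    """
    valid = [r for r in readings if r is not None]
    if len(valid) < quorum:
        return Action.MINIMAL_RISK_STOP, None  # not enough working sensors
    estimate = median(valid)  # robust to one outlier among three readings
    sensors_agree = max(valid) - min(valid) <= tolerance_m
    if not sensors_agree or confidence < min_confidence:
        return Action.SLOW_DOWN, estimate  # uncertain: be conservative
    return Action.CONTINUE, estimate


if __name__ == "__main__":
    print(choose_action([20.0, 20.1, 19.9], confidence=0.95))  # CONTINUE
    print(choose_action([20.0, 23.0, 19.9], confidence=0.95))  # SLOW_DOWN
    print(choose_action([20.0, None, None], confidence=0.99))  # MINIMAL_RISK_STOP
```

The ordering of the checks encodes the conservative bias: losing redundancy overrides everything else, and any remaining doubt downgrades the action instead of being ignored.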

Conclusion: Beyond Language, Into Reality

Waymo works because it treats autonomous driving as a robotics and systems engineering problem, not a conversational one. While LLMs dominate headlines, Waymo is quietly tackling one of the hardest real-world AI challenges: safely navigating unpredictable human environments at scale.

In other words, LLMs may explain traffic laws beautifully, but Waymo actually follows them.

And on the road, that matters more than sounding smart.
