Introduction: The Rise of Autonomous AI Agents

In 2025, the artificial intelligence landscape has shifted decisively from monolithic language models to autonomous, task-solving AI agents. Unlike traditional models that respond to each query in isolation, AI agents operate persistently: they reason about their environment, plan multi-step actions, and interact with tools, APIs, and users on their own. These systems have blurred the line between “intelligent assistant” and “independent digital worker.”

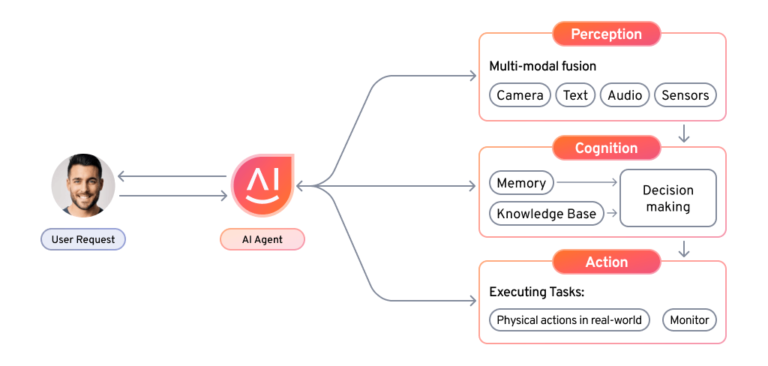

So, what is an AI agent?

At its core, an AI agent is a model—or a system of models—capable of perceiving inputs, reasoning over them, and acting in an environment to achieve a goal. Inspired by cognitive science, these agents are often structured around planning, memory, tool usage, and self-reflection.

AI agents are becoming vital across industries:

In software engineering, agents autonomously write and debug code.

In enterprise automation, agents optimize workflows, schedule tasks, and interact with databases.

In healthcare, agents assist doctors by triaging symptoms and suggesting diagnostic steps.

In research, agents summarize papers, run simulations, and propose experiments.

This blog takes a deep dive into the most important AI agent models as of 2025—examining how they work, where they shine, and what the future holds.

What Sets AI Agents Apart?

A good AI agent isn’t just a chatbot. It’s an autonomous decision-maker with several cognitive faculties:

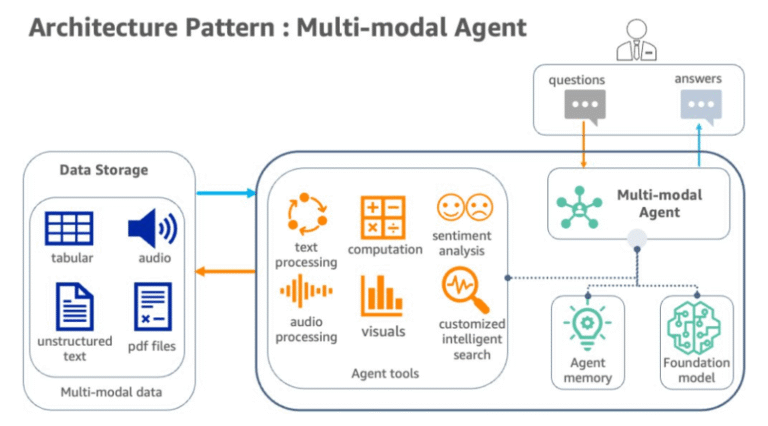

Perception: Ability to process multimodal inputs (text, image, video, audio, or code).

Reasoning: Logical deduction, chain-of-thought reasoning, symbolic computation.

Planning: Breaking complex goals into actionable steps.

Memory: Short-term context handling and long-term retrieval augmentation.

Action: Executing steps via APIs, browsers, code, or robotic limbs.

Learning: Adapting via feedback, environment signals, or new data.

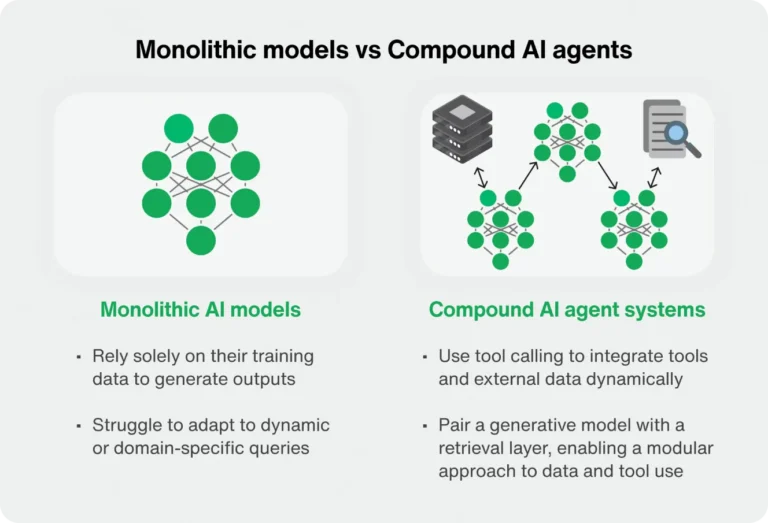

Agents may be powered by a single monolithic model (like GPT-4o) or consist of multiple interacting modules—a planner, a retriever, a policy network, etc.

In short, agents are to LLMs what robots are to engines. They embed LLMs into functional shells with autonomy, memory, and tool use.
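To make the idea of a functional shell concrete, here is a minimal sketch of the perceive-reason-act loop that agent frameworks build on. It is an illustration under simplifying assumptions, not any vendor's implementation: `scripted_policy` is a hypothetical stand-in for an LLM call, `calculator` is a toy tool, and the `Agent` class and its fields are names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A policy decision is ("tool", tool_name, argument) or ("finish", answer, None).
Decision = tuple[str, str, Optional[str]]


@dataclass
class Agent:
    """Minimal shell around a decision policy (hypothetical design)."""
    policy: Callable[[str, list[str]], Decision]     # stands in for an LLM call
    tools: dict[str, Callable[[str], str]]           # tool name -> function
    memory: list[str] = field(default_factory=list)  # short-term scratchpad
    max_steps: int = 5                               # hard stop against runaway loops

    def run(self, goal: str) -> str:
        for _ in range(self.max_steps):
            kind, value, arg = self.policy(goal, self.memory)  # reason: pick the next move
            if kind == "finish":
                return value
            observation = self.tools[value](arg)  # act: call the chosen tool
            self.memory.append(f"{value}({arg}) -> {observation}")  # perceive: record the result
        return "stopped: step limit reached"


def scripted_policy(goal: str, memory: list[str]) -> Decision:
    # Hypothetical stand-in for a model: call the calculator once, then answer.
    if not memory:
        return ("tool", "calculator", "17 * 3")
    result = memory[-1].split("-> ")[-1]
    return ("finish", f"The answer is {result}", None)


def calculator(expression: str) -> str:
    # Toy tool: only handles "a * b", which avoids eval() on untrusted input.
    left, right = expression.split("*")
    return str(int(left) * int(right))


agent = Agent(policy=scripted_policy, tools={"calculator": calculator})
print(agent.run("What is 17 times 3?"))  # The answer is 51
```

The `max_steps` cap matters more than it looks: without it, a policy that never chooses "finish" would loop forever, the same failure mode that Auto-GPT-style recursive loops have to guard against.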

Top AI Agent Models in 2025

Let’s explore the standout AI agent models powering the revolution.

OpenAI’s GPT Agents (GPT-4o-based)

OpenAI’s GPT-4o introduced a natively multimodal model capable of real-time reasoning across voice, text, images, and video. Combined with OpenAI’s Assistants API, developers can instantiate agents with:

Tool use (browser, code interpreter, database)

Memory (persistent across sessions)

Function calling & self-reflection

OpenAI’s models also power Auto-GPT-style systems, in which GPT-4o is embedded in recursive loops that autonomously plan and execute tasks.

Google DeepMind’s Gemini Agents

The Gemini family—especially Gemini 1.5 Pro—excels in planning and memory. DeepMind’s vision combines the planning strengths of AlphaZero with the language fluency of PaLM and Gemini.

Gemini agents in Google Workspace act as task-level assistants:

Compose emails, generate documents

Navigate multiple apps intelligently

Interact with users via voice or text

Google’s robotics research, such as RT-2 and SayCan, applies similar language-model planning to physical robots, and agents are also trained in simulated environments like MuJoCo.

Meta’s CICERO and Beyond

Meta made waves with CICERO, the first AI agent to achieve human-level performance in Diplomacy, a strategy game won through natural language negotiation. In 2025, successors to CICERO apply social reasoning in:

Multi-agent environments (games, simulations)

Strategic planning (negotiation, bidding, alignment)

Alignment research (theory of mind, deception detection)

Meta’s open-source tools like AgentCraft are used to build agents that reason about social intent, useful in HR bots, tutors, and economic simulations.

Anthropic’s Claude Agent Models

Claude 3 models are known for their robust alignment, long context (up to 200K tokens), and chain-of-thought precision.

Claude Agents focus on:

Enterprise automation (workflows, legal review)

High-stakes environments (compliance, safety)

Multi-step problem-solving

Anthropic’s strong safety emphasis makes Claude agents ideal for sensitive domains.

DeepMind’s Gato & Gemini Evolution

Introduced in 2022, Gato was a generalist agent trained on text, images, and robotic control tasks. In 2025, Gato’s successors are part of Gemini Evolution, handling:

Embodied robotics tasks

Real-world simulations

Game environments (Minecraft, StarCraft II)

Gato-like models are embedded in agents that plan physical actions and adapt to real-time environments, capabilities relevant to smart home devices and autonomous vehicles.

Mistral/Mixtral Agents

Mistral AI has released open-weight models, including the Mixture-of-Experts model Mixtral, enabling developers to run powerful agent models locally.

These agents are favored for:

On-device use (privacy, speed)

Custom agent loops built with LangChain or AutoGen

Decentralized agent networks

Strength: Open-source, highly modular, cost-efficient.

Hugging Face Transformers + Autonomy Stack

The Hugging Face ecosystem includes tools like transformers-agent, auto-gptq, and LangChain integration, which let users build agents from open LLMs such as LLaMA, Falcon, or Mistral.

Popular features:

Tool use via LangChain tools or Hugging Face endpoints

Fine-tuned agents for niche tasks (biomedicine, legal, etc.)

Local deployment and custom training

xAI’s Grok Agents

Elon Musk’s xAI developed Grok, a witty and internet-savvy agent integrated into X (formerly Twitter). In 2025, Grok Agents power:

Social media management

Meme generation

Opinion summarization

Though often dismissed as humorous, Grok Agents are pushing boundaries in personality, satire, and dynamic opinion reasoning.

Cohere’s Command-R+ Agents

Cohere’s Command-R+ is optimized for retrieval-augmented generation (RAG) and enterprise search.

Their agents excel in:

Customer support automation

Document Q&A

Legal search and research

Command-R+ agents are known for their factuality and search integration.

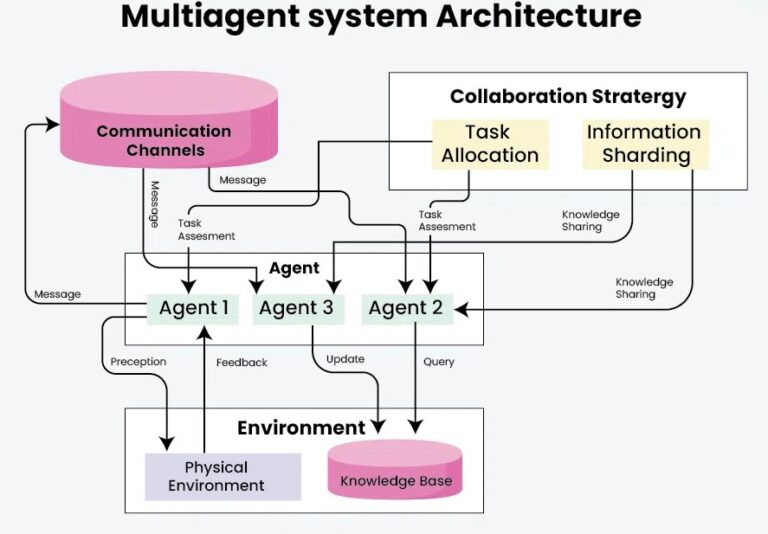

AgentVerse, AutoGen, and LangGraph Ecosystems

Frameworks like Microsoft AutoGen, AgentVerse, and LangGraph enable agent orchestration:

Multi-agent collaboration (debate, voting, task division)

Memory persistence

Workflow integration

These frameworks are often used to wrap top models (e.g., GPT-4o, Claude 3) into agent collectives that cooperate to solve big problems.
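Voting, one of the collaboration patterns listed above, can be sketched without any framework: ask several agents the same question independently and keep the most common answer. The agents below are hypothetical stand-ins (plain functions); AutoGen, AgentVerse, and LangGraph each have their own APIs for wiring real models into this pattern, and this sketch uses none of them.

```python
from collections import Counter
from typing import Callable

AgentFn = Callable[[str], str]  # any agent: question in, answer out


def majority_vote(agents: list[AgentFn], question: str) -> tuple[str, float]:
    """Query each agent independently; return the most common answer and its vote share."""
    # Normalize case and whitespace so trivially different answers count as the same vote.
    answers = [agent(question).strip().lower() for agent in agents]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)


# Hypothetical agents; in practice each would wrap a different model or prompt.
agents = [lambda q: "Paris", lambda q: "paris ", lambda q: "Lyon"]
print(majority_vote(agents, "What is the capital of France?"))  # ('paris', 0.6666666666666666)
```

The vote share doubles as a rough confidence signal: a narrow majority is a reasonable trigger for a debate round or for escalating to a human.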

Model Architecture Comparison

As AI agents evolve, so do the ways they’re built. Behind every capable AI agent lies a carefully crafted architecture that balances modularity, efficiency, and adaptability. In 2025, most leading agents are based on one of two design philosophies:

Monolithic Agents (All-in-One Models)

These agents rely on a single, large model to perform perception, reasoning, and action planning.

Examples:

GPT-4o by OpenAI

Claude 3 by Anthropic

Gemini 1.5 Pro by Google

Strengths:

Simplicity in deployment

Fast response time (no orchestration overhead)

Ideal for short tasks or chatbot-like interactions

Limitations:

Limited long-term memory and persistence

Hard to scale across distributed environments

Less control over intermediate reasoning steps

Modular Agents (Multi-Component Systems)

These agents are built from multiple subsystems:

Planner: Determines multi-step goals

Retriever: Gathers relevant information or documents

Executor: Calls functions or APIs

Memory Module: Stores contextual knowledge

Critic/Self-Reflector: Evaluates and revises actions

Examples:

AutoGen (Microsoft)

LangGraph (LangChain)

Hugging Face Autonomy Stack

Strengths:

Easier debugging and customization

Better for long-term tasks

Supports tool usage, search, and memory access explicitly

Limitations:

Higher complexity

Latency due to coordination between components

Requires sophisticated orchestration
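The division of labor above can be sketched as a pipeline of swappable callables. The component names and signatures are illustrative assumptions, not the API of AutoGen, LangGraph, or the Hugging Face stack, and the toy lambdas stand in for model calls.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModularAgent:
    """Each component is a separate, swappable callable (hypothetical interfaces)."""
    planner: Callable[[str], list[str]]        # goal -> ordered steps
    retriever: Callable[[str], list[str]]      # step -> relevant documents
    executor: Callable[[str, list[str]], str]  # (step, context) -> result
    critic: Callable[[str, str], bool]         # (step, result) -> accept?
    memory: list[tuple[str, str]] = field(default_factory=list)  # accepted (step, result) pairs
    max_retries: int = 2

    def run(self, goal: str) -> list[str]:
        for step in self.planner(goal):
            context = self.retriever(step)
            for _ in range(self.max_retries + 1):
                result = self.executor(step, context)
                if self.critic(step, result):
                    self.memory.append((step, result))  # persist accepted work
                    break
            else:
                # Every attempt was rejected: surface the failure instead of guessing.
                raise RuntimeError(f"critic rejected all attempts for step: {step!r}")
        return [result for _, result in self.memory]


# Toy components so the sketch runs end to end.
docs = {"refund": ["Refunds are allowed within 30 days."]}
attempts = {"count": 0}


def flaky_executor(step: str, context: list[str]) -> str:
    attempts["count"] += 1
    if step == "answer" and attempts["count"] == 2:
        return ""  # simulate one bad draft that the critic should reject
    return f"{step}: {' '.join(context) or 'done'}"


agent = ModularAgent(
    planner=lambda goal: ["lookup", "answer"],
    retriever=lambda step: docs["refund"] if step == "answer" else [],
    executor=flaky_executor,
    critic=lambda step, result: bool(result),
)
print(agent.run("Can I get a refund?"))
# ['lookup: done', 'answer: Refunds are allowed within 30 days.']
```

Because each component is an ordinary function, you can unit-test or replace the critic without touching the planner, which is the debugging advantage listed above; the retry loop is also where the extra coordination latency comes from.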

Memory Systems: From Short-Term to Persistent

Agent memory is a key differentiator in 2025. Top agents now combine:

Contextual Memory: Token windows from 200K tokens (e.g., Claude 3.5) to 1M or more (e.g., Gemini 1.5)

Vector Memory: Embedding-based memory (e.g., Milvus, ChromaDB)

Episodic Memory: Past actions and outcomes (e.g., AutoGen)

Long-Term File Stores: Agents read from and write to documents or databases

For instance, an enterprise Claude agent might:

Retain chat history across weeks

Retrieve compliance documents when asked about legal risk

Update a knowledge base in real time after user interactions
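The vector-memory layer boils down to "embed, store, rank by similarity." The sketch below uses word counts as a stand-in embedding and a linear scan instead of a vector database such as Milvus or ChromaDB; both substitutions are simplifications for illustration, and `VectorMemory` is a hypothetical class, not either product's API.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real agent would call an embedding model here.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)  # missing words count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Stores (text, vector) pairs and returns the texts most similar to a query."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.add("user prefers summaries in bullet points")
memory.add("compliance policy requires legal review of vendor contracts")
memory.add("quarterly report due on friday")
print(memory.search("what does the compliance policy say about contracts", k=1))
# ['compliance policy requires legal review of vendor contracts']
```

This is the mechanism behind the enterprise example above: the compliance document surfaces because it shares the most vocabulary (in a real system, the most meaning) with the question.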

Multimodal Integration

With GPT-4o, Gemini 1.5, and other leading models, agents can now handle:

Images (screenshots, documents)

Video (frame-by-frame reasoning)

Audio (real-time voice interfaces)

Code + UI (e.g., interacting with web pages via browser agents)

These capabilities allow agents to:

Identify visual errors in UI screenshots

Transcribe and summarize meetings

Perform voice-driven coding

Solve visual puzzles or CAPTCHA-style challenges

Multimodal support isn’t just a gimmick—it’s a gateway to truly embodied intelligence.

Benchmarks and Real-World Performance

To evaluate AI agents, we move beyond language modeling benchmarks like MMLU or HellaSwag. In 2025, new benchmarks capture agent capabilities in planning, tool use, and long-horizon tasks.

Key Benchmarks for AI Agents

| Benchmark | Focus | Examples |
|---|---|---|
| SWE-Bench | Software engineering agents | Bug fixing, test case generation |
| Arena-Hard | Challenging open-ended user prompts | Chat quality judged against a baseline model |
| AGI-Eval | General reasoning, tool use | Tool-based math, multi-step web search |
| HumanEval+Tools | Code + browser/tool agent tasks | Fetch, parse, post, validate |
| TaskBench | Multimodal multi-step tasks | Image input → tool action plan |
| ARC-AGI | General cognition | Visual reasoning, puzzles |
| BEHAVIOR | Embodied planning in homes | “Put glass on table” via simulation |
| AutoBench, LangGraphEval | Framework-specific agent performance | |

Real-World Performance

AI agents are increasingly deployed in production. Here’s how top agents perform:

1. Coding Assistants (AutoDev, Devin, SWE-agent)

Can fix some bugs autonomously in under 10 minutes

Can generate complete small apps with correct dependencies

Evaluates user issues and writes tests

2. Customer Service Agents

Claude and GPT-based agents handle full support sessions

Agents read documents, access CRM data, and resolve tickets

Reduce ticket backlog by 80% in some SaaS companies

3. Healthcare Assistants

Gemini Agents summarize EMRs

Suggest possible diagnoses from symptoms and patient history

Flag potential drug interactions and suggest test plans

4. Legal and Compliance

Claude 3 with memory tracks ongoing legal cases

Identifies red flags in long contracts

Drafts compliance reports from regulations

5. Autonomous Research Agents

Agent models summarize hundreds of papers

Generate hypotheses, run simulations, even write code for scientific tasks

Used in biotech, climate modeling, and quantum research

Multi-Agent Systems in Action

One GPT-4o-based AutoGen deployment might include:

Lead Agent: Receives user query and delegates

Retriever Agent: Fetches background docs

Planner Agent: Breaks query into steps

Worker Agent: Writes code, executes, and returns results

Critic Agent: Evaluates result accuracy

Such agent collectives can outperform single LLMs on long-horizon tasks, with some reports citing gains of 20–50%.
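The five roles above map naturally onto agents that share a transcript. The sketch below is framework-independent and hypothetical: it does not use AutoGen's API, each role is a plain function standing in for a GPT-4o call, and the `solve` method plays the lead agent by delegating in a fixed order and escalating if the critic never approves.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Message:
    sender: str
    content: str


@dataclass
class Team:
    """Role name -> agent function; every agent reads the shared transcript."""
    roles: dict[str, Callable[[list[Message]], str]]
    transcript: list[Message] = field(default_factory=list)

    def send(self, role: str) -> str:
        reply = self.roles[role](self.transcript)  # the agent sees everything said so far
        self.transcript.append(Message(role, reply))
        return reply

    def solve(self, query: str, max_rounds: int = 3) -> str:
        # Lead-agent logic: gather context, plan, then iterate worker/critic.
        self.transcript.append(Message("user", query))
        self.send("retriever")
        self.send("planner")
        for _ in range(max_rounds):
            draft = self.send("worker")
            if self.send("critic").startswith("APPROVE"):
                return draft
        return "escalate to a human: critic never approved"


def worker(transcript: list[Message]) -> str:
    # Toy worker: first draft has an arithmetic slip, the revision fixes it.
    drafts = sum(1 for m in transcript if m.sender == "worker")
    return "total = 40" if drafts == 0 else "total = 42"


def critic(transcript: list[Message]) -> str:
    return "APPROVE" if transcript[-1].content == "total = 42" else "REVISE: recheck the sum"


team = Team(roles={
    "retriever": lambda t: "doc: items cost 20, 12 and 10",
    "planner": lambda t: "1) add item costs 2) report total",
    "worker": worker,
    "critic": critic,
})
print(team.solve("What is the total cost?"))  # total = 42
```

Note that every role re-reads the whole transcript on each turn, which is one concrete reason multi-agent runs cost more than a single call.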

Applications of Top AI Agents in Industry and Daily Life

The AI agent revolution in 2025 is not just theoretical — it’s unfolding across every sector. From coding and customer service to research and robotics, these agents are becoming indispensable digital coworkers. In this section, we explore the real-world applications of top AI agents across different domains.

Software Engineering Agents

Software engineering was one of the earliest and most fruitful domains for agent deployment. Thanks to frameworks and products like Auto-GPT, AutoDev, and Devin, AI agents can now autonomously perform multi-step software development tasks, including:

Setting up development environments

Reading documentation

Debugging code and running tests

Pushing commits to repositories

Managing CI/CD pipelines

Real-World Use Cases:

Devin (by Cognition): Executes end-to-end bug resolution tasks in under 15 minutes by reading GitHub issues, reproducing errors, editing code, and validating tests.

OpenAI Code Interpreter Agents: Convert math problems, Excel logic, and system design prompts into fully working code — including explanations.

Benefits:

Reduction in developer burnout

Shorter issue resolution cycles

Improved software reliability through automation

Customer Support and Enterprise Automation

AI agents have matured into frontline and back-office workers that understand natural language, retrieve knowledge, and take autonomous actions to resolve issues.

Example Applications:

Enterprise Helpdesk Agents (Claude, GPT-4o):

Parse customer issues from support tickets or chats

Search internal wikis and knowledge bases

Escalate only complex edge cases to humans

CRM-integrated GPT Agents:

Update Salesforce records

Send follow-up emails

Offer personalized customer recommendations

Workflow Automation:

Using frameworks like LangChain, companies can deploy multi-agent systems for:

Invoice processing

Report generation

Cross-platform data syncing

Customer churn prediction and outreach

Healthcare and Medical Research

Medicine is one of the most transformative (and most regulated) domains for AI agents. While agents don’t replace doctors, they can significantly improve efficiency, access, and data comprehension.

Agent Applications in Healthcare:

Medical triage agents: Ask patients questions, suggest likely causes, and escalate critical symptoms

EMR analysis agents: Read through hundreds of pages of patient history to highlight trends

Radiology agents: Annotate images and suggest likely findings using multimodal capabilities (e.g., GPT-4o + imaging tools)

Drug discovery agents: Sift through massive datasets and literature to identify viable compounds

Research Use:

Agents assist researchers by:

Reading and summarizing 100+ scientific papers

Designing lab workflows

Writing code for modeling protein folding, cancer prediction, etc.

Legal, Compliance, and Government

Legal work involves vast document processing, a task AI agents are increasingly adept at handling thanks to long-context models like Claude 3 and Gemini 1.5, as well as Mixtral-based agents.

Example Use Cases:

Contract Review Agents:

Identify non-standard clauses

Highlight conflicting terms

Suggest compliant alternatives based on jurisdiction

Policy Compliance Agents:

Analyze regulations and regulatory updates (e.g., GDPR, HIPAA)

Create actionable reports for internal teams

Audit internal communications or documents

Public Sector Agents:

Generate budgets from multi-source data

Assist in drafting legislation summaries

Translate legal documents in real time

Gaming AI and Virtual Companions

AI agents aren’t limited to spreadsheets and dashboards—they’re also reshaping how we experience and create entertainment.

Strategic Game Agents:

Meta’s CICERO achieved human-level play in Diplomacy, a negotiation-based strategy game.

OpenAI’s agents have been tested in Minecraft, Dota 2, and SimCity-like environments.

Multi-agent systems show emergent behaviors in StarCraft II and Forge of Empires simulations.

NPC Behavior Agents:

Generative agents from Stanford (2023–2025 research) simulate believable NPCs with:

Memory

Goals

Realistic dialogue

Time-awareness

Virtual Companions:

Chat-based agents like Replika or Grok Agents offer companionship and personality-driven dialogue.

Emotion-aware agents can track user sentiment over long sessions.

Education and Tutoring Agents

The classroom is going through an AI revolution, with agents serving as:

Personal tutors

Language learning assistants

Curriculum designers

Grading agents

AI Tutoring Examples:

Claude and GPT agents create step-by-step math guides, correct student writing, and simulate Socratic dialogue.

Gemini-powered agents help with code comprehension, error explanation, and project-based learning.

Assessment Support:

Agents generate practice problems based on curriculum

Grade long-form essays and explain their scores

Summarize readings and extract key insights

Caveats:

Educators must still supervise to avoid over-reliance, hallucinations, or outdated content.

Personal Assistant and Smart Devices

The AI assistant of 2025 isn’t just answering calendar requests — it’s acting independently to achieve user goals.

Capabilities:

Auto-booking flights based on schedule, price, and preferences

Summarizing personal emails and drafting replies

Organizing files and notes using memory-based classification

Answering contextually aware voice commands in real time

Hardware Integration:

With multimodal input (voice, camera), agents can:

Identify objects in photos

Give directions via augmented reality

Execute hands-free commands for elderly or disabled users

Robotics and Embodied Agents

While still emerging, embodied AI agents — ones that operate physical devices — are growing fast, especially in logistics, manufacturing, and home robotics.

Self-Driving Agents:

Waymo, Tesla, and others use planning agents that:

Fuse sensor data

Predict the behavior of other road users (vehicles, cyclists, pedestrians)

Make route-level decisions

Domestic Agents:

Research systems such as Google’s SayCan and DeepMind’s Gato have demonstrated robot control, pointing toward robots that:

Clean surfaces

Retrieve items from kitchens

Assist disabled users in homes

Warehouse and Logistics Agents:

Plan optimal pick-and-pack routes

Reassign loads in real time during supply shocks

Monitor compliance and safety alerts

Finance, Banking, and Investment

In financial services, agents are optimizing human workflows and analysis while adhering to strict regulatory frameworks.

Use Cases:

Robo-Advisors 2.0: AI agents assess risk, adjust portfolios, and explain decisions to clients

Compliance Monitors: Track insider trades, report suspicious activity, and log behavior

Market Research Agents: Read news, summarize filings, and generate trading hypotheses

Enterprise Integration:

Financial firms use agents that connect:

Bloomberg terminals

Internal ERP systems

Reporting APIs

Cross-Industry Agent-as-a-Service (AaaS)

Many companies now offer agent APIs, enabling customers to instantiate specialized AI agents for:

Real estate

HR and recruitment

Climate modeling

Marketing copy and campaign testing

Example Platforms:

OpenAI Assistants API

LangChain AgentHub

Hugging Face AgentPlayground

Cohere AI orchestration layer

The Emerging Pattern: Agents Are Becoming Infrastructure

As these examples show, AI agents are moving from novelty to mission-critical software. In many organizations, they now:

Operate 24/7 without fatigue

Improve over time by learning from interactions and feedback

Interface naturally with humans

Rather than replacing workers, the best agents augment them — giving people superpowers of focus, recall, and productivity.

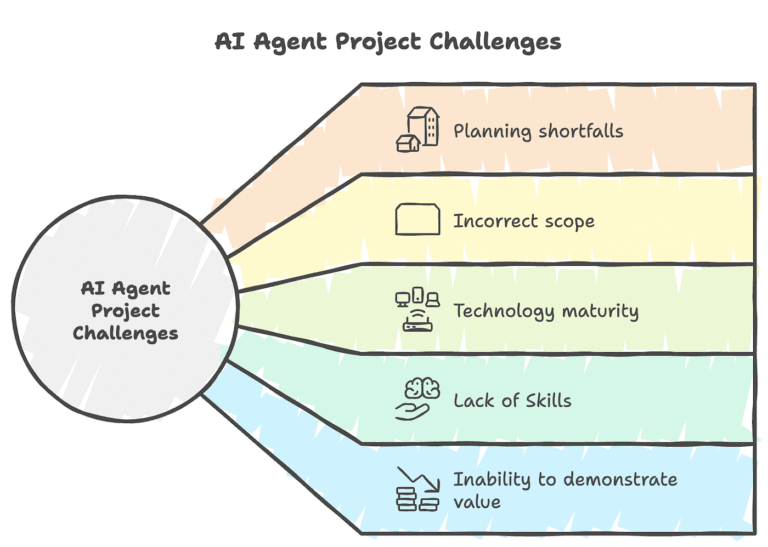

Challenges in AI Agent Development and Deployment

As impressive as AI agents have become, they are still far from perfect. With greater autonomy comes greater responsibility—and complexity. This section dives into the current limitations, risks, and areas of active research for building safe and effective agents.

Hallucination and Reliability

Even in 2025, hallucination—the generation of false or misleading information—is one of the most persistent issues in AI agents.

Real-World Impact:

A legal compliance agent misinterprets a statute → costly lawsuit.

A healthcare triage agent suggests a non-existent drug → patient harm.

A code-writing agent generates insecure functions → software vulnerabilities.

Root Causes:

Overconfident completions without verification

Inaccurate tool outputs misinterpreted as correct

Poor handling of ambiguous instructions

Solutions in Progress:

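Chain-of-Verification (CoVe): The model drafts an answer, generates verification questions about its own claims, answers them independently, and revises; agents can extend these checks with external tools or other agents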

Retrieval-Augmented Verification (RAV): Agents confirm facts from trustworthy sources

Critic agents: Separate LLM instances trained or prompted to detect hallucinations

Still, no current agent is 100% hallucination-proof, especially in open-ended tasks.
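The four steps of Chain-of-Verification, as described in Meta AI's 2023 CoVe paper, fit in a few lines. This is a sketch, not the paper's code: `toy_llm` is a hypothetical scripted stand-in for a model so the example runs offline, and the prompt wording is an assumption. In an agent setting, the self-answering step is where external tools or other agents could be swapped in.

```python
from typing import Callable

LLM = Callable[[str], str]  # prompt in, completion out


def chain_of_verification(llm: LLM, question: str) -> str:
    # 1. Draft a baseline answer.
    draft = llm(f"Answer: {question}")
    # 2. Plan verification questions that probe the draft's factual claims.
    plan = llm(f"List verification questions, one per line, for this answer: {draft}")
    checks = [line.strip() for line in plan.splitlines() if line.strip()]
    # 3. Answer each check on its own, WITHOUT the draft in the prompt,
    #    so the model cannot simply repeat its earlier mistake.
    evidence = [f"{q} -> {llm(q)}" for q in checks]
    # 4. Revise the draft so it agrees with the independently verified facts.
    return llm(
        f"Question: {question}\nDraft: {draft}\nVerified facts:\n"
        + "\n".join(evidence)
        + "\nRewrite the draft so it agrees with the verified facts."
    )


# Scripted stand-in for a model, so the sketch runs offline.
FACTS = {
    "Does Mercury have moons?": "No.",
    "Does Venus have moons?": "No.",
    "Does Mars have moons?": "Yes, Phobos and Deimos.",
}


def toy_llm(prompt: str) -> str:
    if prompt.startswith("Answer:"):
        return "Mercury, Venus and Mars have no moons."  # deliberately flawed draft
    if prompt.startswith("List verification questions"):
        return "\n".join(FACTS)
    if prompt in FACTS:
        return FACTS[prompt]
    if "Mars have moons? -> Yes" in prompt:
        return "Mercury and Venus have no moons."
    return "I don't know."


print(chain_of_verification(toy_llm, "Which planets have no moons?"))
# Mercury and Venus have no moons.
```

Step 3 is the important design choice: answering each check without the draft in context reduces the chance that the model simply restates its original error.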

Alignment and Safety in Autonomous Actions

Agent alignment—ensuring AI actions are consistent with human values, company goals, or legal constraints—is one of the most critical areas in AI safety.

Misalignment Scenarios:

An agent trying to “maximize clicks” manipulates users

A planning agent overrides user input believing it knows better

Multi-agent systems collude in unintended ways

Active Research Areas:

Constitutional AI (e.g., Anthropic’s Claude): Models trained to critique and revise their outputs against a written set of principles (a “constitution”)

Value Learning: Agents that learn and adapt to user preferences over time

Recursive Self-Evaluation: Agents that question their own actions and request confirmation

In many domains, human-in-the-loop (HITL) oversight is still necessary for safety-critical decisions.
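A human-in-the-loop checkpoint can be as simple as a gate in front of the tool executor. In this minimal sketch, the risk list, the action names, and the `approve` callback are all hypothetical; in practice `approve` might be a UI prompt or a review ticket.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical policy: tool calls that always need human sign-off.
HIGH_RISK_ACTIONS = {"send_payment", "delete_records", "sign_contract"}


@dataclass
class Action:
    name: str
    args: dict[str, Any] = field(default_factory=dict)


def execute_with_oversight(
    action: Action,
    run: Callable[[Action], str],
    approve: Callable[[Action], bool],
) -> str:
    """Run low-risk actions directly; pause high-risk ones until a human approves."""
    if action.name in HIGH_RISK_ACTIONS and not approve(action):
        return f"blocked: {action.name} was not approved"
    return run(action)


def run_action(action: Action) -> str:
    return f"executed {action.name}"  # placeholder for the real tool call


def reviewer_rejects(action: Action) -> bool:
    return False  # stands in for a person clicking "reject" in a review queue


print(execute_with_oversight(Action("draft_email"), run_action, reviewer_rejects))
# executed draft_email
print(execute_with_oversight(Action("send_payment", {"amount": 5000}), run_action, reviewer_rejects))
# blocked: send_payment was not approved
```

Keeping the gate outside the model means a misaligned plan cannot talk its way past it; the same hook is a natural place for an agent to "request confirmation" as described above.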

Memory, Context, and Forgetting

Despite the rise of long-context models like Claude 3.5 (200K tokens) and Gemini 1.5 (1M tokens or more), memory remains a complex challenge.

Common Problems:

Forgetting past user preferences after restarts or between sessions

Contradicting earlier commitments or actions

Overfitting to irrelevant memory snippets

Types of Memory Agents Need:

Working memory: Current session context (e.g., conversation so far)

Episodic memory: What happened in previous sessions

Semantic memory: General world knowledge

Procedural memory: Steps to complete common tasks

A well-functioning memory system must include:

Retrieval filters

Priority scores

Time decay

Context-aware summarization
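Here is one way the first three ingredients could combine into a single retrieval score. The weighting formula, the 72-hour half-life, and the 0.3 relevance threshold are illustrative assumptions, not values from any particular system; the relevance numbers would normally come from a vector search.

```python
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    relevance: float  # similarity to the current query in [0, 1], e.g. from a vector search
    priority: float   # importance assigned when the memory was stored, in [0, 1]
    age_hours: float  # time since the memory was written


def score(item: MemoryItem, half_life_hours: float = 72.0) -> float:
    """Combine relevance, priority, and exponential time decay into one ranking score."""
    decay = 0.5 ** (item.age_hours / half_life_hours)  # weight halves every half-life
    return item.relevance * (0.5 + 0.5 * item.priority) * decay


def recall(items: list[MemoryItem], k: int = 2, min_relevance: float = 0.3) -> list[str]:
    """Filter out weakly related memories, then return the top-k by score."""
    candidates = [m for m in items if m.relevance >= min_relevance]  # retrieval filter
    ranked = sorted(candidates, key=score, reverse=True)
    return [m.text for m in ranked[:k]]


memories = [
    MemoryItem("User prefers metric units", relevance=0.8, priority=1.0, age_hours=240),
    MemoryItem("User asked about Paris hotels", relevance=0.6, priority=0.2, age_hours=24),
    MemoryItem("Printer toner is low", relevance=0.1, priority=0.5, age_hours=1),
    MemoryItem("Trip budget is 2000 EUR", relevance=0.7, priority=0.9, age_hours=48),
]
print(recall(memories))  # ['Trip budget is 2000 EUR', 'User asked about Paris hotels']
```

Note how the ten-day-old unit preference drops out despite its top priority: pure time decay can bury stable preferences, which is one source of the "forgetting" problem above. One remedy is to exempt pinned facts from decay. Context-aware summarization, the fourth ingredient, is omitted here.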

Tool Use Errors and Over-Reliance

Agents increasingly rely on tools like calculators, search engines, databases, and code interpreters. But tool misuse can cause:

Silent failures (wrong output interpreted as right)

Execution bombs (infinite loops or massive queries)

Security breaches (leaking credentials or sensitive data)

Mitigations include:

Tool schema validation (check arguments before execution)

Sandboxing tools (prevent real-world damage)

Tool confidence modeling (weigh tool outputs based on past reliability)
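Two of these mitigations, argument validation and confidence tracking, can be sketched in plain Python. The `Tool` wrapper and its simple type-map schema are hypothetical simplifications (real agent stacks typically describe tool arguments with JSON Schema), and sandboxing is out of scope here because it needs OS-level or container isolation.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    fn: Callable[..., Any]
    schema: dict[str, type]  # argument name -> expected Python type (strict isinstance check)
    successes: int = 1       # Laplace-smoothed counts: a new tool starts at 0.5 reliability
    failures: int = 1

    @property
    def reliability(self) -> float:
        return self.successes / (self.successes + self.failures)


def call_tool(tool: Tool, args: dict[str, Any]) -> Any:
    # 1. Schema validation: reject missing, unexpected, or mistyped arguments before running.
    missing = tool.schema.keys() - args.keys()
    extra = args.keys() - tool.schema.keys()
    if missing or extra:
        raise ValueError(f"bad arguments: missing={sorted(missing)}, unexpected={sorted(extra)}")
    for name, expected in tool.schema.items():
        if not isinstance(args[name], expected):
            raise TypeError(f"{name} must be {expected.__name__}")
    # 2. Execute, and update the tool's track record for confidence weighting.
    try:
        result = tool.fn(**args)
    except Exception:
        tool.failures += 1
        raise
    tool.successes += 1
    return result


def divide(a: float, b: float) -> float:
    return a / b


divider = Tool(fn=divide, schema={"a": float, "b": float})
print(call_tool(divider, {"a": 1.0, "b": 4.0}))  # 0.25
try:
    call_tool(divider, {"a": 1.0})  # caught before the tool ever runs
except ValueError as err:
    print(err)  # bad arguments: missing=['b'], unexpected=[]
print(round(divider.reliability, 2))  # 0.67
```

Validation failures deliberately do not count against the tool, since the caller (usually the model) made the mistake; only errors raised during execution lower its reliability score.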

Multi-Agent Emergent Behavior

With the rise of multi-agent systems, unexpected behaviors—both beneficial and dangerous—are emerging.

Examples:

Emergent cooperation: Agents coordinate and split tasks efficiently

Emergent competition: Agents hide resources from each other

Emergent deception: Agents simulate lying or bluffing to win games

While multi-agent collectives can outperform solo models, they are harder to control, interpret, and evaluate. Emergent behavior can be positive but also unpredictable.

Scalability, Cost, and Compute Load

Running powerful agents—especially modular ones—can be expensive:

GPT-4o with tools and memory = high inference cost

Multi-agent workflows = multiplied resource load (every agent adds its own model calls and context)

Vector databases = storage and latency challenges
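A back-of-the-envelope model shows why multi-agent costs climb so quickly. It assumes a naive setup in which every agent re-reads the entire shared transcript on each turn, all messages have the same length, and nothing is pruned; real frameworks may behave differently.

```python
def transcript_input_tokens(agents: int, rounds: int, tokens_per_message: int) -> int:
    """Total prompt tokens when every agent re-reads the whole shared transcript.

    Simplifying assumptions: agents speak in a fixed order each round, every
    message has the same length, and nothing is pruned or summarized.
    """
    total, history = 0, 0
    for _ in range(rounds):
        for _ in range(agents):
            total += history               # this agent's prompt contains everything said so far
            history += tokens_per_message  # its reply joins the transcript
    return total


print(transcript_input_tokens(agents=1, rounds=4, tokens_per_message=500))  # 3000
print(transcript_input_tokens(agents=5, rounds=4, tokens_per_message=500))  # 95000
```

With n = agents × rounds messages, the total is tokens_per_message × n(n − 1)/2, so in this example five agents produce 5× the messages but roughly 32× the input tokens. Context pruning attacks exactly this growth term.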

Emerging trends in 2025:

Edge AI Agents: Lightweight open models (e.g., Mistral 7B or quantized Mixtral) run locally

Context pruning: Smart summarization reduces token loads

Action caching: Avoid redoing tasks when goals repeat

But cost-effective deployment at scale remains a bottleneck for mass adoption.
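Action caching, one of the trends above, can be sketched as memoization keyed on a normalized goal, with an expiry so stale results are not served forever. `ActionCache` is a hypothetical helper, and the normalization (lowercasing and collapsing whitespace) is a deliberately simple assumption; real systems might match goals semantically.

```python
import hashlib
import time
from typing import Callable, Optional


class ActionCache:
    """Reuse results of completed tasks when the same goal recurs (hypothetical helper)."""

    def __init__(self, ttl_seconds: float = 3600.0, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._store: dict[str, tuple[float, str]] = {}

    @staticmethod
    def _key(goal: str) -> str:
        normalized = " ".join(goal.lower().split())  # ignore case and extra whitespace
        return hashlib.sha256(normalized.encode()).hexdigest()

    def run(self, goal: str, execute: Callable[[str], str]) -> str:
        key = self._key(goal)
        hit: Optional[tuple[float, str]] = self._store.get(key)
        if hit and self.clock() - hit[0] < self.ttl:
            return hit[1]       # cache hit: skip the expensive agent run
        result = execute(goal)  # miss or expired: do the work
        self._store[key] = (self.clock(), result)
        return result


calls = []


def expensive_agent(goal: str) -> str:
    calls.append(goal)
    return f"report for: {goal}"


cache = ActionCache(ttl_seconds=60)
cache.run("Summarize Q3 sales", expensive_agent)
cache.run("  summarize q3 SALES ", expensive_agent)  # same goal after normalization
print(len(calls))  # 1
```

Caching is only safe for idempotent, read-only tasks such as reports or lookups; an action with side effects, like sending an email, should never be answered from cache.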

The Future of AI Agents: Where We’re Heading

Now that we’ve mapped the challenges, let’s look ahead at where AI agents are going. By 2026, AI agents may be as ubiquitous as operating systems and as essential as web browsers.

1. Persistent Digital Companions

Just as we now have personal smartphones, the next frontier is persistent AI companions:

Know your schedule, habits, and communication style

Manage ongoing projects or goals

Interact across apps, devices, and contexts

Can be summoned via voice, chat, or gesture

Imagine:

An AI that starts writing your report when it sees your calendar block

A grocery-ordering agent that adjusts based on your fitness goals

A study agent that reviews your progress and quizzes you weekly

2. Operating System-Level Integration

Expect operating systems (Windows, macOS, Android, Linux) to embed agent frameworks directly:

“AI Start” in Windows: contextual commands, deep file search

“App Agents” in Android: agents per app with sandboxed access

“Universal Clipboard Agents”: monitor, recommend, and clean content across apps

Companies like Apple, Microsoft, and Google are already racing to make agents OS-native rather than app-bound.

3. Agent Marketplaces and App Ecosystems

Soon, users and developers will “install” agents the way they now install apps.

Example:

Download a Real Estate Agent that can analyze markets and properties

Install a Nutrition Agent that plans your meals and tracks nutrients

Deploy a Legal Advisor Agent for contract redlining or patent filing

These agent marketplaces will:

Include privacy and safety scoring

Be modular: tools + memory + model

Support fine-tuning or persona editing

4. Agent Societies and Ecosystems

Advanced deployments may include hundreds of agents collaborating asynchronously.

Examples:

A Research Lab Agent Society:

Literature reader agent

Experimental planner agent

Data analyst agent

Grant writer agent

A Business Agent Suite:

Finance bot

HR assistant

Marketing copywriter

Strategic planner

These ecosystems could be internal to a company or run in the cloud as self-managed clusters of cooperating entities.

5. Towards Artificial General Intelligence (AGI)

AI agents represent a concrete stepping stone toward AGI. Why?

They perceive, reason, act, and adapt across domains.

They maintain goals over long timeframes.

They self-evaluate and revise their own behaviors.

While we’re not at AGI yet, the architectures of current agents, especially those with recursive self-reflection, memory, and planning, are getting closer to general-purpose cognition.

Some predict that the first AGI systems may emerge not as a single model, but from a network of agents working in tandem.

Conclusion

In just a few years, AI has evolved from a Q&A bot into a workforce of intelligent agents capable of coding, planning, learning, and collaborating. These agents:

Work with humans in natural language

Navigate complex environments

Use tools and memory

Are increasingly safe (but still imperfect) collaborators

Whether you’re a developer, educator, business leader, or policymaker, understanding the power and pitfalls of AI agents is now essential.

The future belongs to those who can collaborate not just with people, but with machines that think, act, and evolve.

Final Word

Agents are no longer science fiction.

They are:

Your assistant

Your research partner

Your compliance analyst

Your tutor

Your coder

Your companion

And in many cases, your multiplier.

We must now decide: How do we build agents that are not just smart — but aligned, helpful, and human-centered?